From: Lynn Wheeler <lynn@garlic.com>
Subject: 2314 Disks
Date: 29 Jul, 2024
Blog: Facebook

IBM Wilshire Showroom

When I graduated and joined the science center, it had a 360/67 with increasing banks of 2314s, which quickly grew to five 8+1 banks plus a 5-drive bank (45 drives in all) ... the CSC FE then painted each bank's panel door a different color ... to help the operators map a disk mount request's address to a 2314 bank.

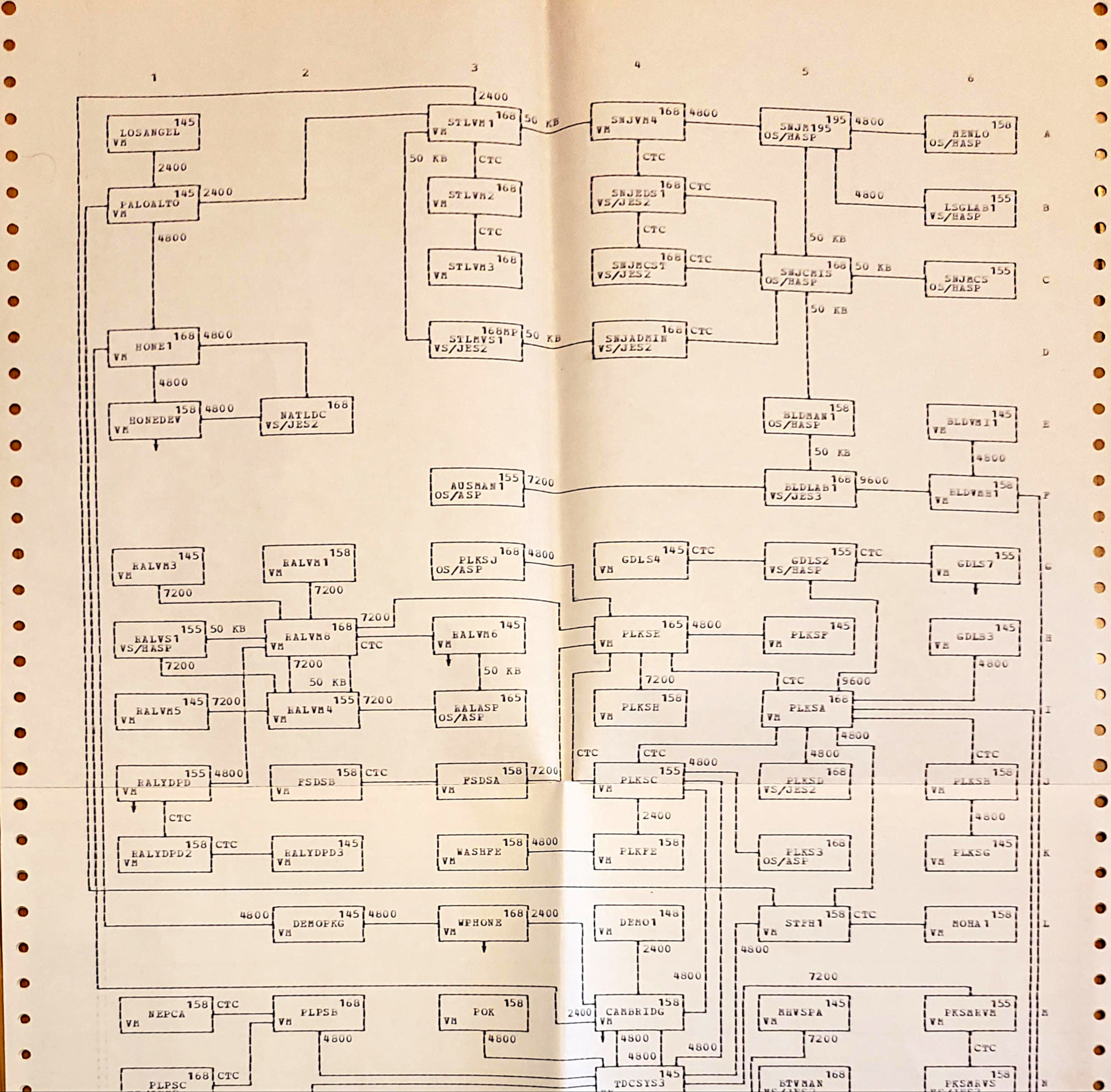

One of my hobbies after joining IBM was enhanced production operating systems for internal datacenters, and the online branch office sales & marketing support HONE systems were a long-time customer (1st cp67/cms, then vm370/cms) ... I most frequently stopped by HONE at 1133 Westchester and on Wilshire Blvd (3424?) ... before all US HONE datacenters were consolidated in Palo Alto (trivia: when facebook 1st moved into silicon valley, it was into a new bldg built next to the former US consolidated HONE datacenter). The first US consolidated operation was single-system-image, loosely-coupled, shared DASD with load-balancing and fall-over across the complex (one of the largest in the world; some airline TPF operations were similar), then a 2nd processor was added to each system (making it the largest; TPF didn't get SMP multiprocessor support for another decade).

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

HONE system posts

https://www.garlic.com/~lynn/subtopic.html#hone

CSC/VM posts

https://www.garlic.com/~lynn/submisc.html#cscvm

SMP, tightly-coupled, multiprocessor posts

https://www.garlic.com/~lynn/subtopic.html#smp

Posts mentioning CSC & 45 2314 drives

https://www.garlic.com/~lynn/2021c.html#72 I/O processors, What could cause a comeback for big-endianism very slowly?

https://www.garlic.com/~lynn/2019.html#51 3090/3880 trivia

https://www.garlic.com/~lynn/2013d.html#50 Arthur C. Clarke Predicts the Internet, 1974

https://www.garlic.com/~lynn/2012n.html#60 The IBM mainframe has been the backbone of most of the world's largest IT organizations for more than 48 years

https://www.garlic.com/~lynn/2011.html#16 Looking for a real Fortran-66 compatible PC compiler (CP/M or DOS or Windows, doesn't matter)

https://www.garlic.com/~lynn/2003b.html#14 Disk drives as commodities. Was Re: Yamhill

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com>
Subject: TYMSHARE Dialup
Date: 30 Jul, 2024
Blog: Facebook

I had an IBM 2741 dialup at home from Mar1970 to Jun1977, when it was replaced by a CDI Miniterm.

Note: in Aug1976, TYMSHARE started offering its VM370/CMS-based online computer conferencing to the (user group) SHARE as VMSHARE ... archives here:

http://vm.marist.edu/~vmshare

I cut a deal w/TYMSHARE to get a monthly tape dump of all VMSHARE (and later PCSHARE) files for putting up on the internal network and systems ... one of the biggest problems was the lawyers' concern that internal employees would be contaminated by unfiltered customer information.

Much later with M/D acquiring TYMSHARE, I was brought in to review GNOSIS for the spinoff:

Ann Hardy at Computer History Museum

https://www.computerhistory.org/collections/catalog/102717167

Ann rose up to become Vice President of the Integrated Systems Division at Tymshare, from 1976 to 1984, which did online airline reservations, home banking, and other applications. When Tymshare was acquired by McDonnell-Douglas in 1984, Ann's position as a female VP became untenable, and was eased out of the company by being encouraged to spin out Gnosis, a secure, capabilities-based operating system developed at Tymshare. Ann founded Key Logic, with funding from Gene Amdahl, which produced KeyKOS, based on Gnosis, for IBM and Amdahl mainframes. After closing Key Logic, Ann became a consultant, leading to her cofounding Agorics with members of Ted Nelson's Xanadu project.

... snip ...

Ann Hardy

https://medium.com/chmcore/someone-elses-computer-the-prehistory-of-cloud-computing-bca25645f89

Ann Hardy is a crucial figure in the story of Tymshare and time-sharing. She began programming in the 1950s, developing software for the IBM Stretch supercomputer. Frustrated at the lack of opportunity and pay inequality for women at IBM -- at one point she discovered she was paid less than half of what the lowest-paid man reporting to her was paid -- Hardy left to study at the University of California, Berkeley, and then joined the Lawrence Livermore National Laboratory in 1962. At the lab, one of her projects involved an early and surprisingly successful time-sharing operating system.

... snip ...

If Discrimination, Then Branch: Ann Hardy's Contributions to Computing

https://computerhistory.org/blog/if-discrimination-then-branch-ann-hardy-s-contributions-to-computing/

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

internal network posts

https://www.garlic.com/~lynn/subnetwork.html#internalnet

online virtual machine commercial services

https://www.garlic.com/~lynn/submain.html#online


From: Lynn Wheeler <lynn@garlic.com>
Subject: DASD CKD
Date: 30 Jul, 2024
Blog: Facebook

CKD was a trade-off between I/O capacity and mainframe memory in the mid-60s ... but by the mid-70s the trade-off had started to flip. IBM 3370 FBA came in the late 70s, and then all disks started to migrate to fixed-block (this can be seen in the 3380 records/track formulas, where record size had to be rounded up to a fixed "cell size"). No CKD DASD have been made for decades; CKD is all simulated on industry-standard fixed-block disks.
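As a rough illustration of the "rounded up to fixed cell size" point, the sketch below computes how many keyless records fit on a track when each record is charged a fixed overhead plus its data length rounded up to whole cells. The constants (32-byte cells, 1499 cells per track, 15 overhead cells and 12 pad bytes per record) are assumptions modeled on the commonly quoted 3380 capacity formula; check them against IBM's 3380 documentation before relying on them.

```python
import math

# Illustrative sketch: CKD records charged in whole fixed-size "cells".
# All constants are ASSUMED values modeled on the 3380 keyless-record
# formula; they are not taken from the post above.
CELL_SIZE = 32        # bytes per cell (assumed)
TRACK_CELLS = 1499    # cells per track (assumed)
COUNT_OVERHEAD = 15   # cells charged per record for count area and gaps (assumed)
DATA_PAD = 12         # bytes added to data length before rounding (assumed)


def cells_for_record(data_len: int) -> int:
    """Cells one keyless record occupies: fixed overhead + data rounded up to whole cells."""
    return COUNT_OVERHEAD + math.ceil((data_len + DATA_PAD) / CELL_SIZE)


def records_per_track(data_len: int) -> int:
    """How many equal-size keyless records fit on one track."""
    return TRACK_CELLS // cells_for_record(data_len)


for size in (1, 20, 21, 4096, 47476):
    print(size, cells_for_record(size), records_per_track(size))
```

With these assumed constants a 1-byte record and a 20-byte record consume the same 16 cells, 4096-byte blocks come out at 10 per track, and a 47476-byte record exactly fills the track.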

I took a two-credit-hour intro to fortran/computers, and at the end of the semester was hired to rewrite 1401 MPIO for the 360/30. The univ was getting a 360/67 for tss/360 to replace its 709/1401, and temporarily got a 360/30 (which had 1401 microcode emulation) to replace the 1401 pending arrival of the 360/67. The univ shut down the datacenter on weekends and I would have it dedicated (although 48hrs w/o sleep made Monday classes hard). Within a year of taking the intro class, the 360/67 showed up and I was hired fulltime, responsible for OS/360 (tss/360 didn't come to production, so it ran as a 360/65 with os/360) ... and I continued to have my dedicated weekend time. Student fortran ran under a second on the 709 (tape to tape), but initially over a minute on the 360/65. I installed HASP and it cut the time in half. I then started revamping stage2 sysgen to place datasets and PDS members to optimize disk seek and multi-track searches, cutting another 2/3rds to 12.9secs; it never got better than the 709 until I installed univ of waterloo WATFOR.
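A quick back-calculation from the one exact figure given (12.9secs) shows the timings hang together. It assumes "cuts time in half" means multiplying by 1/2 and "cutting another 2/3rds" means the remaining time dropped to 1/3; the variable names are illustrative only.

```python
# Back-calculate the student FORTRAN job times from the final 12.9s figure.
# ASSUMPTION: HASP halved the time, and the sysgen/disk-placement rework
# then left one third of the post-HASP time.
final_secs = 12.9                   # after stage2 sysgen / dataset placement rework
after_hasp_secs = final_secs * 3    # before the sysgen rework
initial_secs = after_hasp_secs * 2  # plain OS/360 on the 360/65, before HASP

for label, secs in (("initial OS/360", initial_secs),
                    ("after HASP", after_hasp_secs),
                    ("after sysgen rework", final_secs)):
    print(f"{label:>20}: {secs:5.1f} s")
```

The implied starting point of roughly 77 seconds is consistent with "over a minute".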

My 1st SYSGEN was R9.5 MFT, then I started redoing stage2 sysgen for R11 MFT. MVT showed up with R12, but I didn't do an MVT gen until R15/16 (the R15/16 disk format introduced the ability to specify the VTOC cylinder ... i.e., place it somewhere other than cyl0 to reduce avg. arm seek).
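A simplified model of why moving the VTOC off cylinder 0 helps: if each access goes between the VTOC and a uniformly random data cylinder, the average arm travel is about half the pack from cylinder 0 but only about a quarter of the pack from the middle. The uniform-access model, the cost-proportional-to-distance assumption, and the 200-cylinder figure are all assumptions for illustration, not measurements from the post.

```python
# Hypothetical model: average arm travel between the VTOC cylinder and a
# uniformly random cylinder. NUM_CYLS = 200 is an ASSUMED 2314-like value.
NUM_CYLS = 200


def avg_seek_from(vtoc_cyl: int, num_cyls: int = NUM_CYLS) -> float:
    """Mean distance (in cylinders) from vtoc_cyl to a uniformly chosen cylinder."""
    return sum(abs(vtoc_cyl - c) for c in range(num_cyls)) / num_cyls


print("VTOC on cyl 0:  ", avg_seek_from(0))
print("VTOC mid-pack:  ", avg_seek_from(NUM_CYLS // 2))
```

Under these assumptions the average distance drops from 99.5 to 50 cylinders. Real placement also depended on where the busiest datasets sat, which is what the stage2 sysgen rework addressed.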

Bob Bemer history page (gone 404, but lives on at wayback machine)

https://web.archive.org/web/20180402200149/http://www.bobbemer.com/HISTORY.HTM

360s were originally to be ASCII machines ... but the ASCII unit record gear wasn't ready ... so had to use old tab BCD gear (and EBCDIC) ... biggest computer goof ever:

https://web.archive.org/web/20180513184025/http://www.bobbemer.com/P-BIT.HTM

Learson named in the "biggest computer goof ever" ... then he is CEO and tried (and failed) to block the bureaucrats, careerists and MBAs from destroying Watson culture and legacy ... then 20yrs later, IBM has one of the largest losses in the history of US companies and was being reorged into the 13 baby blues in preparation for breaking up the company

https://www.linkedin.com/pulse/john-boyd-ibm-wild-ducks-lynn-wheeler/

DASD CKD, FBA, multi-track search posts

https://www.garlic.com/~lynn/submain.html#dasd

getting to play disk engineer in bldg 14&15 posts

https://www.garlic.com/~lynn/subtopic.html#disk

IBM downturn/downfall/breakup posts

https://www.garlic.com/~lynn/submisc.html#ibmdownfall

some recent posts mentioning ASCII and/or Bob Bemer

https://www.garlic.com/~lynn/2024d.html#107 Biggest Computer Goof Ever

https://www.garlic.com/~lynn/2024d.html#105 Biggest Computer Goof Ever

https://www.garlic.com/~lynn/2024d.html#103 IBM 360/40, 360/50, 360/65, 360/67, 360/75

https://www.garlic.com/~lynn/2024d.html#99 Interdata Clone IBM Telecommunication Controller

https://www.garlic.com/~lynn/2024d.html#74 Some Email History

https://www.garlic.com/~lynn/2024d.html#33 IBM 23June1969 Unbundling Announcement

https://www.garlic.com/~lynn/2024c.html#93 ASCII/TTY33 Support

https://www.garlic.com/~lynn/2024c.html#53 IBM 3705 & 3725

https://www.garlic.com/~lynn/2024c.html#16 CTSS, Multicis, CP67/CMS

https://www.garlic.com/~lynn/2024c.html#15 360&370 Unix (and other history)

https://www.garlic.com/~lynn/2024c.html#14 Bemer, ASCII, Brooks and Mythical Man Month

https://www.garlic.com/~lynn/2024b.html#114 EBCDIC

https://www.garlic.com/~lynn/2024b.html#113 EBCDIC

https://www.garlic.com/~lynn/2024b.html#97 IBM 360 Announce 7Apr1964

https://www.garlic.com/~lynn/2024b.html#81 rusty iron why ``folklore''?

https://www.garlic.com/~lynn/2024b.html#63 Computers and Boyd

https://www.garlic.com/~lynn/2024b.html#60 Vintage Selectric

https://www.garlic.com/~lynn/2024b.html#59 Vintage HSDT

https://www.garlic.com/~lynn/2024b.html#44 Mainframe Career

https://www.garlic.com/~lynn/2024.html#102 EBCDIC Card Punch Format

https://www.garlic.com/~lynn/2024.html#100 Multicians

https://www.garlic.com/~lynn/2024.html#40 UNIX, MULTICS, CTSS, CSC, CP67

https://www.garlic.com/~lynn/2024.html#31 MIT Area Computing

https://www.garlic.com/~lynn/2024.html#26 1960's COMPUTER HISTORY: REMEMBERING THE IBM SYSTEM/360 MAINFRAME Origin and Technology (IRS, NASA)

https://www.garlic.com/~lynn/2024.html#12 THE RISE OF UNIX. THE SEEDS OF ITS FALL


From: Lynn Wheeler <lynn@garlic.com>
Subject: TYMSHARE Dialup
Date: 30 Jul, 2024
Blog: Facebook

re:

CSC came out to install CP67/CMS (precursor to VM370; the univ was the 3rd installation after CSC itself and MIT Lincoln Labs), which I mostly got to play with during my dedicated weekend time. The first few months I mostly spent rewriting CP67 pathlengths for running os/360 in a virtual machine: the test os/360 stream took 322secs standalone and initially ran 856secs virtually, i.e., 534secs of CP67 CPU, which I got down to 113secs of CP67 CPU. CP67 had 1052 & 2741 support with automatic terminal type identification (using the controller SAD CCW to switch the port scanner terminal type). The univ had some TTY terminals, so I added TTY support integrated with automatic terminal type identification.
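The benchmark figures above can be checked with a little arithmetic: the CP67 CPU time is the virtual run minus the standalone run. The "implied virtual run after" line assumes the guest OS/360 work itself stayed at 322secs, which the post does not state explicitly.

```python
# Arithmetic on the CP67 benchmark figures quoted above (seconds).
STANDALONE = 322       # OS/360 test stream on the bare machine
VIRTUAL_BEFORE = 856   # same stream under CP67, before the pathlength rewrite
CP67_CPU_AFTER = 113   # CP67's own CPU time after the rewrite

cp67_cpu_before = VIRTUAL_BEFORE - STANDALONE  # CP67 overhead before the rewrite
virtual_after = STANDALONE + CP67_CPU_AFTER    # ASSUMES guest time unchanged

print(f"CP67 CPU before: {cp67_cpu_before}s ({cp67_cpu_before / STANDALONE:.0%} of standalone)")
print(f"CP67 CPU after:  {CP67_CPU_AFTER}s ({CP67_CPU_AFTER / STANDALONE:.0%} of standalone)")
print(f"implied virtual run after: {virtual_after}s")
```

Put another way, CP67 overhead went from roughly 1.7x the standalone run to roughly a third of it.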

I then wanted to have single dial-up number ("hunt group") for all terminals ... but IBM had taken short-cut and hardwired port line-speed ... which kicks off univ. program to build clone controller, building channel interface board for Interdata/3, programmed to emulate IBM controller with inclusion of automatic line speed. Later upgraded to Interdata/4 for channel interface and cluster of Interdata/3s for port interfaces. Four of us get written up responsible for (some part of) IBM clone controller business ... initially sold by Interdata and then by Perkin-Elmer

https://en.wikipedia.org/wiki/Interdata

https://en.wikipedia.org/wiki/Perkin-Elmer#Computer_Systems_Division
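For readers unfamiliar with automatic line-speed detection, here is a generic autobaud sketch: time the start bit of the first character a terminal sends, then pick the candidate rate whose bit time is closest. This is a textbook-style technique chosen for illustration, not a description of how the Interdata controller did it. The rate list (110 for TTY-class, 134.5 for 2741-class, plus a few others) and the function name are assumptions, and it presumes the first data bit is a 1 so the start bit can be timed on its own.

```python
# Generic autobaud sketch (hypothetical; NOT the Interdata implementation).
# CANDIDATE_RATES is an ASSUMED list of line speeds in bits per second.
CANDIDATE_RATES = (110, 134.5, 150, 300, 600, 1200)


def guess_baud(start_bit_us: float, rates=CANDIDATE_RATES) -> float:
    """Return the candidate rate whose bit time (microseconds) is closest to the measured start bit."""
    return min(rates, key=lambda r: abs(1_000_000 / r - start_bit_us))


for measured_us in (9100, 7400, 3300, 850):
    print(measured_us, "us ->", guess_baud(measured_us), "bps")
```

Snapping to the nearest known rate, rather than computing an exact rate, tolerates the timing jitter of a sampled line.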

Around the turn of the century, I toured a datacenter where a descendant of the Interdata telecommunication controller was handling the majority of all credit card swipe dial-up terminals east of the Mississippi.

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

clone controller posts

https://www.garlic.com/~lynn/submain.html#360pcm


From: Lynn Wheeler <lynn@garlic.com>
Subject: Private Equity
Date: 31 Jul, 2024
Blog: Facebook

re:

When private equity buys a hospital, assets shrink, new research finds. The study comes as U.S. regulators investigate the industry's profit-taking and its effect on patient care.

https://archive.ph/ClHZ5

Private Equity Professionals Are 'Fighting Fires' in Their Portfolios, Slowing Down the Recovery. At the same time, "the interest rate spike has raised the stakes of holding an asset longer," says Bain & Co.

https://www.institutionalinvestor.com/article/2dkcwhdzmq3njso767d34/portfolio/private-equity-professionals-are-fighting-fires-in-their-portfolios-slowing-down-the-recovery

private equity posts

https://www.garlic.com/~lynn/submisc.html#private.equity

capitalism posts

https://www.garlic.com/~lynn/submisc.html#capitalism


From: Lynn Wheeler <lynn@garlic.com>
Subject: For Big Companies, Felony Convictions Are a Mere Footnote
Date: 31 Jul, 2024
Blog: Facebook

For Big Companies, Felony Convictions Are a Mere Footnote. Boeing guilty plea highlights how corporate convictions rarely have consequences that threaten the business

capitalism posts

https://www.garlic.com/~lynn/submisc.html#capitalism

some posts mentioning Boeing (& fraud):

https://www.garlic.com/~lynn/2019d.html#42 Defense contractors aren't securing sensitive information, watchdog finds

https://www.garlic.com/~lynn/2018d.html#37 Imagining a Cyber Surprise: How Might China Use Stolen OPM Records to Target Trust?

https://www.garlic.com/~lynn/2018c.html#26 DoD watchdog: Air Force failed to effectively manage F-22 modernization

https://www.garlic.com/~lynn/2017h.html#55 Pareto efficiency

https://www.garlic.com/~lynn/2017h.html#54 Pareto efficiency

https://www.garlic.com/~lynn/2015f.html#42 No, the F-35 Can't Fight at Long Range, Either

https://www.garlic.com/~lynn/2014i.html#13 IBM & Boyd

https://www.garlic.com/~lynn/2012g.html#3 Quitting Top IBM Salespeople Say They Are Leaving In Droves

https://www.garlic.com/~lynn/2011f.html#88 Court OKs Firing of Boeing Computer-Security Whistleblowers

https://www.garlic.com/~lynn/2010f.html#75 Is Security a Curse for the Cloud Computing Industry?

https://www.garlic.com/~lynn/2007c.html#18 Securing financial transactions a high priority for 2007

https://www.garlic.com/~lynn/2007.html#5 Securing financial transactions a high priority for 2007


From: Lynn Wheeler <lynn@garlic.com>
Subject: 2314 Disks
Date: 31 Jul, 2024
Blog: Facebook

re:

trivia: ACP/TPF got a 3830 symbolic lock RPQ for loosely-coupled operation (sort of like the later DEC VAXCluster implementation) that was much faster than the reserve/release protocol ... but it was limited to four-system operation, and the disk division discontinued it since it conflicted with string switch (which required two 3830s). HONE did an interesting hack that simulated the processor compare&swap instruction semantics and worked across string switch ... so it extended to 8-system operation (and with SMP, 16 processors).
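The point of compare-and-swap semantics is that a system updates a shared lock record only if the record still holds the value that system expects, so two systems can never both believe they acquired it. Below is an in-memory Python sketch of those semantics with eight contending systems; the class and function names are hypothetical, and a `threading.Lock` stands in for whatever made HONE's disk update atomic (that mechanism is not spelled out in this post).

```python
import threading


class SharedRecord:
    """Hypothetical stand-in for a lock record on shared DASD.

    The mutex models the ASSUMPTION that one compare-and-write completes
    without another system's update interleaving on the same record.
    """

    def __init__(self, value=None):
        self._value = value
        self._atomic = threading.Lock()

    def compare_and_swap(self, expected, new) -> bool:
        """Store `new` only if the record still holds `expected`; report success."""
        with self._atomic:
            if self._value == expected:
                self._value = new
                return True
            return False

    def read(self):
        with self._atomic:
            return self._value


def try_acquire(record: SharedRecord, system_id: str) -> bool:
    """Claim the resource if the record says it is free (None)."""
    return record.compare_and_swap(None, system_id)


def release(record: SharedRecord, system_id: str) -> bool:
    """Free the resource only if this system is still the recorded owner."""
    return record.compare_and_swap(system_id, None)


# Eight hypothetical systems race for the same lock record; exactly one wins.
lock_record = SharedRecord()
winners = []


def contend(system_id: str) -> None:
    if try_acquire(lock_record, system_id):
        winners.append(system_id)


threads = [threading.Thread(target=contend, args=(f"SYS{i}",)) for i in range(8)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print("owner:", lock_record.read(), "winners:", winners)
```

Because `release` is itself a compare-and-swap, a system that no longer owns the record cannot accidentally free someone else's lock.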

archived email with ACP/TPF 3830 disk controller lock RPQ details

... only serializes I/O for channels connected to same 3830 controller.

https://www.garlic.com/~lynn/2008i.html#email800325

in this post which has a little detail about HONE I/O that simulates the processor compare-and-swap instruction semantics (works across string switch and multiple disk controllers)

https://www.garlic.com/~lynn/2008i.html#39

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

HONE system posts

https://www.garlic.com/~lynn/subtopic.html#hone

SMP, tightly-coupled, multiprocessor (and some compare-and-swap)

https://www.garlic.com/~lynn/subtopic.html#smp

other posts getting to play disk engineer in bldgs 14&15

https://www.garlic.com/~lynn/subtopic.html#disk

recent posts mentioning HONE, loosely-coupled, single-system-image

https://www.garlic.com/~lynn/2024d.html#113 ... some 3090 and a little 3081

https://www.garlic.com/~lynn/2024d.html#100 Chipsandcheese article on the CDC6600

https://www.garlic.com/~lynn/2024d.html#92 Computer Virtual Memory

https://www.garlic.com/~lynn/2024d.html#62 360/65, 360/67, 360/75 750ns memory

https://www.garlic.com/~lynn/2024c.html#119 Financial/ATM Processing

https://www.garlic.com/~lynn/2024c.html#112 Multithreading

https://www.garlic.com/~lynn/2024c.html#90 Gordon Bell

https://www.garlic.com/~lynn/2024b.html#72 Vintage Internet and Vintage APL

https://www.garlic.com/~lynn/2024b.html#31 HONE, Performance Predictor, and Configurators

https://www.garlic.com/~lynn/2024b.html#26 HA/CMP

https://www.garlic.com/~lynn/2024b.html#18 IBM 5100

https://www.garlic.com/~lynn/2024.html#112 IBM User Group SHARE

https://www.garlic.com/~lynn/2024.html#88 IBM 360

https://www.garlic.com/~lynn/2024.html#78 Mainframe Performance Optimization

https://www.garlic.com/~lynn/2023g.html#72 MVS/TSO and VM370/CMS Interactive Response

https://www.garlic.com/~lynn/2023g.html#30 Vintage IBM OS/VU

https://www.garlic.com/~lynn/2023g.html#6 Vintage Future System

https://www.garlic.com/~lynn/2023f.html#92 CSC, HONE, 23Jun69 Unbundling, Future System

https://www.garlic.com/~lynn/2023f.html#41 Vintage IBM Mainframes & Minicomputers

https://www.garlic.com/~lynn/2023e.html#87 CP/67, VM/370, VM/SP, VM/XA

https://www.garlic.com/~lynn/2023e.html#75 microcomputers, minicomputers, mainframes, supercomputers

https://www.garlic.com/~lynn/2023e.html#53 VM370/CMS Shared Segments

https://www.garlic.com/~lynn/2023d.html#106 DASD, Channel and I/O long winded trivia

https://www.garlic.com/~lynn/2023d.html#90 IBM 3083

https://www.garlic.com/~lynn/2023d.html#23 VM370, SMP, HONE

https://www.garlic.com/~lynn/2023c.html#77 IBM Big Blue, True Blue, Bleed Blue

https://www.garlic.com/~lynn/2023c.html#10 IBM Downfall

https://www.garlic.com/~lynn/2023b.html#80 IBM 158-3 (& 4341)

https://www.garlic.com/~lynn/2023.html#91 IBM 4341

https://www.garlic.com/~lynn/2023.html#49 23Jun1969 Unbundling and Online IBM Branch Offices

https://www.garlic.com/~lynn/2022h.html#112 TOPS-20 Boot Camp for VMS Users 05-Mar-2022

https://www.garlic.com/~lynn/2022h.html#2 360/91

https://www.garlic.com/~lynn/2022g.html#90 IBM Cambridge Science Center Performance Technology

https://www.garlic.com/~lynn/2022g.html#88 IBM Cambridge Science Center Performance Technology

https://www.garlic.com/~lynn/2022g.html#61 Datacenter Vulnerability

https://www.garlic.com/~lynn/2022f.html#59 The Man That Helped Change IBM

https://www.garlic.com/~lynn/2022f.html#53 z/VM 50th - part 4

https://www.garlic.com/~lynn/2022f.html#44 z/VM 50th

https://www.garlic.com/~lynn/2022f.html#30 IBM Power: The Servers that Apple Should Have Created

https://www.garlic.com/~lynn/2022f.html#10 9 Mainframe Statistics That May Surprise You

https://www.garlic.com/~lynn/2022e.html#50 Channel Program I/O Processing Efficiency

https://www.garlic.com/~lynn/2022d.html#62 IBM 360/50 Simulation From Its Microcode

https://www.garlic.com/~lynn/2022b.html#8 Porting APL to CP67/CMS

https://www.garlic.com/~lynn/2022.html#101 Online Computer Conferencing

https://www.garlic.com/~lynn/2022.html#81 165/168/3033 & 370 virtual memory

https://www.garlic.com/~lynn/2022.html#29 IBM HONE

https://www.garlic.com/~lynn/2021k.html#23 MS/DOS for IBM/PC

https://www.garlic.com/~lynn/2021j.html#108 168 Loosely-Coupled Configuration

https://www.garlic.com/~lynn/2021j.html#25 VM370, 3081, and AT&T Long Lines

https://www.garlic.com/~lynn/2021i.html#77 IBM ACP/TPF

https://www.garlic.com/~lynn/2021i.html#10 A brief overview of IBM's new 7 nm Telum mainframe CPU

https://www.garlic.com/~lynn/2021g.html#34 IBM Fan-fold cards

https://www.garlic.com/~lynn/2021f.html#30 IBM HSDT & HA/CMP

https://www.garlic.com/~lynn/2021e.html#61 Performance Monitoring, Analysis, Simulation, etc

https://www.garlic.com/~lynn/2021d.html#43 IBM Powerpoint sales presentations

https://www.garlic.com/~lynn/2021b.html#80 AT&T Long-lines

https://www.garlic.com/~lynn/2021b.html#15 IBM Recruiting

https://www.garlic.com/~lynn/2021.html#86 IBM Auditors and Games

https://www.garlic.com/~lynn/2021.html#74 Airline Reservation System


From: Lynn Wheeler <lynn@garlic.com>
Subject: For Big Companies, Felony Convictions Are a Mere Footnote
Date: 31 Jul, 2024
Blog: Facebook

re:

The rest of the excerpt:

The accounting firm Arthur Andersen collapsed in 2002 after prosecutors indicted the company for shredding evidence related to its audits of failed energy conglomerate Enron. For years after Andersen's demise, prosecutors held back from indicting major corporations, fearing they would kill the firm in the process.

... snip ...

The Sarbanes-Oxley joke was that congress felt so badly about the Andersen collapse that they really increased the audit requirements for public companies. The rhetoric on the floor of congress was that SOX would prevent future ENRONs and guarantee executives & auditors did jail time ... however it required SEC to do something. GAO did analysis of public company fraudulent financial filings showing that they even increased after SOX went into effect (and nobody did jail time).

http://www.gao.gov/products/GAO-03-138

http://www.gao.gov/products/GAO-06-678

http://www.gao.gov/products/GAO-06-1053R

The other observation was that possibly the only part of SOX that was going to make some difference might be the informants/whistleblowers (folklore was that one of the congressional members involved in SOX had formerly been with the FBI, involved in taking down organized crime, and supposedly what made it possible were informants/whistleblowers).

Something similar showed up with the economic mess: from 2001 to 2008, financial houses securitized over $27T in mortgages/loans, paying for triple-A ratings (when the rating agencies knew they weren't worth triple-A, per the Oct2008 congressional hearings) and selling them into the bond market. At YE2008, just the four largest too-big-to-fail were still carrying $5.2T in offbook toxic CDOs.

Then it was found that some of the too-big-to-fail were money laundering for terrorists and drug cartels (there were various stories that it enabled drug cartels to buy military-grade equipment largely responsible for violence on both sides of the border). There would be repeated "deferred prosecution" (promising never to do it again, each time) ... supposedly if they repeated they would be prosecuted (but apparently previous violations were consistently ignored). This gave rise to too-big-to-prosecute and too-big-to-jail ... in addition to too-big-to-fail.

https://en.wikipedia.org/wiki/Deferred_prosecution

trivia: 1999: I was asked to try and help block (we failed) the coming economic mess; 2004: I was invited to the annual conference of EU CEOs and heads of financial exchanges, where that year's theme was that EU companies dealing with US companies were being forced into performing SOX audits (aka I was there to discuss the effectiveness of SOX).

ENRON posts

https://www.garlic.com/~lynn/submisc.html#enron

Sarbanes-Oxley posts

https://www.garlic.com/~lynn/submisc.html#sarbanes-oxley

whistleblower posts

https://www.garlic.com/~lynn/submisc.html#whistleblower

Fraudulent Financial Filing posts

https://www.garlic.com/~lynn/submisc.html#financial.reporting.fraud

economic mess posts

https://www.garlic.com/~lynn/submisc.html#economic.mess

too-big-to-fail (too-big-to-prosecute, too-big-to-jail) posts

https://www.garlic.com/~lynn/submisc.html#too-big-to-fail

(offbook) toxic CDOs

https://www.garlic.com/~lynn/submisc.html#toxic.cdo

money laundering posts

https://www.garlic.com/~lynn/submisc.html#money.laundering

capitalism posts

https://www.garlic.com/~lynn/submisc.html#capitalism

some posts mentioning deferred prosecution

https://www.garlic.com/~lynn/2024d.html#59 Too-Big-To-Fail Money Laundering

https://www.garlic.com/~lynn/2024.html#58 Sales of US-Made Guns and Weapons, Including US Army-Issued Ones, Are Under Spotlight in Mexico Again

https://www.garlic.com/~lynn/2024.html#19 Huge Number of Migrants Highlights Border Crisis

https://www.garlic.com/~lynn/2022h.html#89 As US-style corporate leniency deals for bribery and corruption go global, repeat offenders are on the rise

https://www.garlic.com/~lynn/2021k.html#73 Wall Street Has Deployed a Dirty Tricks Playbook Against Whistleblowers for Decades, Now the Secrets Are Spilling Out

https://www.garlic.com/~lynn/2018e.html#111 Pigs Want To Feed at the Trough Again: Bernanke, Geithner and Paulson Use Crisis Anniversary to Ask for More Bailout Powers

https://www.garlic.com/~lynn/2018d.html#60 Dirty Money, Shiny Architecture

https://www.garlic.com/~lynn/2017h.html#56 Feds WIMP

https://www.garlic.com/~lynn/2017b.html#39 Trump to sign cyber security order

https://www.garlic.com/~lynn/2017b.html#13 Trump to sign cyber security order

https://www.garlic.com/~lynn/2017.html#45 Western Union Admits Anti-Money Laundering and Consumer Fraud Violations, Forfeits $586 Million in Settlement with Justice Department and Federal Trade Commission

https://www.garlic.com/~lynn/2016e.html#109 Why Aren't Any Bankers in Prison for Causing the Financial Crisis?

https://www.garlic.com/~lynn/2016c.html#99 Why Is the Obama Administration Trying to Keep 11,000 Documents Sealed?

https://www.garlic.com/~lynn/2016c.html#41 Qbasic

https://www.garlic.com/~lynn/2016c.html#29 Qbasic

https://www.garlic.com/~lynn/2016b.html#73 Qbasic

https://www.garlic.com/~lynn/2016b.html#0 Thanks Obama

https://www.garlic.com/~lynn/2016.html#36 I Feel Old

https://www.garlic.com/~lynn/2016.html#10 25 Years: How the Web began

https://www.garlic.com/~lynn/2015h.html#65 Economic Mess

https://www.garlic.com/~lynn/2015h.html#47 rationality

https://www.garlic.com/~lynn/2015h.html#44 rationality

https://www.garlic.com/~lynn/2015h.html#31 Talk of Criminally Prosecuting Corporations Up, Actual Prosecutions Down

https://www.garlic.com/~lynn/2015f.html#61 1973--TI 8 digit electric calculator--$99.95

https://www.garlic.com/~lynn/2015f.html#57 1973--TI 8 digit electric calculator--$99.95

https://www.garlic.com/~lynn/2015f.html#37 LIBOR: History's Largest Financial Crime that the WSJ and NYT Would Like You to Forget

https://www.garlic.com/~lynn/2015f.html#36 Eric Holder, Wall Street Double Agent, Comes in From the Cold

https://www.garlic.com/~lynn/2015e.html#47 Do we REALLY NEED all this regulatory oversight?

https://www.garlic.com/~lynn/2015e.html#44 1973--TI 8 digit electric calculator--$99.95

https://www.garlic.com/~lynn/2015e.html#23 1973--TI 8 digit electric calculator--$99.95

https://www.garlic.com/~lynn/2015d.html#80 Greedy Banks Nailed With $5 BILLION+ Fine For Fraud And Corruption

https://www.garlic.com/~lynn/2014i.html#10 Instead of focusing on big fines, law enforcement should seek long prison terms for the responsible executives


From: Lynn Wheeler <lynn@garlic.com>
Subject: Ampere Arm Server CPUs To Get 512 Cores, AI Accelerator
Date: 31 Jul, 2024
Blog: Facebook

AmpereOne Aurora In Development With Up To 512 Cores, AmpereOne Prices Published

There was a comparison of a max-configured IBM z196 (80 processors, 50BIPS, $30M, i.e., $600,000/BIPS) and IBM E5-2600 server blades (16 processors, 500BIPS, base list price $1815, i.e., $3.63/BIPS). Note the BIPS benchmark is the number of iterations of a program compared to the reference platform (not an actual count of instructions). At the time, large cloud operations claimed they were assembling their own server blades for 1/3rd the price of brand-name servers ($605, $1.21/BIPS at 500BIPS: ten times the BIPS of a max-configured z196 at about 1/500000 the price/BIPS). Then there were articles that the server chip vendors were shipping at least half their product directly to large cloud operators (that assemble their own servers). Shortly afterward, IBM sold off its server product line.
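The price/performance figures above can be recomputed directly from the quoted prices and BIPS ratings. The only derived input is the self-assembled blade price, taken as 1/3 of the $1815 list price per the text; the dictionary labels are illustrative.

```python
# Recompute the price/performance figures quoted above (prices in US$).
SYSTEMS = {
    "max-configured z196": (30_000_000, 50),
    "E5-2600 blade, list price": (1815, 500),
    "self-assembled blade (1/3 price)": (1815 / 3, 500),
}


def dollars_per_bips(price: float, bips: float) -> float:
    """Price divided by benchmark BIPS (iterations relative to a reference platform)."""
    return price / bips


for name, (price, bips) in SYSTEMS.items():
    print(f"{name}: ${price:,.0f}, {bips} BIPS, ${dollars_per_bips(price, bips):,.2f}/BIPS")

z196 = dollars_per_bips(*SYSTEMS["max-configured z196"])
diy = dollars_per_bips(*SYSTEMS["self-assembled blade (1/3 price)"])
print(f"z196 price/BIPS is about {z196 / diy:,.0f}x the self-assembled blade's")
```

The ratio comes out near 496,000, which the text rounds to "1/500000 the price/BIPS".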

cloud megadatacenter posts

https://www.garlic.com/~lynn/submisc.html#megadatacenter

some posts mentioning IBM z196/e5-2600

https://www.garlic.com/~lynn/2023g.html#40 Vintage Mainframe

https://www.garlic.com/~lynn/2022h.html#112 TOPS-20 Boot Camp for VMS Users 05-Mar-2022

https://www.garlic.com/~lynn/2022c.html#19 Telum & z16

https://www.garlic.com/~lynn/2022b.html#63 Mainframes

https://www.garlic.com/~lynn/2021j.html#56 IBM and Cloud Computing

https://www.garlic.com/~lynn/2021i.html#92 How IBM lost the cloud

https://www.garlic.com/~lynn/2021b.html#0 Will The Cloud Take Down The Mainframe?

https://www.garlic.com/~lynn/2014f.html#78 Over in the Mainframe Experts Network LinkedIn group

https://www.garlic.com/~lynn/2014f.html#67 Is end of mainframe near ?


From: Lynn Wheeler <lynn@garlic.com>
Subject: Saudi Arabia and 9/11
Date: 31 Jul, 2024
Blog: Facebook

Saudi Arabia and 9/11

After 9/11, victims were prohibited from suing Saudi Arabia over its responsibility, and that wasn't lifted until 2013. There has been some recent progress in holding Saudi Arabia accountable:

New Claims of Saudi Role in 9/11 Bring Victims' Families Back to Court in Lawsuit Against Riyadh

https://www.nysun.com/article/new-claims-of-saudi-role-in-9-11-bring-victims-families-back-to-court-in-lawsuit-against-riyadh

Lawyers for Saudi Arabia seek dismissal of claims it supported the Sept. 11 hijackers

https://abcnews.go.com/US/wireStory/lawyers-saudi-arabia-seek-dismissal-claims-supported-sept-112458690

September 11th families suing Saudi Arabia back in federal court in Lower Manhattan, New York City

https://abc7ny.com/post/september-11th-families-suing-saudi-arabia-back-federal-court-lower-manhattan-new-york-city/15126848/

Video: 'Wow, shocking': '9/11 Justice' president reacts to report on possible Saudi involvement in 9/11

https://www.cnn.com/2024/07/31/us/video/saudi-arabia-9-11-report-eagleson-lead-digvid

9/11 defendants reach plea deal with Defense Department in Saudi Arabia lawsuit

https://www.fox5ny.com/news/9-11-justice-families-saudi-arabia-lawsuit-hearing-attacks

9/11 families furious over plea deal for terror mastermind on same day Saudi lawsuit before judge

https://www.bostonherald.com/2024/07/31/9-11-families-furious-over-plea-deal-for-terror-mastermind-on-same-day-saudi-lawsuit-before-judge/

Judge hears evidence against Saudi Arabia in 9/11 families lawsuit

https://www.newsnationnow.com/world/9-11-families-news-conference-saudi-lawsuit-hearing/

9/11 families furious over plea deal for terror mastermind on same day Saudi lawsuit goes before judge | Nation World

https://www.rv-times.com/nation_world/9-11-families-furious-over-plea-deal-for-terror-mastermind-on-same-day-saudi-lawsuit/article_762df686-9b9e-58df-9140-30268848e252.html

Latest news: some of the recent 9/11 Saudi Arabia material wasn't released by the US gov. but was obtained from the British gov.

U.S. Signals It Will Release Some Still-Secret Files on Saudi Arabia and 9/11

https://www.nytimes.com/2021/08/09/us/politics/sept-11-saudi-arabia-biden.html

Democratic senators increase pressure to declassify 9/11 documents related to Saudi role in attacks

https://thehill.com/policy/national-security/566547-democratic-senators-increase-pressure-to-declassify-9-11-documents/

military-industrial(-congressional) complex posts

https://www.garlic.com/~lynn/submisc.html#military.industrial.complex

perpetual war posts

https://www.garlic.com/~lynn/submisc.html#perpetual.war

WMD posts

https://www.garlic.com/~lynn/submisc.html#wmds

some past posts mentioning Saudi Arabia and 9/11

https://www.garlic.com/~lynn/2020.html#22 The Saudi Connection: Inside the 9/11 Case That Divided the F.B.I

https://www.garlic.com/~lynn/2019e.html#143 "Undeniable Evidence": Explosive Classified Docs Reveal Afghan War Mass Deception

https://www.garlic.com/~lynn/2019e.html#85 Just and Unjust Wars

https://www.garlic.com/~lynn/2019e.html#70 Since 2001 We Have Spent $32 Million Per Hour on War

https://www.garlic.com/~lynn/2019e.html#67 Profit propaganda ads witch-hunt era

https://www.garlic.com/~lynn/2019d.html#99 Trump claims he's the messiah. Maybe he should quit while he's ahead

https://www.garlic.com/~lynn/2019d.html#79 Bretton Woods Institutions: Enforcers, Not Saviours?

https://www.garlic.com/~lynn/2019d.html#54 Global Warming and U.S. National Security Diplomacy

https://www.garlic.com/~lynn/2019d.html#7 You paid taxes. These corporations didn't

https://www.garlic.com/~lynn/2019b.html#56 U.S. Has Spent Six Trillion Dollars on Wars That Killed Half a Million People Since 9/11, Report Says

https://www.garlic.com/~lynn/2019.html#45 Jeffrey Skilling, Former Enron Chief, Released After 12 Years in Prison

https://www.garlic.com/~lynn/2019.html#42 Army Special Operations Forces Unconventional Warfare

https://www.garlic.com/~lynn/2018b.html#65 Doubts about the HR departments that require knowledge of technology that does not exist

https://www.garlic.com/~lynn/2016c.html#93 Qbasic

https://www.garlic.com/~lynn/2015g.html#13 1973--TI 8 digit electric calculator--$99.95

https://www.garlic.com/~lynn/2015g.html#12 1973--TI 8 digit electric calculator--$99.95

https://www.garlic.com/~lynn/2015d.html#54 The Jeb Bush Adviser Who Should Scare You

https://www.garlic.com/~lynn/2015.html#72 George W. Bush: Still the worst; A new study ranks Bush near the very bottom in history

https://www.garlic.com/~lynn/2014d.html#89 Difference between MVS and z / OS systems

https://www.garlic.com/~lynn/2014d.html#11 Royal Pardon For Turing

https://www.garlic.com/~lynn/2014d.html#4 Royal Pardon For Turing

https://www.garlic.com/~lynn/2014c.html#103 Royal Pardon For Turing

https://www.garlic.com/~lynn/2013j.html#30 What Makes a Tax System Bizarre?

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: For Big Companies, Felony Convictions Are a Mere Footnote Date: 31 Jul, 2024 Blog: Facebook

re:

The Madoff congressional hearings included testimony from the person who had tried (unsuccessfully) for a decade to get the SEC to do something about Madoff (the SEC's hand was finally forced when Madoff turned himself in; the story is that he had defrauded some unsavory characters and was looking for gov. protection). In any case, part of the hearing testimony was that informants turn up 13 times more fraud than audits (while the SEC had a 1-800 number for complaints about audits, it didn't have a 1-800 "tip" line).

Madoff posts

https://www.garlic.com/~lynn/submisc.html#madoff

Sarbanes-Oxley posts

https://www.garlic.com/~lynn/submisc.html#sarbanes-oxley

whistleblower posts

https://www.garlic.com/~lynn/submisc.html#whistleblower

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Private Equity Giants Invest More Than $200M in Federal Races to Protect Their Lucrative Tax Loophole Date: 02 Aug, 2024 Blog: Facebook

Private Equity Giants Invest More Than $200M in Federal Races to Protect Their Lucrative Tax Loophole

... trivia, the industry had gotten such a bad reputation during the "S&L Crisis" that they changed the name to private equity, and "junk bonds" became "high-yield bonds". There was a business TV news show where the interviewer repeatedly said "junk bonds" and the person being interviewed kept saying "high-yield bonds".

private-equity posts

https://www.garlic.com/~lynn/submisc.html#private.equity

capitalism posts

https://www.garlic.com/~lynn/submisc.html#capitalism

tax fraud, tax evasion, tax loopholes, tax abuse, tax avoidance, tax haven posts

https://www.garlic.com/~lynn/submisc.html#tax.evasion

S&L Crisis posts

https://www.garlic.com/~lynn/submisc.html#s&l.crisis

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: 360 1052-7 Operator's Console Date: 02 Aug, 2024 Blog: Facebook

I took a two credit hr intro to fortran/computers class and at the end of the semester was hired to rewrite 1401 MPIO for the 360/30. The univ was getting a 360/67 (for tss/360) to replace its 709/1401 and temporarily got a 360/30 (replacing the 1401) pending the 360/67. The univ. shut down the datacenter over the weekend and I had the whole place dedicated, although 48hrs w/o sleep made Monday classes hard. They gave me a bunch of hardware and software manuals and I got to design my own monitor, device drivers, interrupt handlers, error recovery, storage management, etc and within a few weeks had a 2000 card assembler program. Within a year of taking the intro class, the 360/67 arrived and I was hired fulltime, responsible for os/360 (tss/360 never came to production), and I continued to have my 48hr weekend window. One weekend I had been at it for some 30+hrs when the 1052-7 console (same as on the 360/30) stopped typing and the machine would just ring the bell. I spent 30-40mins trying everything I could think of before I hit the 1052-7 with my fist and the paper dropped to the floor. It turns out that the end of the (fan-fold) paper had passed the paper sensing finger (resulting in a 1052-7 unit check with intervention required), but there was enough friction to keep the paper in position so it wasn't apparent (until the console was jostled by my fist).

archived posts mentioning 1052-7 and end of paper

https://www.garlic.com/~lynn/2023f.html#108 CSC, HONE, 23Jun69 Unbundling, Future System

https://www.garlic.com/~lynn/2022d.html#27 COMPUTER HISTORY: REMEMBERING THE IBM SYSTEM/360 MAINFRAME, its Origin and Technology

https://www.garlic.com/~lynn/2022.html#5 360 IPL

https://www.garlic.com/~lynn/2017h.html#5 IBM System/360

https://www.garlic.com/~lynn/2017.html#38 Paper tape (was Re: Hidden Figures)

https://www.garlic.com/~lynn/2010n.html#43 Paper tape

https://www.garlic.com/~lynn/2006n.html#1 The System/360 Model 20 Wasn't As Bad As All That

https://www.garlic.com/~lynn/2006k.html#27 PDP-1

https://www.garlic.com/~lynn/2006f.html#23 Old PCs--environmental hazard

https://www.garlic.com/~lynn/2005c.html#12 The mid-seventies SHARE survey

https://www.garlic.com/~lynn/2002j.html#16 Ever inflicted revenge on hardware ?

https://www.garlic.com/~lynn/2001.html#3 First video terminal?

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: 360 1052-7 Operator's Console Date: 02 Aug, 2024 Blog: Facebook

re:

... other trivia: student fortran jobs ran in less than a second on the 709 (tape->tape) ... initially with os/360 on the 360/67 they ran over a minute. I install HASP and it cuts the time in half. I then start redoing stage2 sysgen to place datasets and PDS members to optimize arm seek and multi-track search, cutting another 2/3rds to 12.9secs; it never got better than the 709 until I install univ. of waterloo WATFOR.

Before I graduated, I was hired fulltime into a small group in the Boeing CFO office to help with the formation of Boeing Computer Services (consolidating all dataprocessing into an independent business unit). I thought the Renton datacenter was the largest in the world, a couple hundred million in 360s, 360/65s arriving faster than they could be installed, boxes constantly staged in hallways around the machine room. Lots of politics between the Renton director and the CFO, who only had a 360/30 up at Boeing field for payroll (although they enlarged the room and installed a 360/67 for me to play with when I wasn't doing other stuff).

recent posts mentioning Watfor and Boeing CFO/Renton

https://www.garlic.com/~lynn/2024d.html#103 IBM 360/40, 360/50, 360/65, 360/67, 360/75

https://www.garlic.com/~lynn/2024d.html#76 Some work before IBM

https://www.garlic.com/~lynn/2024d.html#22 Early Computer Use

https://www.garlic.com/~lynn/2024c.html#93 ASCII/TTY33 Support

https://www.garlic.com/~lynn/2024c.html#15 360&370 Unix (and other history)

https://www.garlic.com/~lynn/2024b.html#97 IBM 360 Announce 7Apr1964

https://www.garlic.com/~lynn/2024b.html#63 Computers and Boyd

https://www.garlic.com/~lynn/2024b.html#60 Vintage Selectric

https://www.garlic.com/~lynn/2024b.html#44 Mainframe Career

https://www.garlic.com/~lynn/2024.html#87 IBM 360

https://www.garlic.com/~lynn/2024.html#73 UNIX, MULTICS, CTSS, CSC, CP67

https://www.garlic.com/~lynn/2024.html#43 Univ, Boeing Renton and "Spook Base"

https://www.garlic.com/~lynn/2024.html#17 IBM Embraces Virtual Memory -- Finally

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: 50 years ago, CP/M started the microcomputer revolution Date: 03 Aug, 2024 Blog: Facebook

50 years ago, CP/M started the microcomputer revolution

some of the MIT CTSS/7094 people

https://en.wikipedia.org/wiki/Compatible_Time-Sharing_System

went to the 5th flr for Multics

https://en.wikipedia.org/wiki/Multics

Others went to the IBM science center on the 4th flr and did virtual machines.

https://en.wikipedia.org/wiki/Cambridge_Scientific_Center

They originally wanted a 360/50 to do hardware mods to add virtual memory, but all the extra 360/50s were going to the FAA ATC program, and so they had to settle for a 360/40 ... doing CP40/CMS (control program/40, cambridge monitor system)

https://en.wikipedia.org/wiki/IBM_CP-40

CMS would run on the real 360/40 machine (pending the CP40 virtual machine being operational). CMS started with a single letter for each filesystem ("P", "S", etc), which was mapped to a "symbolic name" that started out mapped to a physical (360/40) 2311 disk, and later to minidisks.

Then when the 360/67, standard with virtual memory, became available, CP40/CMS morphs into CP67/CMS (later VM370/CMS, virtual machine 370 and conversational monitor system). CMS Program Logic Manual (CP67/CMS Version 3.1)

https://bitsavers.org/pdf/ibm/360/cp67/GY20-0591-1_CMS_PLM_Oct1971.pdf

(going back to the CMS implementation for the real 360/40) the system API convention, pg4:

Symbolic Name: CON1, DSK1, DSK2, DSK3, DSK4, DSK5, DSK6, PRN1, RDR1, PCH1, TAP1, TAP2

... snip ...
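A minimal sketch of the two-level indirection described above (filesystem letter -> symbolic name -> device), in Python purely for illustration and not taken from CMS source. The symbolic names come from the PLM list; the letter assignments (P->DSK1, S->DSK2), device addresses and minidisk extents are all hypothetical. The point is that moving from real 2311 drives to minidisks only changes the second table, while the letters used by applications stay the same.

```python
from dataclasses import dataclass
from typing import Dict, Union


@dataclass(frozen=True)
class RealDisk:
    model: str
    address: int  # hypothetical device address


@dataclass(frozen=True)
class MiniDisk:
    real: RealDisk
    start_cyl: int  # first cylinder of the minidisk extent on the real drive
    cyls: int       # size of the extent in cylinders


Device = Union[RealDisk, MiniDisk]

# Level 1 (hypothetical assignments): filesystem letter -> symbolic name from the PLM list
LETTER_TO_SYMBOLIC: Dict[str, str] = {"P": "DSK1", "S": "DSK2"}


def resolve(letter: str, symbolic_to_device: Dict[str, Device]) -> Device:
    """Follow letter -> symbolic name -> device (the level 2 table is passed in)."""
    return symbolic_to_device[LETTER_TO_SYMBOLIC[letter]]


# Level 2 on a bare 360/40: symbolic names point at real 2311 drives.
bare = {"DSK1": RealDisk("2311", 0x190), "DSK2": RealDisk("2311", 0x191)}

# Level 2 later: same symbolic names, now minidisk extents carved out of one drive.
drive = RealDisk("2311", 0x190)
mini = {"DSK1": MiniDisk(drive, 0, 100), "DSK2": MiniDisk(drive, 100, 100)}

print(resolve("P", bare))  # RealDisk(model='2311', address=400)
print(resolve("S", mini))
```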

trivia: I took a two credit hr intro to fortran/computers class and at the end of the semester was hired to rewrite 1401 MPIO for the 360/30. The univ was getting a 360/67 for tss/360 to replace its 709/1401 and temporarily got a 360/30 (that had 1401 microcode emulation) to replace the 1401, pending arrival of the 360/67 (the univ shut down the datacenter on weekends, and I would have it dedicated, although 48hrs w/o sleep made Monday classes hard). I was given a bunch of hardware & software manuals and got to design my own monitor, device drivers, interrupt handlers, error recovery, storage management, etc ... and within a few weeks had a 2000 card assembler program.

Within a year of taking the intro class, the 360/67 showed up and I was hired fulltime with responsibility for OS/360 (tss/360 didn't come to production, so the machine ran as a 360/65 with os/360) ... and I continued to have my dedicated weekend time. Student fortran ran under a second on the 709 (tape to tape), but initially over a minute on the 360/65. I install HASP and it cuts the time in half. I then start revamping stage2 sysgen to place datasets and PDS members to optimize disk seek and multi-track searches, cutting another 2/3rds to 12.9secs; it never got better than the 709 until I install univ of waterloo WATFOR. My 1st SYSGEN was R9.5MFT, then I started redoing stage2 sysgen for R11MFT. MVT shows up with R12 but I didn't do an MVT gen until R15/16 (the 15/16 disk format shows up with the ability to specify the VTOC cyl ... aka place it somewhere other than cyl0 to reduce avg. arm seek).
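A rough back-of-envelope sketch of why moving the VTOC off cyl0 helps, assuming a 200-cylinder volume and data accesses spread uniformly across it (real workloads are not uniform, and this only counts arm travel between the VTOC and the data):

```python
def avg_arm_travel(vtoc_cyl: int, ncyl: int = 200) -> float:
    """Mean |vtoc_cyl - c| over every data cylinder c in 0..ncyl-1.

    Assumes accesses are spread uniformly over the volume (a simplification).
    """
    return sum(abs(vtoc_cyl - c) for c in range(ncyl)) / ncyl


print(f"VTOC on cyl0:    {avg_arm_travel(0):.1f} cyls")    # 99.5
print(f"VTOC mid-volume: {avg_arm_travel(100):.1f} cyls")  # 50.0
```

Under these assumptions the average travel with the VTOC on cyl0 is about half the volume, and placing it in the middle roughly halves that again.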

Along the way, CSC came out to install CP67/CMS (the 3rd installation after CSC itself and MIT Lincoln Labs; CP67 was the precursor to VM370), which I mostly got to play with during my dedicated weekend time. The first few months I mostly spent rewriting CP67 pathlengths for running os/360 in a virtual machine: a test os/360 stream ran 322secs stand-alone and initially 856secs virtually; CP67 CPU went from 534secs down to 113secs. CP67 had 1052 & 2741 support with automatic terminal type identification (using the controller SAD CCW to switch the port scanner terminal type). The univ had some TTY terminals, so I added TTY support integrated with the automatic terminal type identification.
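The CP67 numbers above as simple arithmetic, assuming the 322secs test stream time is the os/360 work itself and everything beyond it in the virtual run is CP67 CPU overhead (the "after" total is derived under that assumption, not a separately reported figure):

```latex
\begin{aligned}
T_{\text{virtual}} &= T_{\text{os360}} + T_{\text{CP67}} \\
856 &= 322 + 534 && \text{(before pathlength rewrite)} \\
322 + 113 &= 435 && \text{(after)} \\
\frac{T_{\text{CP67}}}{T_{\text{os360}}} &: \; \frac{534}{322} \approx 1.66 \;\longrightarrow\; \frac{113}{322} \approx 0.35
\end{aligned}
```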

before msdos

https://en.wikipedia.org/wiki/MS-DOS

there was Seattle computer

https://en.wikipedia.org/wiki/Seattle_Computer_Products

before Seattle computer, there was CP/M

https://en.wikipedia.org/wiki/CP/M

before developing CP/M, Kildall worked on IBM CP67/CMS at NPS

https://en.wikipedia.org/wiki/Naval_Postgraduate_School

aka: CP/M ... control program/microcomputer

Opel's obit ...

https://www.pcworld.com/article/243311/former_ibm_ceo_john_opel_dies.html

According to the New York Times, it was Opel who met with Bill Gates, CEO of the then-small software firm Microsoft, to discuss the possibility of using Microsoft PC-DOS OS for IBM's about-to-be-released PC. Opel set up the meeting at the request of Gates' mother, Mary Maxwell Gates. The two had both served on the National United Way's executive committee.

... snip ...

other trivia: Boca claimed that they weren't doing any software for ACORN (code name for the IBM/PC), and so a small IBM group in Silicon Valley formed to do ACORN software (many who had been involved with CP/67-CMS and/or its follow-on VM/370-CMS) ... and every few weeks, contact with Boca confirmed that the decision hadn't changed. Then at some point, Boca changed its mind and the silicon valley group was told that if they wanted to do ACORN software, they would have to move to Boca (only one person accepted the offer, didn't last long and returned to silicon valley). Then there was a joke that Boca didn't want any internal company competition and it was better to deal with an external organization via contract than with what went on in internal IBM politics.

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

some recent posts mentioning early work on CP67 pathlengths for running os/360

https://www.garlic.com/~lynn/2024e.html#3 TYMSHARE Dialup

https://www.garlic.com/~lynn/2024d.html#111 GNOME bans Manjaro Core Team Member for uttering "Lunduke"

https://www.garlic.com/~lynn/2024d.html#103 IBM 360/40, 360/50, 360/65, 360/67, 360/75

https://www.garlic.com/~lynn/2024d.html#99 Interdata Clone IBM Telecommunication Controller

https://www.garlic.com/~lynn/2024d.html#90 Computer Virtual Memory

https://www.garlic.com/~lynn/2024d.html#76 Some work before IBM

https://www.garlic.com/~lynn/2024d.html#36 This New Internet Thing, Chapter 8

https://www.garlic.com/~lynn/2024c.html#53 IBM 3705 & 3725

https://www.garlic.com/~lynn/2024c.html#15 360&370 Unix (and other history)

https://www.garlic.com/~lynn/2024b.html#114 EBCDIC

https://www.garlic.com/~lynn/2024b.html#97 IBM 360 Announce 7Apr1964

https://www.garlic.com/~lynn/2024b.html#95 Ferranti Atlas and Virtual Memory

https://www.garlic.com/~lynn/2024b.html#63 Computers and Boyd

https://www.garlic.com/~lynn/2024b.html#60 Vintage Selectric

https://www.garlic.com/~lynn/2024b.html#44 Mainframe Career

https://www.garlic.com/~lynn/2024b.html#17 IBM 5100

https://www.garlic.com/~lynn/2024.html#94 MVS SRM

https://www.garlic.com/~lynn/2024.html#87 IBM 360

https://www.garlic.com/~lynn/2024.html#73 UNIX, MULTICS, CTSS, CSC, CP67

https://www.garlic.com/~lynn/2024.html#28 IBM Disks and Drums

https://www.garlic.com/~lynn/2024.html#17 IBM Embraces Virtual Memory -- Finally

https://www.garlic.com/~lynn/2024.html#12 THE RISE OF UNIX. THE SEEDS OF ITS FALL

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: IBM Downfall and Make-over Date: 03 Aug, 2024 Blog: Facebook

re: SNA/TCPIP; in the 80s, the communication group was fighting off client/server and distributed computing, trying to preserve their dumb terminal paradigm ... in the late 80s, a senior disk engineer got a talk scheduled at an annual, world-wide, internal, communication group conference, supposedly on 3174 performance ... but opened the talk with the statement that the communication group was going to be responsible for the demise of the disk division. The disk division was seeing a drop in disk sales with data fleeing datacenters to more distributed computing friendly platforms. They had come up with a number of solutions but were constantly vetoed by the communication group (with their corporate strategic ownership of everything that crossed datacenter walls) ... the communication group's datacenter stranglehold wasn't just disks, and a couple years later IBM has one of the largest losses in the history of US companies.

As a partial work-around, a senior disk division executive was investing in distributed computing startups that would use IBM disks ... and would periodically ask us to drop by his investments to see if we could provide any help.

Learson was CEO and tried (and failed) to block the bureaucrats, careerists and MBAs from destroying the Watson culture and legacy ... then 20yrs later IBM (w/one of the largest losses in the history of US companies) was being reorged into the 13 baby blues in preparation for breaking up the company

https://web.archive.org/web/20101120231857/http://www.time.com/time/magazine/article/0,9171,977353,00.html

https://content.time.com/time/subscriber/article/0,33009,977353-1,00.html

We had already left IBM but got a call from the bowels of Armonk asking if we could help with the breakup of the company. Before we got started, the board brought in the former president of AMEX, who (somewhat) reversed the breakup (although it wasn't long before the disk division was gone).

some more Learson detail

https://www.linkedin.com/pulse/john-boyd-ibm-wild-ducks-lynn-wheeler/

IBM downturn/downfall/breakup posts

https://www.garlic.com/~lynn/submisc.html#ibmdownfall

communication group trying to preserve dumb terminal paradigm posts

https://www.garlic.com/~lynn/subnetwork.html#terminal

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: 50 years ago, CP/M started the microcomputer revolution Date: 04 Aug, 2024 Blog: Facebook

re:

some personal computing history

https://arstechnica.com/features/2005/12/total-share/

https://arstechnica.com/features/2005/12/total-share/2/

https://arstechnica.com/features/2005/12/total-share/3/

https://arstechnica.com/features/2005/12/total-share/4/

https://arstechnica.com/features/2005/12/total-share/5

https://arstechnica.com/features/2005/12/total-share/6/

https://arstechnica.com/features/2005/12/total-share/7/

https://arstechnica.com/features/2005/12/total-share/8/

https://arstechnica.com/features/2005/12/total-share/9/

https://arstechnica.com/features/2005/12/total-share/10/

old archived post with a decade of vax sales (including microvax), sliced and diced by year, model, us/non-us

https://www.garlic.com/~lynn/2002f.html#0

IBM 4300s sold into the same mid-range market as VAX and in about the same numbers (excluding microvax) for small/single unit orders; the big difference was large corporations with orders for hundreds of vm/4300s for placing out in departmental areas ... sort of the leading edge of the coming distributed computing tsunami.

other trivia: In jan1979, I was con'ed into doing an (old CDC6600 fortran) benchmark on an early engineering 4341 for a national lab that was looking at getting 70 for a compute farm, sort of the leading edge of the coming cluster supercomputing tsunami. A small vm/4341 cluster was much less expensive than a 3033, with higher throughput, smaller footprint, less power&cooling; folklore is that POK felt so threatened that they got corporate to cut the Endicott allocation of a critical 4341 manufacturing component in half.

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: IBM Downfall and Make-over Date: 04 Aug, 2024 Blog: Facebook

re:

other background, AMEX and KKR were in competition for the (private equity) take-over of RJR and KKR wins. KKR then runs into trouble with RJR and hires away the AMEX president to help.

https://en.wikipedia.org/wiki/Barbarians_at_the_Gate:_The_Fall_of_RJR_Nabisco

Then the IBM board hires the former AMEX president to help with the IBM make-over ... who uses some of the same tactics used at RJR (ref gone 404, but lives on at wayback machine).

https://web.archive.org/web/20181019074906/http://www.ibmemployee.com/RetirementHeist.shtml

The former AMEX president then leaves IBM to head up another major private-equity company

http://www.motherjones.com/politics/2007/10/barbarians-capitol-private-equity-public-enemy/

"Lou Gerstner, former ceo of ibm, now heads the Carlyle Group, a Washington-based global private equity firm whose 2006 revenues of $87 billion were just a few billion below ibm's. Carlyle has boasted George H.W. Bush, George W. Bush, and former Secretary of State James Baker III on its employee roster."

... snip ...

... around the turn of the century, private-equity firms were buying up beltway bandits and gov. contractors, hiring prominent politicians to lobby congress to outsource gov. to their companies, side-stepping laws blocking companies from using money from gov. contracts to lobby congress. The bought companies were also having their funding cut to the bone, maximizing revenue for the private equity owners; one poster child was a company doing outsourced high security clearances that was found to be just doing the paperwork, but not actually doing background checks.

former amex president posts

https://www.garlic.com/~lynn/submisc.html#gerstner

private-equity posts

https://www.garlic.com/~lynn/submisc.html#private.equity

pension plan posts

https://www.garlic.com/~lynn/submisc.html#pensions

IBM downturn/downfall/breakup posts

https://www.garlic.com/~lynn/submisc.html#ibmdownfall

success of failure posts

https://www.garlic.com/~lynn/submisc.html#success.of.failure

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: IBM Downfall and Make-over Date: 04 Aug, 2024 Blog: Facebook

re:

The 23Jun1969 unbundling announcement started charging for (application) software (IBM made the case that kernel software should still be free), SE services, hardware maint.

SE training had included trainee type operation, as part of a large group at the customer site ... however, they couldn't figure out how *NOT* to charge for trainee SEs at the customer location ... thus was born HONE, branch-office online access to CP67 datacenters, practicing with guest operating systems in virtual machines. The science center had also ported APL\360 to CMS for CMS\APL (fixes for large demand page virtual memory workspaces and supporting system APIs for things like file I/O, enabling real-world applications). HONE then started offering CMS\APL-based sales&marketing support applications, which came to dominate all HONE activity (with guest operating system use dwindling away). One of my hobbies after joining IBM was enhanced operating systems for internal datacenters, and HONE was a long-time customer.

Early 70s, IBM had the Future System effort, totally different from 360/370 and going to completely replace 370; the dearth of new 370s during FS is credited with giving the clone 370 makers (including Amdahl) their market foothold (all during FS, I continued to work on 360/370, even periodically ridiculing what FS was doing, even drawing an analogy with a long running cult film down at Central Sq; it wasn't exactly a career enhancing activity). When FS implodes, there is a mad rush to get stuff back into the 370 product pipelines, including kicking off quick&dirty 3033&3081 efforts in parallel.

http://www.jfsowa.com/computer/memo125.htm

https://people.computing.clemson.edu/~mark/fs.html

FS (failing) significantly accelerated the rise of the bureaucrats, careerists, and MBAs .... From Ferguson & Morris, "Computer Wars: The Post-IBM World", Time Books

https://www.amazon.com/Computer-Wars-The-Post-IBM-World/dp/1587981394

... and perhaps most damaging, the old culture under Watson Snr and Jr of free and vigorous debate was replaced with *SYCOPHANCY* and *MAKE NO WAVES* under Opel and Akers. It's claimed that thereafter, IBM lived in the shadow of defeat ... But because of the heavy investment of face by the top management, F/S took years to kill, although its wrong headedness was obvious from the very outset. "For the first time, during F/S, outspoken criticism became politically dangerous," recalls a former top executive

... snip ...

repeat, CEO Learson had tried (and failed) to block the bureaucrats, careerists, MBAs from destroying the Watson culture & legacy.

https://www.linkedin.com/pulse/john-boyd-ibm-wild-ducks-lynn-wheeler/

20yrs later, IBM has one of the largest losses in the history of US companies

In the wake of the FS implosion, the decision was changed to start charging for kernel software, and some of my stuff (for internal datacenters) was selected to be the initial guinea pig, so I had to spend some amount of time with lawyers and business people on kernel software charging practices.

Application software practice was to forecast the customer market at high, medium and low prices (the forecasted customer revenue had to cover original development along with ongoing support, maintenance and new development). It was a great culture shock for much of IBM software development ... one solution was combining software packages, pairing enormously bloated projects with extremely efficient ones (in the "combined" forecast, the efficient projects underwrote the extremely bloated efforts).
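A toy sketch of the forecast rule described above, with made-up numbers (hypothetical $M) and hypothetical names: a package is viable at a price tier only if forecast revenue at that tier covers its total cost, and "combining" simply adds costs and per-tier revenues. It only illustrates how an efficient package can carry a bloated one; it is not how IBM actually did its forecasts.

```python
from dataclasses import dataclass
from typing import Dict, List

TIERS = ("high", "medium", "low")


@dataclass
class Forecast:
    name: str
    cost: float                # original development + ongoing support/maint/new dev
    revenue: Dict[str, float]  # forecast customer revenue at each price tier


def viable_tiers(f: Forecast) -> List[str]:
    """Price tiers at which forecast revenue covers the package's total cost."""
    return [t for t in TIERS if f.revenue[t] >= f.cost]


def combine(a: Forecast, b: Forecast) -> Forecast:
    """'Combined' forecast: costs and per-tier revenues simply add."""
    return Forecast(
        name=f"{a.name}+{b.name}",
        cost=a.cost + b.cost,
        revenue={t: a.revenue[t] + b.revenue[t] for t in TIERS},
    )


efficient = Forecast("efficient", 10, {"high": 40, "medium": 50, "low": 45})
bloated = Forecast("bloated", 60, {"high": 20, "medium": 25, "low": 30})

print(viable_tiers(efficient))                    # ['high', 'medium', 'low']
print(viable_tiers(bloated))                      # []
print(viable_tiers(combine(efficient, bloated)))  # ['medium', 'low']
```

On its own the bloated package fails at every price; bundled with the efficient one, the combined forecast clears at two tiers.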

Trivia: after FS implodes, the head of POK also managed to convince corporate to kill the VM370 product, shut down the development group and transfer all the people to POK for MVS/XA (Endicott eventually manages to save the VM370 product mission, but had to recreate a development group from scratch). I was also con'ed into helping with a 16-processor tightly-coupled, multiprocessor effort and we got the 3033 processor engineers working on it in their spare time (a lot more interesting than remapping 168 logic to 20% faster chips). Everybody thought it was great until somebody told the head of POK it could be decades before POK's favorite son operating system (MVS) had effective 16-way support (IBM documentation at the time said MVS 2-processor systems only had 1.2-1.5 times the throughput of a single processor). The head of POK then invited some of us to never visit POK again and told the 3033 processor engineers, heads down and no distractions (note: POK doesn't ship a 16-processor system until after the turn of the century).

Other trivia: Amdahl had won the battle to make ACS 360-compatible ... folklore then was that IBM executives killed ACS/360 because it would advance the state of the art too fast and IBM would lose control of the market. Amdahl then leaves IBM. The following has some ACS/360 features that don't show up until ES/9000 in the 90s:

https://people.computing.clemson.edu/~mark/acs_end.html

... note there were comments that if any other computer company had dumped so much money into such an enormous failed (Future System) project, it would never have survived (it took IBM another 20yrs before it was about to become extinct).

one of the last nails in the Future System coffin was analysis by the IBM Houston Science Center that if apps were moved from a 370/195 to an FS machine made out of the fastest available hardware technology, they would have the throughput of a 370/145 ... about a 30 times slowdown.

23Jun1969 IBM unbundling posts

https://www.garlic.com/~lynn/submain.html#unbundle

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

HONE posts

https://www.garlic.com/~lynn/subtopic.html#hone

Future System posts

https://www.garlic.com/~lynn/submain.html#futuresys

smp, tightly-coupled, multiprocessor posts

https://www.garlic.com/~lynn/subtopic.html#smp

dynamic adaptive resource management posts

https://www.garlic.com/~lynn/subtopic.html#fairshare

IBM downturn/downfall/breakup posts

https://www.garlic.com/~lynn/submisc.html#ibmdownfall

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: HONE, APL, IBM 5100 Date: 06 Aug, 2024 Blog: Facebook

The 23Jun1969 unbundling announcement starts charging for (application) software, SE services, maint. etc. SE training used to include being part of a large group at the customer site, but IBM couldn't figure out how not to charge for trainee SE time ... so was born "HONE", online branch office access to CP67 datacenters, practicing with guest operating systems in virtual machines. The IBM Cambridge Science Center also did a port of APL\360 to CP67/CMS for CMS\APL (lots of fixes for workspaces in large demand page virtual memory and APIs for system services like file I/O, enabling lots of real world apps) and HONE started offering CMS\APL-based sales&marketing support applications ... which came to dominate all HONE use.

HONE transitions from CP67/CMS to VM370/CMS (with VM370 APL\CMS done at the Palo Alto Science Center) and clone HONE installations start popping up all over the world (HONE was by far the largest use of APL). PASC also does the 370/145 APL microcode assist (claimed to run APL as fast as on a 370/168) and prototypes for what becomes the 5100

https://en.wikipedia.org/wiki/IBM_5110

https://en.wikipedia.org/wiki/IBM_PALM_processor

The US HONE datacenters are also consolidated in Palo Alto (across the back parking lot from PASC; trivia: when FACEBOOK 1st moves into Silicon Valley, it is into a new bldg built next door to the former US consolidated HONE datacenter). US HONE systems are enhanced with single-system image, loosely-coupled, shared DASD with load balancing and fall-over support (at least as large as any airline ACP/TPF installation) and then a 2nd processor is added to each system (16 processors aggregate) ... ACP/TPF didn't get two-processor support for another decade. PASC helps (HONE) with lots of APL\CMS tweaks.
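A toy sketch of the load-balancing and fall-over idea, assuming (hypothetically) that new logons go to the least-loaded live system and that users of a failed system simply log back on elsewhere; shared DASD is what makes that possible, since every system sees the same disks. The system names and the policy are illustrative only, not the actual HONE implementation.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class System:
    name: str
    up: bool = True
    users: List[str] = field(default_factory=list)


def least_loaded(complex_: List[System]) -> System:
    """Pick the live system with the fewest logged-on users (first one wins ties)."""
    live = [s for s in complex_ if s.up]
    if not live:
        raise RuntimeError("no system available in the complex")
    return min(live, key=lambda s: len(s.users))


def logon(complex_: List[System], user: str) -> str:
    target = least_loaded(complex_)
    target.users.append(user)
    return target.name


def fail(complex_: List[System], name: str) -> None:
    """Mark a system down and log its users back on to the survivors.

    Works only because (by assumption) every system sees the same shared DASD.
    """
    failed = next(s for s in complex_ if s.name == name)
    failed.up = False
    orphans, failed.users = failed.users, []
    for user in orphans:
        logon(complex_, user)


hone = [System("A"), System("B"), System("C")]
for i in range(6):
    logon(hone, f"user{i}")
fail(hone, "B")
print({s.name: len(s.users) for s in hone})  # {'A': 3, 'B': 0, 'C': 3}
```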

trivia: When I 1st joined IBM, one of my hobbies was enhanced production operating systems for internal datacenters, and HONE was a long-time customer. One of my 1st IBM overseas trips was for the HONE EMEA install in Paris (La Defense, "Tour Franklin?", a brand new bldg, still brown dirt, not yet landscaped)

23Jun1969 unbundling posts

https://www.garlic.com/~lynn/submain.html#unbundling

cambridge science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

(internal) CSC/VM posts

https://www.garlic.com/~lynn/submisc.html#cscvm

HONE (& APL) posts

https://www.garlic.com/~lynn/subtopic.html#hone

SMP, tightly-coupled, multiprocessor support posts

https://www.garlic.com/~lynn/subtopic.html#smp

posts mentioning APL, PASC, PALM, 5100/5110:

https://www.garlic.com/~lynn/2024b.html#15 IBM 5100

https://www.garlic.com/~lynn/2023e.html#53 VM370/CMS Shared Segments

https://www.garlic.com/~lynn/2015c.html#44 John Titor was right? IBM 5100

https://www.garlic.com/~lynn/2013o.html#82 One day, a computer will fit on a desk (1974) - YouTube

https://www.garlic.com/~lynn/2005.html#44 John Titor was right? IBM 5100

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: TYMSHARE, ADVENTURE/games Date: 06 Aug, 2024 Blog: Facebook

On one of the visits to TYMSHARE, they demo'ed a game somebody had found on the Stanford PDP10 and ported to VM370/CMS. I got a copy and made the executable available inside IBM ... and would send the source to anybody that got all the points ... within a short period of time, new versions with more points appeared, as well as a port to PLI.

We had an argument with corporate auditors who directed that all games had to be removed from the system. At the time most company 3270 logon screens included "For Business Purposes Only" ... our 3270 logon screens said "For Management Approved Uses" (and we claimed the games were human factors demo programs).

commercial virtual machine online service posts

https://www.garlic.com/~lynn/submain.html#online

some recent posts mentioning tymshare and adventure/games

https://www.garlic.com/~lynn/2024c.html#120 Disconnect Between Coursework And Real-World Computers

https://www.garlic.com/~lynn/2024c.html#43 TYMSHARE, VMSHARE, ADVENTURE

https://www.garlic.com/~lynn/2024c.html#25 Tymshare & Ann Hardy

https://www.garlic.com/~lynn/2023f.html#116 Computer Games

https://www.garlic.com/~lynn/2023f.html#60 The Many Ways To Play Colossal Cave Adventure After Nearly Half A Century

https://www.garlic.com/~lynn/2023f.html#7 Video terminals

https://www.garlic.com/~lynn/2023e.html#9 Tymshare

https://www.garlic.com/~lynn/2023d.html#115 ADVENTURE

https://www.garlic.com/~lynn/2023c.html#14 Adventure

https://www.garlic.com/~lynn/2023b.html#86 Online systems fostering online communication

https://www.garlic.com/~lynn/2023.html#37 Adventure Game

https://www.garlic.com/~lynn/2022e.html#1 IBM Games

https://www.garlic.com/~lynn/2022c.html#28 IBM Cambridge Science Center

https://www.garlic.com/~lynn/2022b.html#107 15 Examples of How Different Life Was Before The Internet

https://www.garlic.com/~lynn/2022b.html#28 Early Online

https://www.garlic.com/~lynn/2022.html#123 SHARE LSRAD Report

https://www.garlic.com/~lynn/2022.html#57 Computer Security

https://www.garlic.com/~lynn/2021k.html#102 IBM CSO

https://www.garlic.com/~lynn/2021h.html#68 TYMSHARE, VMSHARE, and Adventure

https://www.garlic.com/~lynn/2021e.html#8 Online Computer Conferencing

https://www.garlic.com/~lynn/2021b.html#84 1977: Zork

https://www.garlic.com/~lynn/2021.html#85 IBM Auditors and Games

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: 360/50 and CP-40 Date: 06 Aug, 2024 Blog: Facebook

IBM Cambridge Science Center had a similar problem: it wanted a 360/50 to modify for virtual memory, but all the extra 360/50s were going to FAA ATC ... and so they had to settle for a 360/40 ... they implemented virtual memory with an associative array that held the process-ID and virtual page number for each real page (compared to the Atlas associative array, which just had the virtual page number for each real page ... effectively just a single large virtual address space).
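The difference between the two associative-array schemes can be shown with a toy model. This is a hedged sketch, not the CP-40 or Atlas hardware: the `AssociativeArray` class, its methods, and the frame counts are hypothetical. Tagging each real page with (process-ID, virtual page) lets several address spaces keep pages resident at once; tagging with the virtual page alone behaves like one large address space.

```python
from typing import Hashable, Optional


class AssociativeArray:
    """Toy model of an associative array with one entry per real page frame.

    Hypothetical illustration only (not the CP-40 or Atlas hardware).
    tag_with_pid=True  -> each entry holds (process-ID, virtual page),
                          roughly the CP-40 scheme described above.
    tag_with_pid=False -> each entry holds only the virtual page number,
                          roughly the Atlas scheme (one large address space).
    """

    def __init__(self, real_frames: int, tag_with_pid: bool) -> None:
        self.tag_with_pid = tag_with_pid
        # entries[frame] is the tag currently mapped to that real frame.
        self.entries: list[Optional[Hashable]] = [None] * real_frames

    def _tag(self, pid: int, vpage: int) -> Hashable:
        # The Atlas-style tag ignores the process ID entirely.
        return (pid, vpage) if self.tag_with_pid else vpage

    def load(self, frame: int, pid: int, vpage: int) -> None:
        """Record that a real frame now holds this virtual page (page-in)."""
        self.entries[frame] = self._tag(pid, vpage)

    def translate(self, pid: int, vpage: int) -> Optional[int]:
        """Return the real frame for (pid, vpage), or None for a page fault.

        Real hardware compares every entry at once; a scan models that here.
        """
        tag = self._tag(pid, vpage)
        for frame, entry in enumerate(self.entries):
            if entry == tag:
                return frame
        return None


# Two processes using the same virtual page number stay distinct when
# entries are tagged with the process ID...
cp40 = AssociativeArray(real_frames=4, tag_with_pid=True)
cp40.load(0, pid=1, vpage=7)
cp40.load(1, pid=2, vpage=7)
print(cp40.translate(1, 7), cp40.translate(2, 7), cp40.translate(3, 7))  # 0 1 None

# ...but collide when only the virtual page number is kept.
atlas = AssociativeArray(real_frames=4, tag_with_pid=False)
atlas.load(0, pid=1, vpage=7)
print(atlas.translate(2, 7))  # 0: pid is ignored
```

With the process-ID in the tag, switching between virtual machines does not require purging the array, which is the practical payoff of the CP-40 design choice.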

the official IBM operating system for the (standard virtual memory) 360/67 was TSS/360, whose development peaked at around 1200 people at a time when the science center had 12 people (including the secretary) morphing CP/40 into CP/67.

Melinda's history website

https://www.leeandmelindavarian.com/Melinda#VMHist

trivia: FE had a bootstrap diagnostic process that started with "scoping" components. With 3081 TCMs ... it was no longer possible to scope ... so a system was written for the UC processor (used by the communication group for 37xx and other boxes) that implemented a "service processor" with probes into the TCMs for diagnostic purposes (a scope could be used to diagnose the "service processor" and bring it up, and it was then used to diagnose the 3081).

Moving to the 3090, they decided on a 4331 running a highly modified version of VM370 Release 6 with all the screens implemented in CMS IOS3270. This was then upgraded to a pair of 4361s for the service processor. You can sort of see this in the 3092 requiring a pair of 3370s FBA (one for each 4361) ... even for MVS systems that never had FBA support.

https://web.archive.org/web/20230719145910/https://www.ibm.com/ibm/history/exhibits/mainframe/mainframe_PP3090.html

trivia: Early in rex (before it was renamed rexx and released to customers), I wanted to show it wasn't just another pretty scripting language. I decided to spend half time over three months rewriting a large assembler (large dump reader and diagnostic) application with ten times the function and running ten times faster (some sleight of hand to make interpreted rex run faster than asm). I finished early, so I wrote a library of automated routines that searched for common failure signatures. For some reason it was never released to customers (even though it was in use by nearly every internal datacenter and PSR) ... I did eventually get permission to give user group presentations on how I did the implementation ... and eventually similar implementations started to appear. Then the 3092 group asked if they could ship it with the 3090 service processor; some old archived email:

https://www.garlic.com/~lynn/2010e.html#email861031

https://www.garlic.com/~lynn/2010e.html#email861223

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

dumprx posts

https://www.garlic.com/~lynn/submain.html#dumprx

recent posts mentioning CP40

https://www.garlic.com/~lynn/2024e.html#14 50 years ago, CP/M started the microcomputer revolution

https://www.garlic.com/~lynn/2024d.html#111 GNOME bans Manjaro Core Team Member for uttering "Lunduke"

https://www.garlic.com/~lynn/2024d.html#103 IBM 360/40, 360/50, 360/65, 360/67, 360/75

https://www.garlic.com/~lynn/2024d.html#102 Chipsandcheese article on the CDC6600

https://www.garlic.com/~lynn/2024d.html#25 IBM 23June1969 Unbundling Announcement

https://www.garlic.com/~lynn/2024d.html#0 time-sharing history, Privilege Levels Below User

https://www.garlic.com/~lynn/2024c.html#88 Virtual Machines

https://www.garlic.com/~lynn/2024c.html#65 More CPS

https://www.garlic.com/~lynn/2024c.html#18 CP40/CMS

https://www.garlic.com/~lynn/2024b.html#5 Vintage REXX

https://www.garlic.com/~lynn/2024.html#28 IBM Disks and Drums

https://www.garlic.com/~lynn/2023g.html#82 Cloud and Megadatacenter

https://www.garlic.com/~lynn/2023g.html#35 Vintage TSS/360

https://www.garlic.com/~lynn/2023g.html#1 Vintage TSS/360

https://www.garlic.com/~lynn/2023f.html#108 CSC, HONE, 23Jun69 Unbundling, Future System

https://www.garlic.com/~lynn/2023d.html#87 545tech sq, 3rd, 4th, & 5th flrs

https://www.garlic.com/~lynn/2023d.html#73 Some Virtual Machine History

https://www.garlic.com/~lynn/2023c.html#105 IBM 360/40 and CP/40

https://www.garlic.com/~lynn/2022g.html#2 VM/370

https://www.garlic.com/~lynn/2022f.html#113 360/67 Virtual Memory

https://www.garlic.com/~lynn/2022d.html#58 IBM 360/50 Simulation From Its Microcode

https://www.garlic.com/~lynn/2022.html#71 165/168/3033 & 370 virtual memory

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Disk Capacity and Channel Performance Date: 07 Aug, 2024 Blog: Facebook

The original 3380 had 20 track spacings between each data track. That was then cut in half, giving twice the tracks (& cylinders) for double the capacity, then the spacing was cut again for triple the capacity.

About then the father of 801/risc gets me to try and help him with a disk "wide-head" ... transferring data in parallel with 16 closely placed data tracks ... following servo tracks on each side (18 tracks total). One of the problems was that mainframe channels were still 3mbytes/sec and this required 50mbytes/sec. Then in 1988, the branch office asks me to help LLNL (national lab) get some serial stuff they were working with standardized, which quickly becomes the fibre-channel standard ("FCS", including some stuff I did in 1980 ... initially 1gbit/sec full-duplex, 200mbytes/sec aggregate). Later POK announces some serial stuff that they had been working on since at least 1980, with ES/9000 as ESCON (when it was already obsolete, around 17mbytes/sec).
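The bandwidth figures above can be checked with some back-of-envelope arithmetic. This is a hedged sketch: the 3 MB/s per-track rate (the 3380's nominal transfer rate) and 8b/10b line coding on a nominal 1 Gbit/s FCS link are assumptions not stated in the post.

```python
# Back-of-envelope arithmetic for the wide-head and FCS figures.
# Assumptions (not from the original post): a per-track data rate of
# 3 MB/s (the 3380's nominal transfer rate) and 8b/10b line coding for
# 1 Gbit/s Fibre Channel (10 line bits carry one 8-bit byte).

DATA_TRACKS = 16            # tracks read in parallel by the "wide-head"
SERVO_TRACKS = 2            # one servo track on each side
PER_TRACK_MB_S = 3.0        # assumed per-track rate, MB/s
CHANNEL_MB_S = 3.0          # mainframe channel rate at the time, MB/s

head_tracks = DATA_TRACKS + SERVO_TRACKS
wide_head_mb_s = DATA_TRACKS * PER_TRACK_MB_S
channels_needed = wide_head_mb_s / CHANNEL_MB_S

FCS_LINE_GBIT_S = 1.0       # nominal 1 Gbit/s link
BITS_PER_BYTE_ON_WIRE = 10  # 8b/10b coding
fcs_one_way_mb_s = FCS_LINE_GBIT_S * 1000 / BITS_PER_BYTE_ON_WIRE
fcs_aggregate_mb_s = 2 * fcs_one_way_mb_s  # full-duplex: both directions at once

print(f"head spans {head_tracks} tracks")                       # 18
print(f"wide-head transfer ~{wide_head_mb_s:.0f} MB/s")         # 48
print(f"~{channels_needed:.0f} channels' worth of bandwidth")   # 16
print(f"FCS ~{fcs_one_way_mb_s:.0f} MB/s each way, "
      f"~{fcs_aggregate_mb_s:.0f} MB/s aggregate")              # 100, 200
```

Under these assumptions, 16 tracks at 3 MB/s lands near the 50mbytes/sec cited, roughly sixteen times what a single channel could carry.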

Then some POK engineers become involved with FCS and define a protocol that radically reduces the throughput, eventually announced as FICON. The latest public benchmark I've found is the z196 "Peak I/O" that gets 2M IOPS over 104 FICON. About the same time an FCS was announced for E5-2600 server blades claiming over a million IOPS (two such FCS having higher throughput than 104 FICON). Note IBM documentation also recommended that SAPs (system assist processors that handle the actual I/O) be kept to 70% CPU ... or around 1.5M IOPS. Somewhat complicating matters is that CKD DASD haven't been made for decades, all being simulated using industry standard fixed-block disks.
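The IOPS comparison above is simple arithmetic on the quoted numbers. This sketch uses only the figures in the text; treating IOPS as scaling linearly with SAP busy is an assumption, and a straight 70% of the 2M peak comes to 1.4M, in the same range as the "around 1.5M" cited.

```python
# Arithmetic on the z196 "Peak I/O" figures quoted above. The 2M IOPS,
# 104 FICON links, 70% SAP guideline, and "over a million IOPS" per FCS
# come from the text; everything else is plain division/multiplication.
PEAK_IOPS = 2_000_000
FICON_LINKS = 104
SAP_MAX_BUSY_PCT = 70             # recommended ceiling on SAP utilization
FCS_IOPS_LOWER_BOUND = 1_000_000  # "over a million IOPS" for one FCS

per_ficon = PEAK_IOPS / FICON_LINKS
# Assumes IOPS scale linearly with SAP busy, which is a simplification.
sap_capped = PEAK_IOPS * SAP_MAX_BUSY_PCT // 100
two_fcs_at_least = 2 * FCS_IOPS_LOWER_BOUND

print(f"~{per_ficon:,.0f} IOPS per FICON link")        # ~19,231
print(f"~{sap_capped:,} IOPS with SAPs held to 70%")   # ~1,400,000
print(f">{two_fcs_at_least:,} IOPS from two FCS")      # >2,000,000
```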

re:

https://www.ibm.com/support/pages/system/files/inline-files/IBM%20z16%20FEx32S%20Performance_3.pdf

aka zHPF & TCW is closer to native FCS operation starting in 1988 (and what I had done in 1980) ... trivia: the hardware vendor tried to get IBM to release my support in 1980 ... but the group in POK playing with fiber were afraid it would make it harder to get their stuff released (eventually a decade later as ESCON) ... and got it vetoed. Old archived (bit.listserv.ibm-main) post from 2012 discussing how zHPF&TCW is closer to the original 1988 FCS specification (and what I had done in 1980). It also mentions throughput being throttled by SAP processing: https://www.garlic.com/~lynn/2012m.html#4

while working with FCS, I was also doing the IBM HA/CMP product ... Nick Donofrio had approved the HA/6000 project, originally for NYTimes to move their newspaper system (ATEX) off VAXCluster to RS/6000. I rename it HA/CMP when I start doing technical/scientific cluster scale-up with national labs and commercial cluster scale-up with RDBMS vendors (Oracle, Sybase, Informix, Ingres) that had VAXcluster support in the same source base with UNIX (I do a distributed lock manager with VAXCluster API semantics to ease the transition).
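The distributed lock manager mentioned above followed VAXCluster API semantics, which are built around six lock modes. As a minimal sketch (not the HA/CMP code), those modes and the standard VMS compatibility matrix can be modeled as below; the `Resource` class, its `request`/`release` methods, and the no-wait-queue behavior are hypothetical simplifications.

```python
from enum import Enum


class LockMode(Enum):
    NL = "null"
    CR = "concurrent read"
    CW = "concurrent write"
    PR = "protected read"
    PW = "protected write"
    EX = "exclusive"


NL, CR, CW, PR, PW, EX = (LockMode.NL, LockMode.CR, LockMode.CW,
                          LockMode.PR, LockMode.PW, LockMode.EX)

# For each mode, the modes another holder may already have without conflict
# (the classic VMS lock-manager compatibility matrix; it is symmetric).
COMPATIBLE = {
    NL: {NL, CR, CW, PR, PW, EX},
    CR: {NL, CR, CW, PR, PW},
    CW: {NL, CR, CW},
    PR: {NL, CR, PR},
    PW: {NL, CR},
    EX: {NL},
}


class Resource:
    """Hypothetical named resource with no wait queue: conflicting requests fail."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.granted: dict[str, LockMode] = {}

    def request(self, owner: str, mode: LockMode) -> bool:
        # Grant (or convert) only if compatible with every *other* holder.
        for other, held in self.granted.items():
            if other != owner and held not in COMPATIBLE[mode]:
                return False
        self.granted[owner] = mode
        return True

    def release(self, owner: str) -> None:
        self.granted.pop(owner, None)


page = Resource("db-page-42")
print(page.request("node1", PR), page.request("node2", PR))  # True True
print(page.request("node3", EX))                             # False
page.release("node1")
page.release("node2")
print(page.request("node3", EX))                             # True
```

A real DLM also queues blocked requests, delivers blocking notifications, and distributes lock state across cluster nodes; this sketch keeps only the mode-compatibility check that RDBMS code ported from VAXCluster would rely on.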

We were also using Hursley's 9333 in some configurations and I was hoping to make it interoperable with FCS. In early jan1992, in a meeting with the Oracle CEO, AWD/Hester tells Ellison that we would have 16-system clusters by mid92 and 128-system clusters by ye92. Then late jan1992, cluster scale-up was transferred for IBM Supercomputer (technical/scientific *ONLY*) and we were told we couldn't work with anything having more than four processors. We leave IBM a few months later. Later we find that 9333 evolves into SSA instead:

https://en.wikipedia.org/wiki/Serial_Storage_Architecture

channel extender posts

https://www.garlic.com/~lynn/submisc.html#channel.extender

FCS & FICON posts

https://www.garlic.com/~lynn/submisc.html#ficon

HA/CMP posts

https://www.garlic.com/~lynn/subtopic.html#hacmp

posts specifically mentioning 9333, SSA, FCS, FICON

https://www.garlic.com/~lynn/2024c.html#60 IBM "Winchester" Disk

https://www.garlic.com/~lynn/2024.html#71 IBM AIX

https://www.garlic.com/~lynn/2024.html#35 RS/6000 Mainframe

https://www.garlic.com/~lynn/2023f.html#70 Vintage RS/6000 Mainframe

https://www.garlic.com/~lynn/2023f.html#58 Vintage IBM 5100

https://www.garlic.com/~lynn/2023e.html#78 microcomputers, minicomputers, mainframes, supercomputers

https://www.garlic.com/~lynn/2023d.html#104 DASD, Channel and I/O long winded trivia

https://www.garlic.com/~lynn/2023d.html#97 The IBM mainframe: How it runs and why it survives

https://www.garlic.com/~lynn/2022e.html#47 Best dumb terminal for serial connections

https://www.garlic.com/~lynn/2022b.html#15 Channel I/O

https://www.garlic.com/~lynn/2021k.html#127 SSA

https://www.garlic.com/~lynn/2021g.html#1 IBM ESCON Experience

https://www.garlic.com/~lynn/2019b.html#60 S/360

https://www.garlic.com/~lynn/2019b.html#57 HA/CMP, HA/6000, Harrier/9333, STK Iceberg & Adstar Seastar

https://www.garlic.com/~lynn/2016h.html#95 Retrieving data from old hard drives?

https://www.garlic.com/~lynn/2013m.html#99 SHARE Blog: News Flash: The Mainframe (Still) Isn't Dead

https://www.garlic.com/~lynn/2013m.html#96 SHARE Blog: News Flash: The Mainframe (Still) Isn't Dead

https://www.garlic.com/~lynn/2013i.html#50 The Subroutine Call

https://www.garlic.com/~lynn/2013g.html#14 Tech Time Warp of the Week: The 50-Pound Portable PC, 1977

https://www.garlic.com/~lynn/2012m.html#2 Blades versus z was Re: Turn Off Another Light - Univ. of Tennessee

https://www.garlic.com/~lynn/2012k.html#77 ESCON

https://www.garlic.com/~lynn/2012k.html#69 ESCON

https://www.garlic.com/~lynn/2012j.html#13 Can anybody give me a clear idea about Cloud Computing in MAINFRAME ?

https://www.garlic.com/~lynn/2011p.html#40 Has anyone successfully migrated off mainframes?

https://www.garlic.com/~lynn/2011p.html#39 Has anyone successfully migrated off mainframes?

https://www.garlic.com/~lynn/2011e.html#31 "Social Security Confronts IT Obsolescence"

https://www.garlic.com/~lynn/2010i.html#61 IBM to announce new MF's this year

https://www.garlic.com/~lynn/2010h.html#63 25 reasons why hardware is still hot at IBM

https://www.garlic.com/~lynn/2010f.html#13 What was the historical price of a P/390?