From: Lynn Wheeler <lynn@garlic.com> Subject: DataTree, UniTree, Mesa Archival Date: 06 Oct, 2023 Blog: Facebook re:

Learson trying to block the bureaucrats, careerists, and MBAs from destroying the Watson legacy (and failed; two decades later IBM has one of the largest losses in the history of US companies and was being re-organized into the 13 "baby blues" in preparation for breaking up the company)

https://www.linkedin.com/pulse/john-boyd-ibm-wild-ducks-lynn-wheeler/

I had been introduced to John Boyd in the early 80s and would sponsor his briefings ... when he passed, the USAF had pretty much disowned him and it was the Marines at Arlington. Somewhat surprised that the USAF then dedicated a hall to him at Nellis (USAF Weapons School) ... with one of his quotes:

There are two career paths in front of you, and you have to choose which path you will follow. One path leads to promotions, titles, and positions of distinction.... The other path leads to doing things that are truly significant for the Air Force, but the rewards will quite often be a kick in the stomach because you may have to cross swords with the party line on occasion. You can't go down both paths, you have to choose. Do you want to be a man of distinction or do you want to do things that really influence the shape of the Air Force? To be or to do, that is the question.

... snip ...

Boyd posts and WEB URLs

https://www.garlic.com/~lynn/subboyd.html

ibm downfall, breakup, controlling market posts

https://www.garlic.com/~lynn/submisc.html#ibmdownfall

some past posts:

https://www.garlic.com/~lynn/2022e.html#103 John Boyd and IBM Wild Ducks

https://www.garlic.com/~lynn/2022e.html#104 John Boyd and IBM Wild Ducks

https://www.garlic.com/~lynn/2022f.html#2 John Boyd and IBM Wild Ducks

https://www.garlic.com/~lynn/2022f.html#32 John Boyd and IBM Wild Ducks

https://www.garlic.com/~lynn/2022f.html#60 John Boyd and IBM Wild Ducks

https://www.garlic.com/~lynn/2022f.html#67 John Boyd and IBM Wild Ducks

https://www.garlic.com/~lynn/2022g.html#24 John Boyd and IBM Wild Ducks

https://www.garlic.com/~lynn/2023e.html#108 John Boyd and IBM Wild Ducks

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: How U.S. Hospitals Undercut Public Health Date: 07 Oct, 2023 Blog: Facebook

How U.S. Hospitals Undercut Public Health

... aggravated by private equity buying up hospitals, health care systems, medical practices, retirement facilities, etc ... and skimming off as much as possible

Private equity changes workforce stability in physician-owned medical practices

https://www.eurekalert.org/news-releases/975889

When Private Equity Takes Over a Nursing Home. After an investment firm bought St. Joseph's Home for the Aged, in Richmond, Virginia, the company reduced staff, removed amenities, and set the stage for a deadly outbreak of COVID-19.

https://www.newyorker.com/news/dispatch/when-private-equity-takes-over-a-nursing-home

Parasitic Private Equity is Consuming U.S. Health Care from the Inside Out

https://www.juancole.com/2022/11/parasitic-private-consuming.html

Patients for Profit: How Private Equity Hijacked Health Care. ER Doctors Call Private Equity Staffing Practices Illegal and Seek to Ban Them

https://khn.org/news/article/er-doctors-call-private-equity-staffing-practices-illegal-and-seek-to-ban-them/

Another Private Equity-Style Hospital Raid Kills a Busy Urban Hospital

https://prospect.org/health/another-private-equity%E2%80%93style-hospital-raid-kills-a-busy-urban-hospital/

How Private Equity Looted America. Inside the industry that has ransacked the US economy--and upended the lives of working people everywhere.

https://www.motherjones.com/politics/2022/05/private-equity-apollo-blackstone-kkr-carlyle-carried-interest-loophole/

Elizabeth Warren's Long, Thankless Fight Against Our Private Equity Overlords. She sponsored a bill to fight what she calls "legalized looting." Too bad her colleagues don't seem all that interested.

https://www.motherjones.com/politics/2022/05/elizabeth-warren-private-equity-stop-wall-street-looting/

Your Money and Your Life: Private Equity Blasts Ethical Boundaries of American Medicine

https://www.nakedcapitalism.com/2022/05/your-money-and-your-life-private-equity-blasts-ethical-boundaries-of-american-medicine.html

Ethically Challenged: Private Equity Storms US Health Care

https://www.amazon.com/Ethically-Challenged-Private-Equity-Storms-ebook-dp-B099NXGNB1/dp/B099NXGNB1/

AHIP advocates for transparency for healthcare private equity firms. Raising prices has been a common strategy after a private equity acquisition, and patient outcomes have suffered as well, the group said.

https://www.healthcarefinancenews.com/news/ahip-advocates-transparency-healthcare-private-equity-firms

Private equity's newest target: Your pension fund

https://www.fnlondon.com/articles/private-equitys-newest-target-your-pension-fund-20220517

private equity posts

https://www.garlic.com/~lynn/submisc.html#private.equity

capitalism posts

https://www.garlic.com/~lynn/submisc.html#capitalism

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Bounty offered for secret NSA seeds behind NIST elliptic curves algo Date: 07 Oct, 2023 Blog: Facebook

Bounty offered for secret NSA seeds behind NIST elliptic curves algo

trivia: right after the turn of the century, I had gotten a secure chip with silicon EC/DSA built in and was hoping to get EAL5+ (or even EAL6+) certification ... but then the NIST ECC certification criteria were pulled and I had to settle for EAL4+.

Some pilot chips with software programming were demoed in a booth at the December Miami BAI retail banking conference (old archived post)

https://www.garlic.com/~lynn/99.html#224

TD to Information Assurance DDI had a panel session in the trusted computer track at Intel IDF and asked me to give a talk on the chip (gone 404, but lives on at wayback machine).

https://web.archive.org/web/20011109072807/http://www.intel94.com/idf/spr2001/sessiondescription.asp?id=stp%2bs13

AADS Chip Strawman

https://www.garlic.com/~lynn/x959.html#aadsstraw

X9.59, Identity, Authentication, and Privacy posts

https://www.garlic.com/~lynn/subpubkey.html#privacy

trusted computing posts

https://www.garlic.com/~lynn/submisc.html#trusted.computing

some recent posts mentioning secure chip talk at Intel IDF

https://www.garlic.com/~lynn/2022f.html#112 IBM Downfall

https://www.garlic.com/~lynn/2022f.html#68 Security Chips and Chip Fabs

https://www.garlic.com/~lynn/2022e.html#105 FedEx to Stop Using Mainframes, Close All Data Centers By 2024

https://www.garlic.com/~lynn/2022e.html#98 Enhanced Production Operating Systems II

https://www.garlic.com/~lynn/2022e.html#84 Enhanced Production Operating Systems

https://www.garlic.com/~lynn/2022b.html#108 Attackers exploit fundamental flaw in the web's security to steal $2 million in cryptocurrency

https://www.garlic.com/~lynn/2022b.html#103 AADS Chip Strawman

https://www.garlic.com/~lynn/2021k.html#133 IBM Clone Controllers

https://www.garlic.com/~lynn/2021k.html#17 Data Breach

https://www.garlic.com/~lynn/2021j.html#62 IBM ROLM

https://www.garlic.com/~lynn/2021j.html#41 IBM Confidential

https://www.garlic.com/~lynn/2021j.html#21 IBM Lost Opportunities

https://www.garlic.com/~lynn/2021h.html#97 What Is a TPM, and Why Do I Need One for Windows 11?

https://www.garlic.com/~lynn/2021h.html#74 "Safe" Internet Payment Products

https://www.garlic.com/~lynn/2021g.html#75 Electronic Signature

https://www.garlic.com/~lynn/2021g.html#66 The Case Against SQL

https://www.garlic.com/~lynn/2021d.html#87 Bizarre Career Events

https://www.garlic.com/~lynn/2021d.html#20 The Rise of the Internet

https://www.garlic.com/~lynn/2021b.html#21 IBM Recruiting

some archived posts mentioning EC/DSA and/or elliptic curve

https://www.garlic.com/~lynn/2021j.html#13 cryptologic museum

https://www.garlic.com/~lynn/2017d.html#24 elliptic curve pkinit?

https://www.garlic.com/~lynn/2015g.html#23 [Poll] Computing favorities

https://www.garlic.com/~lynn/2013o.html#50 Secret contract tied NSA and security industry pioneer

https://www.garlic.com/~lynn/2013l.html#55 "NSA foils much internet encryption"

https://www.garlic.com/~lynn/2012b.html#71 Password shortcomings

https://www.garlic.com/~lynn/2012b.html#36 RFC6507 Ellipitc Curve-Based Certificate-Less Signatures

https://www.garlic.com/~lynn/2011o.html#65 Hamming Code

https://www.garlic.com/~lynn/2010m.html#57 Has there been a change in US banking regulations recently

https://www.garlic.com/~lynn/2009r.html#36 SSL certificates and keys

https://www.garlic.com/~lynn/2009q.html#40 Crypto dongles to secure online transactions

https://www.garlic.com/~lynn/2008q.html#64 EAL5 Certification for z10 Enterprise Class Server

https://www.garlic.com/~lynn/2008q.html#63 EAL5 Certification for z10 Enterprise Class Server

https://www.garlic.com/~lynn/2008j.html#43 What is "timesharing" (Re: OS X Finder windows vs terminal window weirdness)

https://www.garlic.com/~lynn/2007q.html#72 Value of SSL client certificates?

https://www.garlic.com/~lynn/2007q.html#34 what does xp do when system is copying

https://www.garlic.com/~lynn/2007q.html#32 what does xp do when system is copying

https://www.garlic.com/~lynn/2007b.html#65 newbie need help (ECC and wireless)

https://www.garlic.com/~lynn/2007b.html#30 How many 36-bit Unix ports in the old days?

https://www.garlic.com/~lynn/2005u.html#27 RSA SecurID product

https://www.garlic.com/~lynn/2005l.html#34 More Phishing scams, still no SSL being used

https://www.garlic.com/~lynn/2005e.html#22 PKI: the end

https://www.garlic.com/~lynn/2004b.html#22 Hardware issues [Re: Floating point required exponent range?]

https://www.garlic.com/~lynn/2003n.html#32 NSA chooses ECC

https://www.garlic.com/~lynn/2003n.html#25 Are there any authentication algorithms with runtime changeable

https://www.garlic.com/~lynn/2003n.html#23 Are there any authentication algorithms with runtime changeable key length?

https://www.garlic.com/~lynn/2003l.html#61 Can you use ECC to produce digital signatures? It doesn't see

https://www.garlic.com/~lynn/2002n.html#20 Help! Good protocol for national ID card?

https://www.garlic.com/~lynn/2002j.html#21 basic smart card PKI development questions

https://www.garlic.com/~lynn/2002i.html#78 Does Diffie-Hellman schema belong to Public Key schema family?

https://www.garlic.com/~lynn/2002g.html#38 Why is DSA so complicated?

https://www.garlic.com/~lynn/2002c.html#31 You think? TOM

https://www.garlic.com/~lynn/2002c.html#10 Opinion on smartcard security requested

https://www.garlic.com/~lynn/aadsm27.htm#37 The bank fraud blame game

https://www.garlic.com/~lynn/aadsm24.htm#51 Crypto to defend chip IP: snake oil or good idea?

https://www.garlic.com/~lynn/aadsm24.htm#29 DDA cards may address the UK Chip&Pin woes

https://www.garlic.com/~lynn/aadsm24.htm#28 DDA cards may address the UK Chip&Pin woes

https://www.garlic.com/~lynn/aadsm24.htm#23 Use of TPM chip for RNG?

https://www.garlic.com/~lynn/aadsm23.htm#42 Elliptic Curve Cryptography (ECC) Cipher Suites for Transport Layer Security (TLS)

https://www.garlic.com/~lynn/aadsm15.htm#30 NSA Buys License for Certicom's Encryption Technolog

https://www.garlic.com/~lynn/aadsm9.htm#3dvulner5 3D Secure Vulnerabilities?

https://www.garlic.com/~lynn/aadsm5.htm#x959 X9.59 Electronic Payment Standard

https://www.garlic.com/~lynn/aepay10.htm#46 x9.73 Cryptographic Message Syntax

https://www.garlic.com/~lynn/aepay6.htm#docstore ANSI X9 Electronic Standards "store"

https://www.garlic.com/~lynn/ansiepay.htm#anxclean Misc 8583 mapping cleanup

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: CP/67, VM/370, VM/SP, VM/XA Date: 07 Oct, 2023 Blog: Facebook re:

(IBM) Mainframe Hall of Fame (full list)

https://www.enterprisesystemsmedia.com/mainframehalloffame

old addition, 4 new members (gone 404 but lives on at wayback machine)

https://web.archive.org/web/20110727105535/http://www.mainframezone.com/blog/mainframe-hall-of-fame-four-new-members-added/

Knights of VM

http://mvmua.org/knights.html

Old 2005 mainframe article (some details slightly garbled; gone 404 but lives on at wayback machine)

https://web.archive.org/web/20200103152517/http://archive.ibmsystemsmag.com/mainframe/stoprun/stop-run/making-history/

more IBM (not all mainframe) ... Learson (mainframe hall of fame, "Father of the System/360") tried (& failed) to block the bureaucrats, careerists, and MBAs from destroying the Watson legacy.

https://www.linkedin.com/pulse/john-boyd-ibm-wild-ducks-lynn-wheeler/

https://www.linkedin.com/pulse/inventing-internet-lynn-wheeler/

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

ibm downfall, breakup, controlling market posts

https://www.garlic.com/~lynn/submisc.html#ibmdownfall

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: GML/SGML separating content and format Date: 07 Oct, 2023 Blog: Facebook

note some of the MIT CTSS/7094 people had gone to the 5th flr to do multics; others went to the IBM science center on the 4th and did the internal network (larger than arpanet/internet from just about the beginning until sometime mid/late 80s; technology also used for the corporate sponsored univ BITNET) and virtual machines (initially cp40/cms on a 360/40 with hardware added for virtual memory, morphing into cp67/cms when the 360/67, standard with virtual memory, became available). CTSS RUNOFF was redone for CMS as SCRIPT. GML was invented at the science center in 1969 and GML tag processing was added to SCRIPT (GML chosen because of the 1st letters of the inventors' last names). I've regularly cited Goldfarb's SGML website ... but checking just now, it's not responding ... so here is the most recent page from the wayback machine

SGML history

https://web.archive.org/web/20230402213042/http://www.sgmlsource.com/history/index.htm

Welcome to the SGML History Niche. It contains some reliable papers on the early history of SGML, and its precursor, IBM's Generalized Markup Language, GML.

... snip ...

papers about early GML

https://web.archive.org/web/20230402212558/http://www.sgmlsource.com/history/jasis.htm

Actually, the law office application was the original motivation for the project, something I was allowed to do part-time because of my knowledge of the user requirements. My real job was to encourage the staffs of the various scientific centers to make use of the CP-67-based Wide Area Network that was centered in Cambridge.

... snip ...

... see "Conclusions" section in above webpage. ... then a decade later, GML morphs into SGML, and after another decade, SGML morphs into HTML at CERN. Trivia: 1st webserver in the US is on (Stanford) SLAC's VM370 system (CP67 having morphed into VM370)

https://ahro.slac.stanford.edu/wwwslac-exhibit

https://ahro.slac.stanford.edu/wwwslac-exhibit/early-web-chronology-and-documents-1991-1994

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

GML, SGML, HTML, XML, ... etc, posts

https://www.garlic.com/~lynn/submain.html#sgml

internal network posts

https://www.garlic.com/~lynn/subnetwork.html#internalnet

BITNET posts

https://www.garlic.com/~lynn/subnetwork.html#bitnet

internet posts

https://www.garlic.com/~lynn/subnetwork.html#internet

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Internet Date: 10 Oct, 2023 Blog: Facebook

Co-worker at science center responsible for the internal network (larger than arpanet/internet from just about the beginning until sometime mid/late 80s), technology also used for the corporate sponsored univ. BITNET. Ed Hendricks

Great cutover of arpanet (imp/host) to internetworking on 1jan1983, there were approx. 100 IMPs and 255 hosts, while the internal network was rapidly approaching 1000 nodes. Old archived post that lists corporate locations that added one or more nodes during 1983:

https://www.garlic.com/~lynn/2006k.html#8

We had the 1st corporate CSNET gateway at SJR in 1982 ... old archived CSNET email about the cutover from imp/host to internetworking:

https://www.garlic.com/~lynn/2000e.html#email821230

https://www.garlic.com/~lynn/2000e.html#email830202

Big inhibitor for ARPANET proliferation was the requirement for (tightly controlled) IMPs. Big inhibitors for internal network proliferation were 1) the requirement that links had to be encrypted (and gov. resistance, especially when links crossed national boundaries; in 1985 a major link-encryptor vendor claimed that the internal network had more than half of all link encryptors in the world) and 2) the communication group forcing conversion to SNA and limitation to mainframes.

In the early 80s, I had the HSDT project (T1 and faster computer links, both terrestrial and satellite) and was working with the NSF director, and was supposed to get $20M to interconnect the NSF supercomputer centers. Then congress cuts the budget, some other things happen and eventually an RFP is released (in part based on what we already had running). ... Preliminary Announcement (28Mar1986):

https://www.garlic.com/~lynn/2002k.html#12

The OASC has initiated three programs: The Supercomputer Centers Program to provide Supercomputer cycles; the New Technologies Program to foster new supercomputer software and hardware developments; and the Networking Program to build a National Supercomputer Access Network - NSFnet.

... snip ...

IBM internal politics was not allowing us to bid (being blamed for online computer conferencing inside IBM likely contributed; folklore is that 5of6 members of the corporate executive committee wanted to fire me). The NSF director tried to help by writing the company a letter (3Apr1986, NSF Director to IBM Chief Scientist and IBM Senior VP and director of Research, copying IBM CEO) with support from other gov. agencies ... but that just made the internal politics worse (as did claims that what we already had operational was at least 5yrs ahead of the winning bid; RFP awarded 24Nov87). As regional networks connect in, it becomes the NSFNET backbone, precursor to the modern internet

https://www.technologyreview.com/s/401444/grid-computing/

BITNET converted to TCP/IP for "BITNET2" (about the same time the communication group forced the internal network to convert to SNA) ... then merged with CSNET.

https://en.wikipedia.org/wiki/BITNET

https://en.wikipedia.org/wiki/CSNET

internal network posts

https://www.garlic.com/~lynn/subnetwork.html#internalnet

bitnet posts

https://www.garlic.com/~lynn/subnetwork.html#bitnet

hsdt posts

https://www.garlic.com/~lynn/subnetwork.html#hsdt

NSFNET posts

https://www.garlic.com/~lynn/subnetwork.html#nsfnet

internet posts

https://www.garlic.com/~lynn/subnetwork.html#internet

Interop 88 posts

https://www.garlic.com/~lynn/subnetwork.html#interop88

inventing the internet

https://www.linkedin.com/pulse/inventing-internet-lynn-wheeler/

some internal politics

https://www.linkedin.com/pulse/john-boyd-ibm-wild-ducks-lynn-wheeler/

ibm downfall, breakup, controlling market posts

https://www.garlic.com/~lynn/submisc.html#ibmdownfall

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Internet Date: 10 Oct, 2023 Blog: Facebook re:

some gov agencies were mandating GOSIP (gov OSI) and elimination of TCP/IP ... there were some number of OSI booths at Interop88

I was on the TAB for (Chesson's) XTP and there were some gov. operations involved that believed they needed an ISO standard ... so we took it to (ISO-chartered) ANSI X3S3.3 for standardization as HSP (high-speed protocol) ... eventually they said that ISO required standards that conformed to OSI. XTP didn't qualify because 1) it supported internetworking (a non-existent layer between OSI 3/4, network/transport), 2) it skipped the interface between layers 3/4, and 3) it went directly to the LAN/MAC interface (a non-existent interface somewhere in the middle of OSI layer 3/network).

... at the time there was joke that ISO could standardize stuff that wasn't even possible to implement while IETF required at least two interoperable implementations before standards progression.

interop88 posts

https://www.garlic.com/~lynn/subnetwork.html#interop88

xtp/hsp posts

https://www.garlic.com/~lynn/subnetwork.html#xtphsp

internet posts

https://www.garlic.com/~lynn/subnetwork.html#internet

... and old email from somebody I had previously worked with ... who got responsibility for setting up EARN

https://www.garlic.com/~lynn/2001h.html#email840320

bitnet posts

https://www.garlic.com/~lynn/subnetwork.html#bitnet

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Re: Video terminals Newsgroups: alt.folklore.computers Date: Tue, 10 Oct 2023 15:35:52 -1000

Charlie Gibbs <cgibbs@kltpzyxm.invalid> writes:

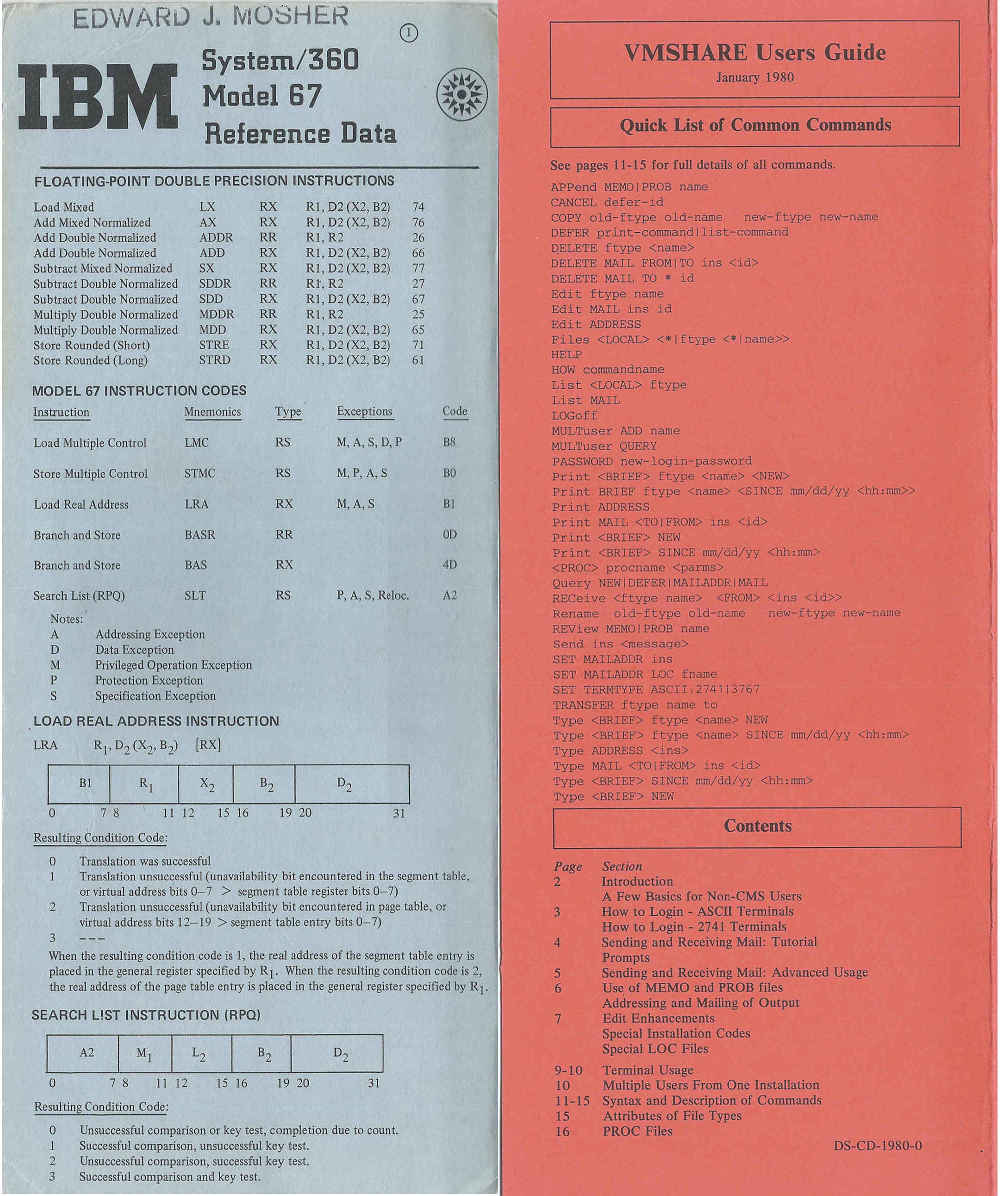

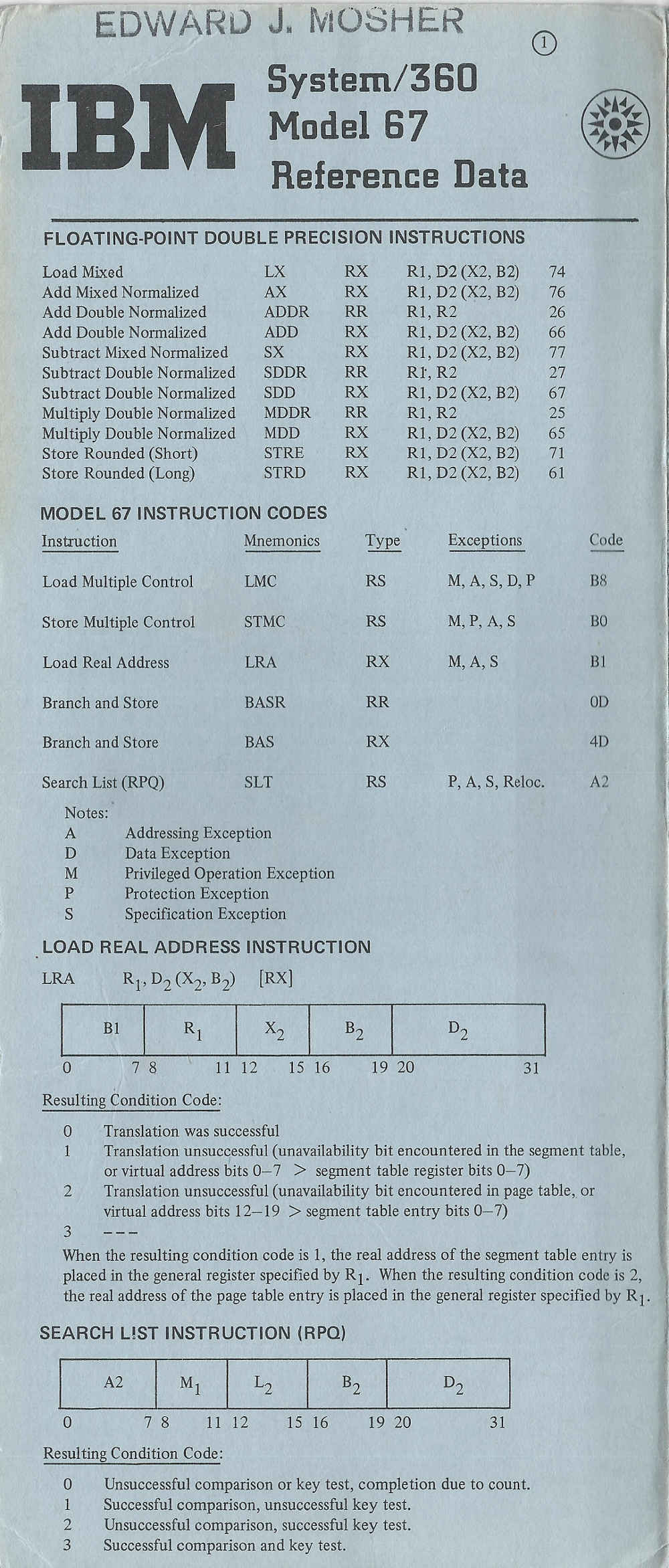

I was given a bunch of hardware & software manuals and got to design & implement my own monitor, device drivers, interrupt handlers, error recovery, storage management, etc. The univ. shut down the datacenter on weekends and I would have the place dedicated (although Monday morning classes were a little hard after 48hrs w/o sleep). Within a few weeks, I had a 2000-card assembler program. Within a year of taking the intro class, the 360/67 had arrived and I was hired fulltime, responsible for os/360 (tss/360 never came to production fruition, so it ran as a 360/65).

The univ. had some number of 2741s (originally for tss/360) ... but then got some tty/ascii terminals (the tty/ascii port scanner for the IBM telecommunication controller arrived in a Heathkit box).

Then some people from the science center came out to install (virtual machine) CP67 ... which was pretty much me playing with it on my weekend dedicated time. It had 1052&2741 terminal support and some tricks to dynamically recognize the line terminal type and switch the line port scanner type. I added TTY/ASCII support (including being able to dynamically switch the terminal scanner type). I then wanted to have a single dial-up number for all terminal types (hunt group):

https://en.wikipedia.org/wiki/Line_hunting

I could dynamically switch the port scanner terminal type for each line, but IBM had taken a short-cut and hardwired the line speed (so it didn't quite work). The univ. starts a project to implement a clone controller: build a channel interface board for an Interdata/3 programmed to emulate the IBM terminal control unit, with the addition that it can do dynamic line speed. It was later enhanced to be an Interdata/4 for the channel interface and a cluster of Interdata/3s for port interfaces (Interdata and later Perkin-Elmer sell it as an IBM clone controller) ... four of us get written up as responsible for (some part of) the clone controller business

https://en.wikipedia.org/wiki/Interdata

https://en.wikipedia.org/wiki/Perkin-Elmer
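To make the hardwired-speed problem concrete, here is a hypothetical sketch (the `Line`, `hunt`, and `answer` names are illustrative, not IBM's or Interdata's code). A hunt group hands each incoming call to the first idle line, so software can retype a line's scanner but cannot choose which line a caller lands on. It assumes 134.5 baud for a 2741 and 110 baud for a TTY.

```python
from __future__ import annotations

from dataclasses import dataclass

BAUD_2741 = 134.5  # IBM 2741 line speed
BAUD_TTY = 110.0   # Teletype (e.g. Model 33) line speed


@dataclass
class Line:
    number: int
    baud: float            # current line speed
    speed_settable: bool   # False models the hardwired-speed short-cut
    busy: bool = False


def hunt(group: list[Line]) -> Line | None:
    """Sequential line hunting: the call rings the first idle line in the group."""
    for line in group:
        if not line.busy:
            line.busy = True
            return line
    return None  # every line in the group is busy


def answer(group: list[Line], caller_baud: float) -> str:
    line = hunt(group)
    if line is None:
        return "busy"
    if line.baud != caller_baud:
        if not line.speed_settable:
            # The scanner terminal type can be switched in software, but the
            # speed cannot, so the caller's characters arrive garbled.
            return f"line {line.number}: garbled"
        # Clone-controller style (hypothetical): adopt the caller's speed.
        line.baud = caller_baud
    return f"line {line.number}: ok"


hardwired = [Line(1, BAUD_2741, False), Line(2, BAUD_TTY, False)]
print(answer(hardwired, BAUD_TTY))  # line 1: garbled (TTY landed on a 2741-speed line)

dynamic = [Line(1, BAUD_2741, True), Line(2, BAUD_TTY, True)]
print(answer(dynamic, BAUD_TTY))    # line 1: ok
```

With a single dial-up number, which line a caller gets depends only on which lines are idle. Every line therefore has to handle every terminal's speed, which is why dynamic line speed mattered.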

other trivia: 360 was originally supposed to be an ASCII machine ... but ASCII unit record gear wasn't ready, so they were going to (temporarily) extend BCD (refs gone 404, but live on at wayback machine)

https://web.archive.org/web/20180513184025/http://www.bobbemer.com/P-BIT.HTM

other

https://web.archive.org/web/20180513184025/http://www.bobbemer.com/FATHEROF.HTM

https://web.archive.org/web/20180513184025/http://www.bobbemer.com/HISTORY.HTM

https://web.archive.org/web/20180513184025/http://www.bobbemer.com/ASCII.HTM

after graduating and joining IBM at the cambridge science center (I got a 2741 at home) ... and then transferring to san jose research (home 2741 replaced 1st with a CDI miniterm, then an IBM 3101 glass tty) ... I also got to wander around lots of IBM & customer datacenters in silicon valley ... including tymshare

https://en.wikipedia.org/wiki/Tymshare

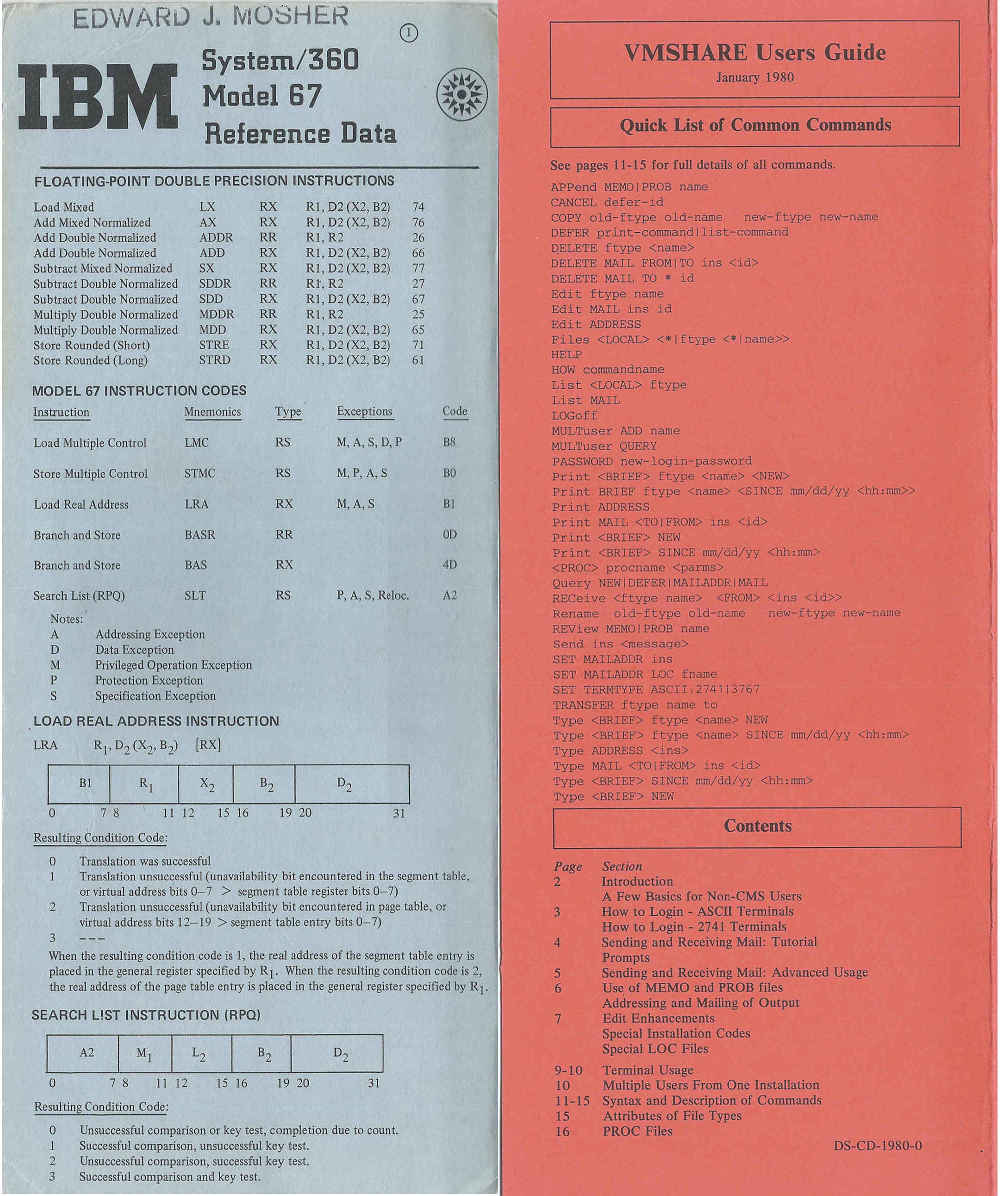

provided their (vm370) CMS-based online computer conferencing system "free" to (mainframe user group) SHARE in Aug1976 as VMSHARE ... archives here

http://vm.marist.edu/~vmshare

I had cut a deal with TYMSHARE to get a monthly tape dump of all VMSHARE files for the internal network & systems ... the biggest problem was lawyers concerned about internal employees being directly exposed to (unfiltered) customer information. (after M/D bought TYMSHARE in 84, vmshare was moved to a different platform)

On one TYMSHARE visit, they demo'ed ADVENTURE, which somebody had found on the Stanford AI PDP10 and ported to CMS, and I got a copy ... which I made available inside IBM (for people who got all the points, I would send a copy of the source).

TYMSHARE also told the story of an executive who, learning that customers were playing games, directed that TYMSHARE was for business and all games had to be removed. He changed his mind after being told that game playing had grown to something like 30% of revenue.

trivia: I had ordered an IBM/PC on announce through the employee plan (with employee discount). However, by the time it arrived, the IBM/PC street price had dropped below the employee discount price. IBM provided a 2400 baud Hayes-compatible modem that supported hardware encryption (for the home terminal emulation program). The terminal emulator did software compression, and both sides kept a cache of a couple thousand recently transmitted characters ... and could send an index into the string cache (rather than the compressed string).
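The post doesn't describe the emulator's actual wire format, so the following is only a minimal sketch, assuming an LZ77-style scheme: both ends keep an identical cache of the most recent characters sent, and the sender emits either a literal character or an (index, length) reference into that cache. `StringCache`, `encode`, `decode`, `WINDOW`, and `MIN_MATCH` are hypothetical names and values chosen for illustration, not the IBM program.

```python
from __future__ import annotations

WINDOW = 2048   # hypothetical cache size ("a couple thousand characters")
MIN_MATCH = 4   # hypothetical: shorter repeats are sent as literals


class StringCache:
    """Recently transmitted characters, maintained identically on both ends."""

    def __init__(self, size: int = WINDOW) -> None:
        self.size = size
        self.buf = ""

    def append(self, text: str) -> None:
        # Keep only the most recent `size` characters.
        self.buf = (self.buf + text)[-self.size:]


def encode(text: str, cache: StringCache) -> list[tuple]:
    """Turn text into ("lit", ch) and ("ref", index, length) tokens."""
    tokens: list[tuple] = []
    i = 0
    while i < len(text):
        best_pos, best_len = -1, 0
        # Brute-force search of the cache for the longest prefix of text[i:].
        for pos in range(len(cache.buf)):
            n = 0
            while (i + n < len(text) and pos + n < len(cache.buf)
                   and cache.buf[pos + n] == text[i + n]):
                n += 1
            if n > best_len:
                best_pos, best_len = pos, n
        if best_len >= MIN_MATCH:
            tokens.append(("ref", best_pos, best_len))
            chunk = text[i:i + best_len]
        else:
            tokens.append(("lit", text[i]))
            chunk = text[i]
        cache.append(chunk)  # update after each token, exactly as the receiver will
        i += len(chunk)
    return tokens


def decode(tokens: list[tuple], cache: StringCache) -> str:
    """Rebuild text, resolving each index against the receiver's own cache."""
    out = []
    for tok in tokens:
        if tok[0] == "ref":
            _, pos, length = tok
            chunk = cache.buf[pos:pos + length]
        else:
            chunk = tok[1]
        cache.append(chunk)
        out.append(chunk)
    return "".join(out)


sender, receiver = StringCache(), StringCache()
for line in ["LISTFILE * EXEC A", "LISTFILE * SCRIPT A"]:
    assert decode(encode(line, sender), receiver) == line
```

Because the receiver appends exactly the same characters, in the same order, as the sender, an index computed against the sender's cache always resolves correctly against the receiver's. The brute-force longest-match search keeps the sketch short; a practical implementation would index the cache (e.g. by hashing short prefixes).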

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

360 plug compatible controller posts

https://www.garlic.com/~lynn/submain.html#360pcm

recent posts mentioning 709/1401/mpio, 360/67, & boeing cfo

https://www.garlic.com/~lynn/2023e.html#99 Mainframe Tapes

https://www.garlic.com/~lynn/2023e.html#64 Computing Career

https://www.garlic.com/~lynn/2023e.html#34 IBM 360/67

https://www.garlic.com/~lynn/2023d.html#106 DASD, Channel and I/O long winded trivia

https://www.garlic.com/~lynn/2023d.html#88 545tech sq, 3rd, 4th, & 5th flrs

https://www.garlic.com/~lynn/2023d.html#83 Typing, Keyboards, Computers

https://www.garlic.com/~lynn/2023d.html#69 Fortran, IBM 1130

https://www.garlic.com/~lynn/2023d.html#32 IBM 370/195

https://www.garlic.com/~lynn/2023c.html#73 Dataprocessing 48hr shift

https://www.garlic.com/~lynn/2023b.html#91 360 Announce Stories

https://www.garlic.com/~lynn/2023b.html#75 IBM Mainframe

https://www.garlic.com/~lynn/2023.html#118 Google Tells Some Employees to Share Desks After Pushing Return-to-Office Plan

https://www.garlic.com/~lynn/2023.html#63 Boeing to deliver last 747, the plane that democratized flying

https://www.garlic.com/~lynn/2023.html#54 Classified Material and Security

https://www.garlic.com/~lynn/2023.html#5 1403 printer

recent posts mentioning TYMSHARE, vmshare, and adventure

https://www.garlic.com/~lynn/2023e.html#9 Tymshare

https://www.garlic.com/~lynn/2023d.html#115 ADVENTURE

https://www.garlic.com/~lynn/2023c.html#14 Adventure

https://www.garlic.com/~lynn/2023b.html#86 Online systems fostering online communication

https://www.garlic.com/~lynn/2023.html#37 Adventure Game

https://www.garlic.com/~lynn/2022e.html#1 IBM Games

https://www.garlic.com/~lynn/2022c.html#28 IBM Cambridge Science Center

https://www.garlic.com/~lynn/2022b.html#107 15 Examples of How Different Life Was Before The Internet

https://www.garlic.com/~lynn/2022b.html#28 Early Online

https://www.garlic.com/~lynn/2022.html#123 SHARE LSRAD Report

https://www.garlic.com/~lynn/2022.html#57 Computer Security

https://www.garlic.com/~lynn/2021k.html#102 IBM CSO

https://www.garlic.com/~lynn/2021h.html#68 TYMSHARE, VMSHARE, and Adventure

https://www.garlic.com/~lynn/2021e.html#8 Online Computer Conferencing

https://www.garlic.com/~lynn/2021b.html#84 1977: Zork

https://www.garlic.com/~lynn/2021.html#85 IBM Auditors and Games

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Internet Date: 11 Oct, 2023 Blog: Facebook re:

note some of the MIT CTSS/7094 people had gone to the 5th flr to do multics; others went to the IBM cambridge science center on the 4th and did the internal network (larger than arpanet/internet from just about the beginning until sometime mid/late 80s; technology also used for the corporate sponsored univ BITNET) and virtual machines (initially cp40/cms on a 360/40 with hardware added for virtual memory, morphing into cp67/cms when the 360/67, standard with virtual memory, became available). CTSS RUNOFF was redone for CMS as SCRIPT. GML was invented at the science center in 1969 and GML tag processing was added to SCRIPT (GML chosen because of the 1st letters of the inventors' last names). I've regularly cited Goldfarb's SGML website ... but checking just now, it's not responding ... so here is the most recent page from the wayback machine

https://web.archive.org/web/20230602063701/http://www.sgmlsource.com/

SGML is the International Standard (ISO 8879) language for structured data and document representation, the basis of HTML and XML and many others. I invented SGML in 1974 and led a 12-year technical effort by several hundred people to develop its present form as an International Standard.

... snip ...

SGML history

https://web.archive.org/web/20230402213042/http://www.sgmlsource.com/history/index.htm

https://web.archive.org/web/20230703135955/http://www.sgmlsource.com/history/index.htm

Welcome to the SGML History Niche. It contains some reliable papers on the early history of SGML, and its precursor, IBM's Generalized Markup Language, GML.

... snip ...

papers about early GML

https://web.archive.org/web/20230402212558/http://www.sgmlsource.com/history/jasis.htm

Actually, the law office application was the original motivation for the project, something I was allowed to do part-time because of my knowledge of the user requirements. My real job was to encourage the staffs of the various scientific centers to make use of the CP-67-based Wide Area Network that was centered in Cambridge.

... snip ...

... see "Conclusions" section in above webpage. ... then a decade later, GML morphs into SGML, and after another decade, SGML morphs into HTML at CERN. Trivia: 1st webserver in the US is on (Stanford) SLAC's VM370 system (CP67 having morphed into VM370) ... and CP67's WAN had morphed into the company internal network & BITNET.

https://ahro.slac.stanford.edu/wwwslac-exhibit

https://ahro.slac.stanford.edu/wwwslac-exhibit/early-web-chronology-and-documents-1991-1994

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

GML, SGML, HTML, XML, ... etc, posts

https://www.garlic.com/~lynn/submain.html#sgml

internal network posts

https://www.garlic.com/~lynn/subnetwork.html#internalnet

bitnet posts

https://www.garlic.com/~lynn/subnetwork.html#bitnet

trivia: the last product we did at IBM before leaving was HA/CMP (now PowerHA)

https://en.wikipedia.org/wiki/IBM_High_Availability_Cluster_Multiprocessing

it had started out as HA/6000 for the NYTimes to move their newspaper system (ATEX) off VAXCluster to RS/6000. I renamed it HA/CMP when we started doing technical/scientific cluster scale-up with national labs and commercial cluster scale-up with RDBMS vendors (Oracle, Sybase, Informix, Ingres). At a meeting early Jan1992, AWD VP Hester tells Oracle CEO Ellison that there would be a 16-processor cluster mid-92 and a 128-processor cluster ye-92. A couple weeks later, cluster scale-up is transferred for announce as IBM supercomputer (for technical/scientific only) and we are told we couldn't work on anything with more than four processors (we leave IBM a few months later).

Later we are brought into a small client/server startup as consultants; two former oracle people (that we had worked with on cluster scale-up) are there, responsible for something called "commerce server", and they wanted to do payment transactions on the server. The startup had also invented this technology they called "SSL" that they wanted to use; the result is now frequently called "electronic commerce". I had responsibility for everything between webservers and financial payment networks. Later I did a talk on "Why Internet Isn't Business Critical Dataprocessing" ... based on the compensating processes and software I had to do ... Postel sponsored the talk at ISI/USC (sometimes Postel would also let me help with RFCs)

https://en.wikipedia.org/wiki/Jon_Postel

HA/CMP posts

https://www.garlic.com/~lynn/subtopic.html#hacmp

internet posts

https://www.garlic.com/~lynn/subnetwork.html#internet

financial payment network gateway

https://www.garlic.com/~lynn/subnetwork.html#gateway

some recent posts mentioning Postel, Internet & business critical dataprocessing

https://www.garlic.com/~lynn/2023e.html#37 IBM 360/67

https://www.garlic.com/~lynn/2023e.html#17 Maneuver Warfare as a Tradition. A Blast from the Past

https://www.garlic.com/~lynn/2023d.html#85 Airline Reservation System

https://www.garlic.com/~lynn/2023d.html#81 Taligent and Pink

https://www.garlic.com/~lynn/2023d.html#56 How the Net Was Won

https://www.garlic.com/~lynn/2023d.html#46 wallpaper updater

https://www.garlic.com/~lynn/2023c.html#34 30 years ago, one decision altered the course of our connected world

https://www.garlic.com/~lynn/2023.html#54 Classified Material and Security

https://www.garlic.com/~lynn/2023.html#31 IBM Change

https://www.garlic.com/~lynn/2022g.html#90 IBM Cambridge Science Center Performance Technology

https://www.garlic.com/~lynn/2022g.html#26 Why Things Fail

https://www.garlic.com/~lynn/2022f.html#46 What's something from the early days of the Internet which younger generations may not know about?

https://www.garlic.com/~lynn/2022f.html#33 IBM "nine-net"

https://www.garlic.com/~lynn/2022e.html#28 IBM "nine-net"

https://www.garlic.com/~lynn/2022c.html#14 IBM z16: Built to Build the Future of Your Business

https://www.garlic.com/~lynn/2022b.html#68 ARPANET pioneer Jack Haverty says the internet was never finished

https://www.garlic.com/~lynn/2022b.html#38 Security

https://www.garlic.com/~lynn/2022.html#129 Dataprocessing Career

https://www.garlic.com/~lynn/2021k.html#128 The Network Nation

https://www.garlic.com/~lynn/2021k.html#87 IBM and Internet Old Farts

https://www.garlic.com/~lynn/2021k.html#57 System Availability

https://www.garlic.com/~lynn/2021j.html#55 ESnet

https://www.garlic.com/~lynn/2021j.html#42 IBM Business School Cases

https://www.garlic.com/~lynn/2021j.html#10 System Availability

https://www.garlic.com/~lynn/2021h.html#72 IBM Research, Adtech, Science Center

https://www.garlic.com/~lynn/2021h.html#24 NOW the web is 30 years old: When Tim Berners-Lee switched on the first World Wide Web server

https://www.garlic.com/~lynn/2021e.html#74 WEB Security

https://www.garlic.com/~lynn/2021e.html#56 Hacking, Exploits and Vulnerabilities

https://www.garlic.com/~lynn/2021e.html#7 IBM100 - Rise of the Internet

https://www.garlic.com/~lynn/2021d.html#16 The Rise of the Internet

https://www.garlic.com/~lynn/2021c.html#68 Online History

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Internet Date: 11 Oct, 2023 Blog: Facebook

re:

X.25 topic drift: my wife did a short stint as chief architect for Amadeus (EU airline system built off the old Eastern Airlines System/One) ... however, she sided with Europe on X.25 (instead of IBM SNA). The IBM communication group got her replaced, but it didn't do them much good; Europe went with X.25 anyway and her SNA replacement got replaced.

some amadeus/x.25 posts

https://www.garlic.com/~lynn/2023d.html#80 Airline Reservation System

https://www.garlic.com/~lynn/2023d.html#35 Eastern Airlines 370/195 System/One

https://www.garlic.com/~lynn/2023c.html#48 Conflicts with IBM Communication Group

https://www.garlic.com/~lynn/2023c.html#47 IBM ACIS

https://www.garlic.com/~lynn/2023c.html#8 IBM Downfall

https://www.garlic.com/~lynn/2023.html#96 Mainframe Assembler

https://www.garlic.com/~lynn/2022h.html#97 IBM 360

https://www.garlic.com/~lynn/2022h.html#10 Google Cloud Launches Service to Simplify Mainframe Modernization

https://www.garlic.com/~lynn/2022f.html#113 360/67 Virtual Memory

https://www.garlic.com/~lynn/2022c.html#76 lock me up, was IBM Mainframe market

https://www.garlic.com/~lynn/2022c.html#75 lock me up, was IBM Mainframe market

https://www.garlic.com/~lynn/2021b.html#0 Will The Cloud Take Down The Mainframe?

https://www.garlic.com/~lynn/2021.html#71 Airline Reservation System


From: Lynn Wheeler <lynn@garlic.com> Subject: Internet Date: 12 Oct, 2023 Blog: Facebook

re:

In the mid-80s, the communication group was fiercely fighting off client/server and distributed computing and trying to block release of mainframe TCP/IP support. Apparently some influential customers got that reversed, and the communication group changed tactics, saying that since they had corporate strategic responsibility for everything that crossed datacenter walls, it had to be released through them. What shipped got 44kbytes/sec aggregate throughput using nearly a whole 3090 CPU. I then did RFC1044 support, and in some tuning tests at Cray Research between a 4341 and a Cray, got sustained channel throughput using only a modest amount of the 4341 ... something like a 500 times improvement in bytes moved per instruction executed.

In any case, it contributed to the BITNET transition to BITNET2 (supporting TCP/IP).

RFC1044 posts

https://www.garlic.com/~lynn/subnetwork.html#1044

BITNET posts

https://www.garlic.com/~lynn/subnetwork.html#bitnet

communication group fiercely fighting off client/server and distributed computing posts

https://www.garlic.com/~lynn/subnetwork.html#terminal


From: Lynn Wheeler <lynn@garlic.com> Subject: Internet Date: 12 Oct, 2023 Blog: Facebook

re:

I've got a whole collection of (AUP) policy files. I've conjectured that some of the commercial use restrictions are because of (tax-free?) contributions to various networks. The scenario is that telcos had fixed infrastructure costs (staff, equipment, etc) that were significantly paid for by bandwidth use charges ... they also had enormous unused capacity in dark fiber. There was a chicken&egg problem: to promote use of the unused capacity they needed bandwidth-hungry applications; to promote bandwidth-hungry applications they needed to drastically reduce bandwidth charges (which could mean operating at a loss for years). Contributing resources for non-commercial use could promote bandwidth-hungry applications w/o impacting existing revenue. Folklore is that NSFnet (supercomputer access network, evolving into the NSFNET backbone as regional networks connected in) got resource/bandwidth contributions that were 4-5 times the winning bid.

Preliminary Announcement (28Mar1986):

https://www.garlic.com/~lynn/2002k.html#12

The OASC has initiated three programs: The Supercomputer Centers

Program to provide Supercomputer cycles; the New Technologies Program

to foster new supercomputer software and hardware developments; and

the Networking Program to build a National Supercomputer Access

Network - NSFnet.

... snip ...

NSFNET posts

https://www.garlic.com/~lynn/subnetwork.html#nsfnet

internet posts

https://www.garlic.com/~lynn/subnetwork.html#internet

NEARnet:

29 October 1990

NEARnet - ACCEPTABLE USE POLICY

This statement represents a guide to the acceptable use of NEARnet for

data communications. It is only intended to address the issue of

NEARnet use. In those cases where data communications are carried

across other regional networks or the Internet, NEARnet users are

advised that acceptable use policies of those other networks apply and

may limit use.

NEARnet member organizations are expected to inform their users of

both the NEARnet and the NSFnet acceptable use policies.

1. NEARnet Primary Goals

1.1 NEARnet, the New England Academic and Research Network, has been

established to enhance educational and research activities in New

England, and to promote regional and national innovation and

competitiveness. NEARnet provides access to regional and national

resources to its members, and access to regional resources from

organizations throughout the United States and the world.

2. NEARnet Acceptable Use Policy

2.1 All use of NEARnet must be consistent with NEARnet's primary

goals.

2.2 It is not acceptable to use NEARnet for illegal purposes.

2.3 It is not acceptable to use NEARnet to transmit threatening,

obscene, or harassing materials.

2.4 It is not acceptable to use NEARnet so as to interfere with or

disrupt network users, services or equipment. Disruptions include,

but are not limited to, distribution of unsolicited advertizing,

propagation of computer worms and viruses, and using the network to

make unauthorized entry to any other machine accessible via the

network.

2.5 It is assumed that information and resources accessible via

NEARnet are private to the individuals and organizations which own or

hold rights to those resources and information unless specifically

stated otherwise by the owners or holders of rights. It is therefore

not acceptable for an individual to use NEARnet to access information

or resources unless permission to do so has been granted by the owners

or holders of rights to those resources or information.

3. Violation of Policy

3.1 NEARnet will review alleged violations of Acceptable Use Policy on

a case-by-case basis. Clear violations of policy which are not

promptly remedied by member organization may result in termination of

NEARnet membership and network services to member.

... snip ...

some past posts mentioning network AUP

https://www.garlic.com/~lynn/2023d.html#55 How the Net Was Won

https://www.garlic.com/~lynn/2022h.html#91 AL Gore Invented The Internet

https://www.garlic.com/~lynn/2021k.html#130 NSFNET

https://www.garlic.com/~lynn/2013n.html#18 z/OS is antique WAS: Aging Sysprogs = Aging Farmers

https://www.garlic.com/~lynn/2010g.html#75 What is the protocal for GMT offset in SMTP (e-mail) header header time-stamp?

https://www.garlic.com/~lynn/2010d.html#71 LPARs: More or Less?

https://www.garlic.com/~lynn/2010b.html#33 Happy DEC-10 Day

https://www.garlic.com/~lynn/2006j.html#46 Arpa address

https://www.garlic.com/~lynn/2000e.html#29 Vint Cerf and Robert Kahn and their political opinions

https://www.garlic.com/~lynn/2000e.html#5 Is Al Gore The Father of the Internet?^

https://www.garlic.com/~lynn/2000c.html#26 The first "internet" companies?

https://www.garlic.com/~lynn/aadsm12.htm#23 10 choices that were critical to the Net's success


From: Lynn Wheeler <lynn@garlic.com> Subject: Internet Date: 12 Oct, 2023 Blog: Facebook

re:

archived post with a decade of VAX numbers, sliced and diced by model, year, us/non-us

https://www.garlic.com/~lynn/2002f.html#0

IBM 4300s and VAX sold into the same mid-range market, and in similar numbers for small-unit orders. The big difference was large organizations placing orders for hundreds of 4300s at a time. As seen in the (VAX) numbers, the mid-range market started to change (to workstations and large PC servers) in the 2nd half of the 80s.

In Jan1979, I got con'ed into doing benchmarks on an engineering 4341 (before first customer ship) for a national lab that was looking at getting 70 for a compute farm (sort of the leading edge of the coming cluster supercomputing tsunami).

old email from spring 1979 about USAFDS coming by to talk about 20 4341s

https://www.garlic.com/~lynn/2001m.html#email790404b

... but by the time they got around to stopping by in the fall, it had morphed into 210 4341s (part of the leading edge of the coming departmental computing tsunami). USAFDS was the MULTICS poster child ... and there was some rivalry between MULTICS on the 5th flr and the IBM Science Center on the 4th flr.

https://www.multicians.org/site-afdsc.html

4341s & IBM 3370 (FBA, fixed-block disks) didn't require datacenter provisioning and could be placed out in departmental areas. Inside IBM, departmental conference rooms became scarce with so many being converted into distributed 4341 rooms ... part of the big explosion in the internal network starting in the early 80s; archived post on passing 1000 nodes in 1983

https://www.garlic.com/~lynn/2006k.html#8

internal network posts

https://www.garlic.com/~lynn/subnetwork.html#internalnet

posts mentioning 4300 & leading edge of (cluster supercomputing and departmental computing) tsunamis

https://www.garlic.com/~lynn/2023e.html#80 microcomputers, minicomputers, mainframes, supercomputers

https://www.garlic.com/~lynn/2023e.html#71 microcomputers, minicomputers, mainframes, supercomputers

https://www.garlic.com/~lynn/2023e.html#59 801/RISC and Mid-range

https://www.garlic.com/~lynn/2023e.html#52 VM370/CMS Shared Segments

https://www.garlic.com/~lynn/2023d.html#102 Typing, Keyboards, Computers

https://www.garlic.com/~lynn/2023d.html#93 The IBM mainframe: How it runs and why it survives

https://www.garlic.com/~lynn/2023c.html#46 IBM DASD

https://www.garlic.com/~lynn/2023b.html#78 IBM 158-3 (& 4341)

https://www.garlic.com/~lynn/2023.html#74 IBM 4341

https://www.garlic.com/~lynn/2023.html#71 IBM 4341

https://www.garlic.com/~lynn/2023.html#18 PROFS trivia

https://www.garlic.com/~lynn/2022h.html#108 IBM 360

https://www.garlic.com/~lynn/2022f.html#92 CDC6600, Cray, Thornton

https://www.garlic.com/~lynn/2022e.html#67 SHARE LSRAD Report

https://www.garlic.com/~lynn/2022d.html#66 VM/370 Turns 50 2Aug2022

https://www.garlic.com/~lynn/2022c.html#19 Telum & z16

https://www.garlic.com/~lynn/2022c.html#18 IBM Left Behind

https://www.garlic.com/~lynn/2022c.html#5 4361/3092

https://www.garlic.com/~lynn/2022.html#124 TCP/IP and Mid-range market

https://www.garlic.com/~lynn/2022.html#15 Mainframe I/O

https://www.garlic.com/~lynn/2021f.html#84 Mainframe mid-range computing market

https://www.garlic.com/~lynn/2021c.html#47 MAINFRAME (4341) History

https://www.garlic.com/~lynn/2021b.html#24 IBM Recruiting

https://www.garlic.com/~lynn/2021.html#53 Amdahl Computers


From: Lynn Wheeler <lynn@garlic.com> Subject: Internet Date: 12 Oct, 2023 Blog: Facebook

re:

Before doing XTP, Greg Chesson was involved in doing UUCP. In 1993, I got a full (PAGESAT satellite) usenet feed at home, in return for doing satellite modem drivers and writing articles for industry trade magazines.

xtp/hsp posts

https://www.garlic.com/~lynn/subnetwork.html#xtphsp

posts mentioning PAGESAT, UUCP, usenet

https://www.garlic.com/~lynn/2023e.html#58 USENET, the OG social network, rises again like a text-only phoenix

https://www.garlic.com/~lynn/2022e.html#40 Best dumb terminal for serial connections

https://www.garlic.com/~lynn/2022b.html#7 USENET still around

https://www.garlic.com/~lynn/2022.html#11 Home Computers

https://www.garlic.com/~lynn/2021i.html#99 SUSE Reviving Usenet

https://www.garlic.com/~lynn/2021i.html#95 SUSE Reviving Usenet

https://www.garlic.com/~lynn/2018e.html#51 usenet history, was 1958 Crisis in education

https://www.garlic.com/~lynn/2017h.html#110 private thread drift--Re: Demolishing the Tile Turtle

https://www.garlic.com/~lynn/2017g.html#51 Stopping the Internet of noise

https://www.garlic.com/~lynn/2017b.html#21 Pre-internet email and usenet (was Re: How to choose the best news server for this newsgroup in 40tude Dialog?)

https://www.garlic.com/~lynn/2016g.html#59 The Forgotten World of BBS Door Games - Slideshow from PCMag.com

https://www.garlic.com/~lynn/2015h.html#109 25 Years: How the Web began

https://www.garlic.com/~lynn/2015d.html#57 email security re: hotmail.com

https://www.garlic.com/~lynn/2013l.html#26 Anyone here run UUCP?

https://www.garlic.com/~lynn/2012b.html#92 The PC industry is heading for collapse

https://www.garlic.com/~lynn/2009j.html#19 Another one bites the dust

https://www.garlic.com/~lynn/2006m.html#11 An Out-of-the-Main Activity

https://www.garlic.com/~lynn/2001h.html#66 UUCP email

https://www.garlic.com/~lynn/2000e.html#39 I'll Be! Al Gore DID Invent the Internet After All ! NOT

https://www.garlic.com/~lynn/aepay4.htm#miscdns misc. other DNS


From: Lynn Wheeler <lynn@garlic.com> Subject: Re: Video terminals Newsgroups: alt.folklore.computers Date: Thu, 12 Oct 2023 16:05:39 -1000

Peter Flass <peter_flass@yahoo.com> writes:

I had assembler options that generated two versions of MPIO: 1) BPS stand-alone (low-level: device drivers, interrupt handlers, etc) and 2) OS/360 with system services and I/O macros.

Under OS/360 on the 360/30, the BPS stand-alone version took 30mins to assemble, while the OS/360 version took an hour ... nearly all of the difference was that each OS/360 DCB macro took 5mins to assemble.

recent posts mentioning MPIO

https://www.garlic.com/~lynn/2023e.html#99 Mainframe Tapes

https://www.garlic.com/~lynn/2023e.html#64 Computing Career

https://www.garlic.com/~lynn/2023e.html#54 VM370/CMS Shared Segments

https://www.garlic.com/~lynn/2023e.html#34 IBM 360/67

https://www.garlic.com/~lynn/2023e.html#7 HASP, JES, MVT, 370 Virtual Memory, VS2

https://www.garlic.com/~lynn/2023d.html#106 DASD, Channel and I/O long winded trivia

https://www.garlic.com/~lynn/2023d.html#98 IBM DASD, Virtual Memory

https://www.garlic.com/~lynn/2023d.html#88 545tech sq, 3rd, 4th, & 5th flrs

https://www.garlic.com/~lynn/2023d.html#83 Typing, Keyboards, Computers

https://www.garlic.com/~lynn/2023d.html#79 IBM System/360 JCL

https://www.garlic.com/~lynn/2023d.html#69 Fortran, IBM 1130

https://www.garlic.com/~lynn/2023d.html#64 IBM System/360, 1964

https://www.garlic.com/~lynn/2023d.html#60 CICS Product 54yrs old today

https://www.garlic.com/~lynn/2023d.html#32 IBM 370/195

https://www.garlic.com/~lynn/2023d.html#14 Rent/Leased IBM 360

https://www.garlic.com/~lynn/2023c.html#96 Fortran

https://www.garlic.com/~lynn/2023c.html#82 Dataprocessing Career

https://www.garlic.com/~lynn/2023c.html#73 Dataprocessing 48hr shift

https://www.garlic.com/~lynn/2023c.html#28 Punch Cards

https://www.garlic.com/~lynn/2023b.html#91 360 Announce Stories

https://www.garlic.com/~lynn/2023b.html#75 IBM Mainframe

https://www.garlic.com/~lynn/2023b.html#15 IBM User Group, SHARE

https://www.garlic.com/~lynn/2023.html#118 Google Tells Some Employees to Share Desks After Pushing Return-to-Office Plan

https://www.garlic.com/~lynn/2023.html#96 Mainframe Assembler

https://www.garlic.com/~lynn/2023.html#65 7090/7044 Direct Couple

https://www.garlic.com/~lynn/2023.html#63 Boeing to deliver last 747, the plane that democratized flying

https://www.garlic.com/~lynn/2023.html#58 Almost IBM class student

https://www.garlic.com/~lynn/2023.html#54 Classified Material and Security

https://www.garlic.com/~lynn/2023.html#38 Disk optimization

https://www.garlic.com/~lynn/2023.html#22 IBM Punch Cards

https://www.garlic.com/~lynn/2023.html#5 1403 printer

https://www.garlic.com/~lynn/2023.html#2 big and little, Can BCD and binary multipliers share circuitry?


From: Lynn Wheeler <lynn@garlic.com> Subject: Audit failure is not down to any one firm: the whole audit system is designed to fail Date: 13 Oct, 2023 Blog: Facebook

Audit failure is not down to any one firm: the whole audit system is designed to fail to suit the interests of big business and their auditors

... rhetoric on the floor of Congress was that Sarbanes-Oxley would prevent future ENRONs and guarantee that auditors and executives did jailtime (however, there were jokes that Congress felt bad that one of the big firms had gone out of business, and wanted to increase audit business to help the rest).

Possibly even GAO didn't believe SOX would make any difference and started doing reports on fraudulent financial filings by public companies, even showing that they increased after SOX went into effect (and nobody was doing jailtime).

sarbanes-oxley posts

https://www.garlic.com/~lynn/submisc.html#sarbanes-oxley

public company fraudulent financial reports

https://www.garlic.com/~lynn/submisc.html#fraudulent.financial.filings


From: Lynn Wheeler <lynn@garlic.com> Subject: Internet Date: 13 Oct, 2023 Blog: Facebook

re:

In 1980, IBM STL (on the west coast) and Hursley (in England) were looking at an off-shift sharing operation ... via a double-hop satellite 56kbit link (west coast/east coast up/down; east coast/England up/down). It was first connected via VNET/RSCS and worked fine; then an SNA/JES2 bigot executive insisted that it be a JES2 connection, and it didn't work; they then moved it back to VNET/RSCS and everything was flowing just fine. The executive was then quoted as saying that VNET/RSCS was so stupid that it didn't know it wasn't working (the problem was that SNA/JES2 had a window pacing algorithm with ACK time-outs and couldn't handle the double-hop round-trip latency) ... I wrote up our dynamic adaptive rate-based pacing algorithm for inclusion in (Chesson's) XTP.
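
A rough back-of-the-envelope sketch of why window pacing with ACK time-outs struggles on a double-hop link: it computes the propagation round trip, the bandwidth-delay product at 56kbit/s, and the throughput cap a fixed window imposes, then shows fixed-interval (rate-based) send times that don't wait on ACKs. Assumptions not in the post: geostationary altitude of 35,786 km, speed-of-light propagation only (ground legs, slant range and processing ignored, so a real RTT is somewhat higher), and hypothetical window, packet and time-out values. The pacing shown is a plain fixed rate, not the dynamic adaptive algorithm written up for XTP.

```python
# Back-of-the-envelope: fixed-window pacing vs. a double-hop GEO satellite link.
# Assumptions (not from the post): GEO altitude 35,786 km, speed-of-light
# propagation only, ground legs/processing ignored. 56 kbit/s is from the post;
# window, packet and time-out values are hypothetical.

GEO_ALTITUDE_KM = 35_786.0
LIGHT_KM_PER_S = 299_792.458


def one_way_delay_s(hops: int) -> float:
    """Propagation delay for `hops` satellite hops (each hop = up + down)."""
    return hops * 2 * GEO_ALTITUDE_KM / LIGHT_KM_PER_S


def window_limited_rate(window_bytes: int, rtt_s: float, line_bytes_per_s: float) -> float:
    """At most `window_bytes` can be unacknowledged, so throughput is capped
    at window/RTT (and never exceeds the line rate)."""
    return min(window_bytes / rtt_s, line_bytes_per_s)


def paced_send_times(packet_bytes: int, rate_bytes_per_s: float, count: int) -> list[float]:
    """Rate-based pacing: send every packet_bytes/rate seconds, regardless of
    when ACKs come back."""
    gap = packet_bytes / rate_bytes_per_s
    return [i * gap for i in range(count)]


line = 56_000 / 8                   # 56 kbit/s -> 7000 bytes/s
rtt = 2 * one_way_delay_s(hops=2)   # double hop out, double hop back
bdp = line * rtt                    # bytes in flight needed to keep the line busy
ACK_TIMEOUT_S = 0.5                 # hypothetical time-out, shorter than the RTT

print(f"RTT ~ {rtt:.3f} s, bandwidth-delay product ~ {bdp:.0f} bytes")
print("time-out fires before any ACK can arrive:", ACK_TIMEOUT_S < rtt)
for window in (1024, 4096, 8192):   # hypothetical window sizes
    rate = window_limited_rate(window, rtt, line)
    print(f"window {window:5d} B -> {rate:6.0f} B/s ({100 * rate / line:.0f}% of line)")
print("paced send times (s):", [round(t, 3) for t in paced_send_times(1024, line, 4)])
```

Under these assumptions the round trip comes out a little under one second, so any ACK time-out shorter than that fires before the first ACK can possibly arrive, and a 4k window gets only about 60% of the line rate.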

One of my first HSDT T1 satellite links was between Los Gatos (on the west coast) and Clementi's

https://en.wikipedia.org/wiki/Enrico_Clementi

E&S lab in IBM Kingston (on the east coast) that had a boatload of Floating Point Systems boxes

https://en.wikipedia.org/wiki/Floating_Point_Systems

It was a T1 tail-circuit on the T3 microwave between Los Gatos and the IBM San Jose plant site, to the T3 satellite 10m dish, to the IBM Kingston T3 satellite 10m dish. HSDT then got its own Ku-band TDMA satellite system, with 4.5m dishes in Los Gatos and IBM Yorktown Research (on the east coast) and a 7m dish in Austin with the workstation division. There were LSM and EVE (hardware VLSI logic verification, running something like 50,000 times faster than logic verification software on the largest mainframe), and claims that Austin being able to use the boxes remotely helped bring in the RIOS (RS/6000) chipset a year early. HSDT had two separate custom-spec'ed TDMA systems built, one by a subsidiary of a Canadian firm and another by a Japanese firm.

internal network posts

https://www.garlic.com/~lynn/subnetwork.html#internalnet

HSDT posts

https://www.garlic.com/~lynn/subnetwork.html#hsdt

801/risc, iliad, romp, rios, pc/rt, rs/6000, power, power/pc posts

https://www.garlic.com/~lynn/subtopic.html#801

xtp/hsp posts

https://www.garlic.com/~lynn/subnetwork.html#xtphsp

posts mentioning Ku-band TDMA system

https://www.garlic.com/~lynn/2021b.html#22 IBM Recruiting

https://www.garlic.com/~lynn/2021.html#62 Mainframe IPL

https://www.garlic.com/~lynn/2014b.html#67 Royal Pardon For Turing

https://www.garlic.com/~lynn/2010k.html#12 taking down the machine - z9 series

https://www.garlic.com/~lynn/2008m.html#44 IBM-MAIN longevity

https://www.garlic.com/~lynn/2008m.html#20 IBM-MAIN longevity

https://www.garlic.com/~lynn/2008m.html#19 IBM-MAIN longevity

https://www.garlic.com/~lynn/2006k.html#55 5963 (computer grade dual triode) production dates?

https://www.garlic.com/~lynn/2006.html#26 IBM microwave application--early data communications

https://www.garlic.com/~lynn/2003k.html#14 Ping: Anne & Lynn Wheeler

https://www.garlic.com/~lynn/2003j.html#76 1950s AT&T/IBM lack of collaboration?

https://www.garlic.com/~lynn/94.html#25 CP spooling & programming technology

https://www.garlic.com/~lynn/93.html#28 Log Structured filesystems -- think twice

other posts mentioning Clementi & satellite link

https://www.garlic.com/~lynn/2023d.html#120 Science Center, SCRIPT, GML, SGML, HTML, RSCS/VNET

https://www.garlic.com/~lynn/2023d.html#104 DASD, Channel and I/O long winded trivia

https://www.garlic.com/~lynn/2023b.html#57 Ethernet (& CAT5)

https://www.garlic.com/~lynn/2022h.html#26 Inventing the Internet

https://www.garlic.com/~lynn/2022f.html#5 What is IBM SNA?

https://www.garlic.com/~lynn/2022e.html#103 John Boyd and IBM Wild Ducks

https://www.garlic.com/~lynn/2022e.html#88 Enhanced Production Operating Systems

https://www.garlic.com/~lynn/2022e.html#33 IBM 37x5 Boxes

https://www.garlic.com/~lynn/2022e.html#27 IBM "nine-net"

https://www.garlic.com/~lynn/2022d.html#73 WAIS. Z39.50

https://www.garlic.com/~lynn/2022d.html#29 Network Congestion

https://www.garlic.com/~lynn/2022c.html#57 ASCI White

https://www.garlic.com/~lynn/2022c.html#52 IBM Personal Computing

https://www.garlic.com/~lynn/2022c.html#22 Telum & z16

https://www.garlic.com/~lynn/2022b.html#79 Channel I/O

https://www.garlic.com/~lynn/2022b.html#69 ARPANET pioneer Jack Haverty says the internet was never finished

https://www.garlic.com/~lynn/2022b.html#16 Channel I/O

https://www.garlic.com/~lynn/2022.html#121 HSDT & Clementi's Kingston E&S lab

https://www.garlic.com/~lynn/2022.html#95 Latency and Throughput

https://www.garlic.com/~lynn/2021j.html#32 IBM Downturn

https://www.garlic.com/~lynn/2021j.html#31 IBM Downturn

https://www.garlic.com/~lynn/2021e.html#28 IBM Cottle Plant Site

https://www.garlic.com/~lynn/2021e.html#14 IBM Internal Network

https://www.garlic.com/~lynn/2021.html#62 Mainframe IPL

https://www.garlic.com/~lynn/2019c.html#48 IBM NUMBERS BIPOLAR'S DAYS WITH G5 CMOS MAINFRAMES

https://www.garlic.com/~lynn/2019.html#32 Cluster Systems

https://www.garlic.com/~lynn/2018f.html#110 IBM Token-RIng

https://www.garlic.com/~lynn/2018f.html#109 IBM Token-Ring

https://www.garlic.com/~lynn/2017h.html#50 System/360--detailed engineering description (AFIPS 1964)


From: Lynn Wheeler <lynn@garlic.com> Subject: Re: Video terminals Newsgroups: alt.folklore.computers Date: Fri, 13 Oct 2023 09:39:26 -1000

Charlie Gibbs <cgibbs@kltpzyxm.invalid> writes:

folklore is that the person writing the assembler op-code lookup was told it had to be done in 256 bytes (so the assembler could run on a minimum-memory 360) ... as a result, it had to sequentially read a dataset of op-codes for each statement. Some time later, the assembler got a huge speed-up by making the op-codes part of the assembler.

Within a year of taking the intro class, the 360/67 arrived and I was hired fulltime, responsible for os/360 (tss/360 never came to production fruition, so it ran as a 360/65 with os/360). Non-resident SVCs had to fit in 2k ... as a result, things like file open/close had to stream through an enormous number of 2k pieces.

Student fortran jobs had run in well under a second on the 709 (tape->tape). Initially on os/360 they ran well over a minute. I installed HASP and it cut the time in half. I then reworked STAGE2 SYSGENs so they could run in the production job stream, with the ordering of datasets and PDS members optimized for arm seek and multi-track search, cutting elapsed time by another 2/3rds to 12.9secs. Student fortran never got better than the 709 until I installed Univ. of Waterloo's WATFOR.

archived (a.f.c.) post with part of a SHARE presentation I gave on performance work (both os/360 and some work playing with CP/67)

https://www.garlic.com/~lynn/94.html#18

With CP/67, I started out cutting pathlengths for running OS/360 in a virtual machine. Stand-alone, it ran 322secs; under CP67 it initially ran 856secs (534secs of CP67 CPU). After a couple of months, I had cut it to 435secs (113secs of CP67 CPU, a 421secs CPU reduction).
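
The CP/67 figures are internally consistent if CP67 overhead is read as elapsed time under CP67 minus the stand-alone time. A small arithmetic check follows; the subtraction reading is an assumption, but it reproduces the quoted 534, 113 and 421 secs exactly.

```python
# Arithmetic check of the CP/67 figures in the post. Assumption: CP67 CPU
# overhead = elapsed time in the virtual machine minus stand-alone time.
STANDALONE_S = 322
BEFORE_S, AFTER_S = 856, 435

overhead_before = BEFORE_S - STANDALONE_S      # 534 secs CP67 CPU
overhead_after = AFTER_S - STANDALONE_S        # 113 secs CP67 CPU
reduction = overhead_before - overhead_after   # 421 secs CPU reduction

print(overhead_before, overhead_after, reduction)
print(f"virtual/stand-alone: {BEFORE_S / STANDALONE_S:.2f}x -> {AFTER_S / STANDALONE_S:.2f}x")
print(f"CP67 overhead cut by about {100 * reduction / overhead_before:.0f}%")
```

Read that way, running in the virtual machine went from roughly 2.66 times stand-alone to roughly 1.35 times, with about 79% of the CP67 overhead removed.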

I then redid DASD I/O. Originally it was FIFO queuing & a single 4k page transfer at a time. I redid all 2314 I/O to ordered seek queueing, and multiple 4k page transfers in a single I/O optimized for transfers per revolution (the paging "DRUM" was originally about 70 4k transfers/sec; I got it up to a peak around 270 ... nearly channel transfer capacity).
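
To illustrate why ordered seek queueing beats FIFO, here is a minimal sketch using a generic elevator (LOOK-style) ordering on a hypothetical queue of cylinder numbers, measuring total arm travel. It is not the CP/67 code, and it ignores rotational position and the chaining of multiple 4k pages per revolution that the paragraph also describes.

```python
# FIFO vs. ordered-seek (elevator/LOOK-style) queueing for one disk arm.
# Assumptions: cost = cylinders crossed, all requests already queued,
# hypothetical cylinder numbers; rotational position is ignored.


def arm_travel(start: int, order: list[int]) -> int:
    """Total cylinders crossed servicing requests in the given order."""
    travel, pos = 0, start
    for cyl in order:
        travel += abs(cyl - pos)
        pos = cyl
    return travel


def elevator_order(start: int, pending: list[int], upward: bool = True) -> list[int]:
    """Service requests in the current direction of arm travel, then sweep back."""
    ahead = sorted(c for c in pending if c >= start)
    behind = sorted((c for c in pending if c < start), reverse=True)
    return ahead + behind if upward else behind + ahead


queue = [98, 183, 37, 122, 14, 124, 65, 67]   # arrival (FIFO) order, hypothetical
start = 53
print("FIFO:    ", arm_travel(start, queue), "cylinders")
ordered = elevator_order(start, queue)
print("ordered: ", arm_travel(start, ordered), "cylinders via", ordered)
```

For this made-up queue, FIFO crosses 640 cylinders while the ordered sweep crosses 299; the saving grows as the queue gets deeper.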

a few posts mentioning undergraduate work

https://www.garlic.com/~lynn/2023e.html#64 Computing Career

https://www.garlic.com/~lynn/2023e.html#34 IBM 360/67

https://www.garlic.com/~lynn/2023e.html#29 Copyright Software

https://www.garlic.com/~lynn/2023e.html#12 Tymshare

https://www.garlic.com/~lynn/2023e.html#10 DASD, Channel and I/O long winded trivia

https://www.garlic.com/~lynn/2023d.html#106 DASD, Channel and I/O long winded trivia

https://www.garlic.com/~lynn/2021i.html#61 Virtual Machine Debugging


From: Lynn Wheeler <lynn@garlic.com> Subject: MOSAIC Date: 13 Oct, 2023 Blog: Facebook

I got brought into a small client/server startup as a consultant. Two former Oracle people (we had worked with on RDBMS cluster scale-up) were there, responsible for something they called "commerce server", and they wanted to do payment transactions. The startup had also invented something they called "SSL" that they wanted to use ... it is now frequently called "electronic commerce". I had responsibility for everything between webservers and the financial industry payment networks.

Somewhat because of having been involved in "electronic commerce", I was asked to participate in financial industry standards. The gov. agency up at Fort Meade also had participants (possibly because of crypto activities). My perception was that agency assurance and agency collections were on different sides ... most of the interaction was with assurance ... and they seemed to have battles over confusing authentication (financial wanted authenticated transactions) with identification. I won a number of battles for requiring high-integrity authentication w/o needing identification (arguing that requiring both for a transaction would violate some security principles).

note 28Mar1986 preliminary announce:

The OASC has initiated three programs: The Supercomputer Centers

Program to provide Supercomputer cycles; the New Technologies Program

to foster new supercomputer software and hardware developments; and

the Networking Program to build a National Supercomputer Access

Network - NSFnet.

... snip ...

with the National Center for Supercomputing Applications (NCSA) doing MOSAIC

http://www.ncsa.illinois.edu/enabling/mosaic

then some people spun off to silicon valley, and NCSA complained about the spin-off using the "MOSAIC" term; trivia: from what silicon valley company did they get the term "NETSCAPE"?

In the first few months of increasing NETSCAPE webserver load ... there was a big TCP/IP performance problem with platforms (using BSD TCP/IP) ... HTTP/HTTPS were supposedly atomic web transactions ... but used TCP sessions. The FINWAIT list of closing sessions was linearly scanned to see if there was a dangling packet (on the assumption that there would never be more than a few sessions on the FINWAIT list). However, with increasing HTTP/HTTPS load, there would be (tens of?) thousands, and webservers started spending 95% of the CPU running the FINWAIT list. NETSCAPE was increasingly adding SUN webservers as a countermeasure to the enormous CPU bottleneck. Finally they were replaced with a large SEQUENT multiprocessor, which had fixed the FINWAIT problem in DYNIX some time before. Eventually the other BSD-based server vendors shipped a FINWAIT "fix".
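
A toy model of the data-structure side of the FINWAIT problem, assuming each incoming packet has to be checked against the list of closing connections. A linear scan costs time proportional to the list length per packet, while a hash set answers the same question in roughly constant time. The connection keys and list sizes are hypothetical, and this is only a sketch of the contrast, not BSD or DYNIX source or the actual fix.

```python
# Toy model of the FINWAIT bottleneck: checking each incoming packet against
# the list of closing connections by linear scan costs O(n) per packet;
# keeping the same entries in a hash set makes the check ~O(1).
# Connection keys are hypothetical; this is not BSD or DYNIX source.

ConnKey = tuple[str, int, str, int]   # (src addr, src port, dst addr, dst port)


def linear_check(finwait: list[ConnKey], key: ConnKey) -> tuple[bool, int]:
    """Scan front to back; return (found, entries_examined)."""
    for examined, entry in enumerate(finwait, start=1):
        if entry == key:
            return True, examined
    return False, len(finwait)


def hashed_check(finwait: set[ConnKey], key: ConnKey) -> bool:
    """Same membership question answered with a hash lookup."""
    return key in finwait


def make_finwait(n: int) -> list[ConnKey]:
    """n hypothetical closing connections from distinct client ports."""
    return [("10.0.0.1", 1024 + i, "10.0.0.2", 80) for i in range(n)]


# A packet for a live (non-closing) connection still pays for the full scan.
live = ("10.0.0.9", 5555, "10.0.0.2", 80)
for n in (5, 1_000, 20_000):
    fw = make_finwait(n)
    found, examined = linear_check(fw, live)
    print(f"{n:6d} on FINWAIT list: linear examined {examined}, "
          f"hashed found={hashed_check(set(fw), live)}")
```

In this model, every packet that isn't for a closing connection examines the whole list, so per-packet cost climbs with the number of connections left closing, while the set lookup does not grow that way.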

internet payment gateway posts

https://www.garlic.com/~lynn/subnetwork.html#gateway

NSFNET posts

https://www.garlic.com/~lynn/subnetwork.html#nsfnet

internet posts

https://www.garlic.com/~lynn/subnetwork.html#internet

HA/CMP technical/scientific and commercial (RDBMS) cluster scale-up posts

https://www.garlic.com/~lynn/subtopic.html#hacmp

assurance posts

https://www.garlic.com/~lynn/subintegrity.html#assurance

authentication, identification, privacy posts

https://www.garlic.com/~lynn/subpubkey.html#privacy

posts mentioning mosaic, netscape & finwait

https://www.garlic.com/~lynn/2023.html#82 Memories of Mosaic

https://www.garlic.com/~lynn/2018f.html#102 Netscape: The Fire That Filled Silicon Valley's First Bubble

https://www.garlic.com/~lynn/2017c.html#54 The ICL 2900

https://www.garlic.com/~lynn/2015h.html#113 Is there a source for detailed, instruction-level performance info?

https://www.garlic.com/~lynn/2014g.html#13 Is it time for a revolution to replace TLS?

https://www.garlic.com/~lynn/2013i.html#46 OT: "Highway Patrol" back on TV

https://www.garlic.com/~lynn/2012d.html#20 Writing article on telework/telecommuting

https://www.garlic.com/~lynn/2005c.html#70 [Lit.] Buffer overruns

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Typing & Computer Literacy Date: 13 Oct, 2023 Blog: Facebook

In middle school, I taught myself to type on an old typewriter I found in the dump. In highschool, they were replacing the typewriters used for typing classes, and I managed to get one of the replaced machines (w/o letters on the keys). My father had died when I was in middle school and I was the oldest; I had jobs after school and on weekends, and during the winter I cut wood after supper and got up early to restart the fire. In highschool I worked for the local hardware store and would get loaned out to local contractors: concrete (driveways/foundations), framing, flooring, roofing, siding, electrical, plumbing, etc. I saved enough money to attend univ. The summer after freshman year I was foreman on a construction job; it was way behind schedule because of a really wet spring, and the job quickly became 80+ hr weeks.

I then took a two credit hr fortran/computer intro class ... at the end of the semester I was hired to rewrite 1401 MPIO for the 360/30. The univ. had been sold a 360/67 for tss/360 to replace its 709/1401. The 1401 was temporarily replaced with a 360/30 (pending arrival of the 360/67). The univ. shut down the datacenter over the weekend and I would have it dedicated all to myself (although 48hrs w/o sleep made monday classes hard). I was given lots of hardware&software manuals and got to design my own monitor, device drivers, interrupt handlers, error recovery, storage manager, etc ... and within a few weeks, I had a 2000 card assembler program. Then within a year of taking the intro course, the 360/67 arrived and I was hired fulltime, responsible for OS/360 (tss/360 never came to production fruition, so it ran as a 360/65 w/os360) ... and I continued to have my dedicated weekend datacenter time.

Then before I graduated, I was hired fulltime into a small group in the Boeing CFO office to help with the formation of Boeing Computer Services (consolidating all dataprocessing into an independent business unit to better monetize the investment). I thought the Renton datacenter was possibly the largest in the world: a couple hundred million in IBM gear, 360/65s arriving faster than they could be installed, boxes constantly being staged in hallways around the machine room. The disaster plan was to replicate Renton at the new 747 plant at Paine field (Mt. Rainier heats up and the resulting mud slide could take out the Renton datacenter). There was lots of politics between the Renton datacenter manager and the CFO, who only had a 360/30 up at Boeing field for payroll, although they enlarged the machine room to install a 360/67 that I could play with when I wasn't doing other things. When I graduated, I joined the IBM Cambridge Science Center (instead of staying at Boeing) ... which included getting a 2741 selectric at home.

In the late 70s and early 80s, I was blamed for online computer conferencing on the IBM internal network (larger than arpanet/internet from just about the beginning until sometime mid/late 80s). It really took off in spring 1981, when I distributed a trip report of a visit to Jim Gray at Tandem; claims were that upwards of 25,000 were reading even though only about 300 participated (& folklore is that when the corporate executive committee was told, 5of6 wanted to fire me).

a little topic drift: I was introduced to John Boyd in the early 80s and would sponsor his briefings. Tome about both Boyd and Learson (Learson failing to block the bureaucrats, careerists, and MBAs from destroying the Watson legacy; two decades later IBM has one of the largest losses in the history of US companies):

https://www.linkedin.com/pulse/john-boyd-ibm-wild-ducks-lynn-wheeler/

online computer conferencing posts

https://www.garlic.com/~lynn/subnetwork.html#cmc

internal network posts

https://www.garlic.com/~lynn/subnetwork.html#internalnet

ibm downfall, breakup, controlling market posts

https://www.garlic.com/~lynn/submisc.html#ibmdownfall

Some recent posts mentioning Boeing CFO, BCS, & Renton

https://www.garlic.com/~lynn/2023e.html#99 Mainframe Tapes

https://www.garlic.com/~lynn/2023e.html#64 Computing Career

https://www.garlic.com/~lynn/2023e.html#34 IBM 360/67

https://www.garlic.com/~lynn/2023e.html#11 Tymshare

https://www.garlic.com/~lynn/2023d.html#106 DASD, Channel and I/O long winded trivia

https://www.garlic.com/~lynn/2023d.html#101 Operating System/360

https://www.garlic.com/~lynn/2023d.html#88 545tech sq, 3rd, 4th, & 5th flrs

https://www.garlic.com/~lynn/2023d.html#83 Typing, Keyboards, Computers

https://www.garlic.com/~lynn/2023d.html#69 Fortran, IBM 1130

https://www.garlic.com/~lynn/2023d.html#66 IBM System/360, 1964

https://www.garlic.com/~lynn/2023d.html#32 IBM 370/195

https://www.garlic.com/~lynn/2023d.html#15 Boeing 747

https://www.garlic.com/~lynn/2023c.html#86 IBM Commission and Quota

https://www.garlic.com/~lynn/2023c.html#73 Dataprocessing 48hr shift

https://www.garlic.com/~lynn/2023c.html#68 VM/370 3270 Terminal

https://www.garlic.com/~lynn/2023c.html#66 Economic Mess and IBM Science Center

https://www.garlic.com/~lynn/2023c.html#15 IBM Downfall

https://www.garlic.com/~lynn/2023b.html#101 IBM Oxymoron

https://www.garlic.com/~lynn/2023b.html#91 360 Announce Stories

https://www.garlic.com/~lynn/2023b.html#75 IBM Mainframe

https://www.garlic.com/~lynn/2023.html#118 Google Tells Some Employees to Share Desks After Pushing Return-to-Office Plan

https://www.garlic.com/~lynn/2023.html#63 Boeing to deliver last 747, the plane that democratized flying

https://www.garlic.com/~lynn/2023.html#54 Classified Material and Security

--

From: Lynn Wheeler <lynn@garlic.com> Subject: I've Been Moved Date: 14 Oct, 2023 Blog: Facebook

I joined the ibm cambridge science center (from the west coast; before graduating I was working in a small group in the Boeing CFO office helping with the formation of Boeing Computer Services), and 7yrs later transferred back to the west coast at san jose research.

In the early 80s, the Los Gatos lab let me have offices and labs there. About the same time, I was transferred to Yorktown Research on the east coast (for various transgressions, including being blamed for online computer conferencing on the internal network; folklore is that when the corporate executive committee was told, 5of6 wanted to fire me), but was left to live in san jose (and kept my SJR and LSG space), although I had to commute to YKT a couple times a month. My SJR office was moved up the hill when the new Almaden research was built.

I then spent two yrs with AWD in Austin (gave up my ALM office, but kept my LSG space) and then moved back to San Jose and my LSG offices and labs.

In 1992 IBM had one of the largest losses in the history of US companies and was being reorged into the 13 baby blues in preparation for breaking up the company. I had already left the company (although LSG let me keep space for another couple yrs), but got a call from the bowels of Armonk about helping with the breakup (before we got started, a new CEO was brought in and sort-of reversed the breakup) ... longer tome:

https://www.linkedin.com/pulse/john-boyd-ibm-wild-ducks-lynn-wheeler/

ibm science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

online computer conferencing posts

https://www.garlic.com/~lynn/subnetwork.html#cmc

ibm downfall, breakup, controlling market posts

https://www.garlic.com/~lynn/submisc.html#ibmdownfall

--

From: Lynn Wheeler <lynn@garlic.com> Subject: Re: Video terminals Newsgroups: alt.folklore.computers Date: Sat, 14 Oct 2023 15:15:02 -1000

Peter Flass <peter_flass@yahoo.com> writes:

student fortran jobs tended to be 30-50 statements ... the OS/360 3step fortgclg was almost all job step overhead (sped up by carefully ordering datasets and PDS members), with a little bit of compiled output passed to the linkedit step, and the linkedit output passed to the execution step.

WATFOR was a single execution step (around 12secs of job step overhead before the speedup, 4secs after) for a card tray of batched jobs fed from HASP (say 40-70 jobs) ... which were handled as a stream ... WATFOR was clocked/rated at 20,000 cards/min on a 360/65 (w/HASP), or 333 cards/sec. So 4secs of single jobstep overhead plus 2000/333, around 6-7secs, for a tray of cards ... or a total of around 10-11secs avg for a batched tray of student fortran (40-70 jobs).
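The throughput arithmetic above can be checked with a small Python model. It uses only the figures from the post (20,000 cards/min, a 2000-card tray, 4secs or 12secs of job step overhead); the function names are hypothetical, and it assumes WATFOR processes the whole tray at its full rated speed with no other delays, so it lands at the low end of the post's 6-7secs and 10-11secs estimates.

```python
# Back-of-envelope model of one WATFOR batch, using figures from the post.
# Assumption: the tray is processed at the full rated speed, no other delays.
CARDS_PER_MIN = 20_000          # WATFOR rating on a 360/65 w/HASP
CARDS_PER_TRAY = 2_000          # the "2000" in 2000/333
JOBSTEP_OVERHEAD_AFTER = 4.0    # secs of job step overhead after the speedup
JOBSTEP_OVERHEAD_BEFORE = 12.0  # secs of job step overhead before the speedup


def tray_seconds(cards: int = CARDS_PER_TRAY, cards_per_min: int = CARDS_PER_MIN) -> float:
    """Time for WATFOR to process a tray of cards at its rated speed."""
    return cards * 60 / cards_per_min


def batch_seconds(overhead: float, cards: int = CARDS_PER_TRAY) -> float:
    """Single job step overhead plus WATFOR processing of the whole tray."""
    return overhead + tray_seconds(cards)


def per_job_seconds(total: float, jobs: int) -> float:
    """Average elapsed time per student job in the batch."""
    return total / jobs


if __name__ == "__main__":
    after = batch_seconds(JOBSTEP_OVERHEAD_AFTER)
    print(f"tray at rated speed: {tray_seconds():.1f}s")  # 6.0s
    print(f"batch after speedup: {after:.1f}s")  # 10.0s
    print(f"batch before speedup: {batch_seconds(JOBSTEP_OVERHEAD_BEFORE):.1f}s")  # 18.0s
    for jobs in (40, 70):
        # 0.25s per job for 40 jobs, 0.14s per job for 70 jobs
        print(f"{jobs} jobs: {per_job_seconds(after, jobs):.2f}s per job")
```

The 18secs "before speedup" total is just the same arithmetic with 12secs of overhead; the post itself only gives the after-speedup total.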

other recent posts mentioning WATFOR

https://www.garlic.com/~lynn/2023e.html#88 CP/67, VM/370, VM/SP, VM/XA

https://www.garlic.com/~lynn/2023e.html#64 Computing Career

https://www.garlic.com/~lynn/2023e.html#54 VM370/CMS Shared Segments

https://www.garlic.com/~lynn/2023e.html#34 IBM 360/67

https://www.garlic.com/~lynn/2023e.html#29 Copyright Software

https://www.garlic.com/~lynn/2023e.html#12 Tymshare

https://www.garlic.com/~lynn/2023e.html#10 DASD, Channel and I/O long winded trivia

https://www.garlic.com/~lynn/2023e.html#7 HASP, JES, MVT, 370 Virtual Memory, VS2

--

From: Lynn Wheeler <lynn@garlic.com> Subject: We have entered a second Gilded Age Date: 15 Oct, 2023 Blog: Facebook

Robert Reich: Vast inequality. Billionaires buying off Supreme Court justices. Rampant voter suppression. Worker exploitation. Unions getting busted. Child labor returning. There is no question about it: We have entered a second Gilded Age.

The Price of Inequality: How Today's Divided Society Endangers Our Future

https://www.amazon.com/Price-Inequality-Divided-Society-Endangers-ebook/dp/B007MKCQ30/

pg35/loc1169-73:

In business school we teach students how to recognize, and create, barriers to competition -- including barriers to entry -- that help ensure that profits won't be eroded. Indeed, as we shall shortly see, some of the most important innovations in business in the last three decades have centered not on making the economy more efficient but on how better to ensure monopoly power or how better to circumvent government regulations intended to align social returns and private rewards

... snip ...

How Economists Turned Corporations into Predators

https://www.nakedcapitalism.com/2017/10/economists-turned-corporations-predators.html

Since the 1980s, business schools have touted "agency theory," a controversial set of ideas meant to explain how corporations best operate. Proponents say that you run a business with the goal of channeling money to shareholders instead of, say, creating great products or making any efforts at socially responsible actions such as taking account of climate change.

... snip ...

stock buybacks used to be illegal (because it was too easy for executives to manipulate the market ... aka banned in the wake of the '29 crash)

https://corpgov.law.harvard.edu/2020/10/23/the-dangers-of-buybacks-mitigating-common-pitfalls/

Buybacks are a fairly new phenomenon and have been gaining in popularity relative to dividends recently. All but banned in the US during the 1930s, buybacks were seen as a form of market manipulation. Buybacks were largely illegal until 1982, when the SEC adopted Rule 10B-18 (the safe-harbor provision) under the Reagan