From: lynn@garlic.com
Newsgroups: alt.folklore.computers
Date: Sun, 17 Aug 2008 11:23:54 -0700 (PDT)
Subject: Re: Fraud due to stupid failure to test for negative

not transit specific ... but how payment things can go wrong ... lots

of past posts referencing YES CARD vulnerability:

https://www.garlic.com/~lynn/subintegrity.html#yescard

it was chip card solution effort started in the mid-90s (about the same time we got involved in x9a10 financial standard working group) and we've characterized the effort as being focused on countermeasure to lost/stolen magstripe cards.

the issue was that by the mid-90s, additional kinds of fraud had also become quite common place.

in the x9a10 financial standard working group scenario ... it had been given the requirement to preserve the integrity of the financial infrastructure for all retail payments ... aka ALL, as in ALL (not just point-of-sale, not just internet, not just face-to-face, not just credit, not just debit, not just stored-value, etc). as a result, x9a10 working group had to do detailed end-to-end threat and vulnerability studies of multiple different kinds of retail payments ... and come up with a solution that addressed everything (and also be superfast and super inexpensive ... w/o sacrificing security and integrity).

with the intense myopic concentration on chipcard as solution to lost/stolen magstripe card vulnerability ... it appeared to lead to situation where the rest of the infrastructure was made more vulnerable. in fact early part of this decade, at an atm industry presentation on YES CARD fraud ... as it started to dawn on the audience the actual circumstance ... there was a spontaneous outburst from somebody in the audience ... "do you mean that they managed to spend billions of dollars to prove that chipcards are less secure than magstripe cards"

in that timeframe there was also a large pilot deployment in the states with a million or so cards. when the YES CARD scenario was explained to people doing the deployment ... the reaction was to make configuration changes in the issuing process of valid cards .... which actually had absolutely no effect on the YES CARD fraud ... which was basically a new kind of point-of-sale terminal vulnerability that had been created as a side-effect of the chipcard specification ... and involved counterfeit cards (not valid, issued cards).
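a rough sketch of why reconfiguring valid cards could not help. it assumes, as the YES CARD is usually described, a terminal that checks static card data and then simply trusts the chip's own answers about the PIN and offline approval. none of that detail is spelled out in this post, and every class and function name below is hypothetical, for illustration only (not any real terminal or card API):

```python
from dataclasses import dataclass


@dataclass
class IssuedCard:
    """A valid, issued card: it really checks the PIN and an offline limit."""
    static_data: str
    pin: str
    offline_limit: int

    def pin_ok(self, entered_pin: str) -> bool:
        return entered_pin == self.pin

    def approve_offline(self, amount: int) -> bool:
        return amount <= self.offline_limit


@dataclass
class CounterfeitYesCard:
    """Counterfeit chip carrying copied static data; answers yes to everything."""
    static_data: str

    def pin_ok(self, entered_pin: str) -> bool:
        return True

    def approve_offline(self, amount: int) -> bool:
        return True


def terminal_accepts(card, entered_pin: str, amount: int, valid_static_data: set) -> bool:
    """Hypothetical terminal logic: validate static data, then trust the card.

    valid_static_data is a stand-in for whatever static check the terminal
    performs; copied static data passes it by construction.
    """
    if card.static_data not in valid_static_data:
        return False
    return card.pin_ok(entered_pin) and card.approve_offline(amount)
```

in this sketch, tightening `offline_limit` or any other setting on `IssuedCard` changes nothing for `CounterfeitYesCard`, since the terminal never consults the issued card's configuration when a counterfeit is presented.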

misc. past posts mentioning the x9.59 financial transaction standard

(that was product of the x9a10 financial standard working group)

https://www.garlic.com/~lynn/x959.html#x959

some number of other URLs referencing the boston transit:

MIT case shows folly of suing security researchers

http://searchsecurity.techtarget.com/news/column/0,294698,sid14_gci1325406,00.html

Massachusetts: MIT students deserve 'no First Amendment protection'

http://news.cnet.com/8301-1009_3-10017438-83.html?hhTest=1

MIT Subway Hack Paper Published on the Web

http://www.pcmag.com/article2/0%2c2817%2c2327898%2c00.asp

Judge refuses to lift gag order on MIT students in Boston subway-hack case

http://www.computerworld.com/action/article.do?command=viewArticleBasic&articleId=9112641

MIT Presentation on Subway Hack Leaks Out

http://www.darkreading.com/document.asp?doc_id=161424

Exploits & Vulnerabilities: Subway Hack Gets 'A' From Professor, TRO From Judge

http://www.technewsworld.com/story/64118.html?welcome=1218494580

--

40+yrs virtualization experience (since Jan68), online at home since Mar70

From: lynn@garlic.com
Newsgroups: alt.folklore.computers
Date: Mon, 18 Aug 2008 07:58:51 -0700 (PDT)
Subject: Re: Yet another squirrel question - Results (very very long post)

On Aug 18, 9:51 am, Quadibloc wrote:

lots of past posts about unbundling

https://www.garlic.com/~lynn/submain.html#unbundle

one of the unbundling issues was software engineering services ... before unbundling ... groups of SEs would work at the customer site ... and brand new SEs would effectively get apprentice training as part of such a team. After unbundling ... all the SE time spent at the customer had to be charged for ... and nobody came up with a good mechanism for charging for SEs in training.

This was what spawned the original idea for HONE (Hands-On Network Environment)

... basically some number of (virtual machine) CP67

datacenters around the country providing SEs with hands-on operating

system experience (dos, mft, mvt, etc). lots of past HONE postings

https://www.garlic.com/~lynn/subtopic.html#hone

i've mentioned recently that as undergraduate in the 60s, i was also

involved in doing a mainframe clone controller ...

https://www.garlic.com/~lynn/2008l.html#23 Memories of ACC, IBM Channels and Mainframe Internet Devices

and a write-up listing us as cause of the clone-controller (or pcm,

plug-compatible) business.

https://www.garlic.com/~lynn/submain.html#360pcm

The 360 pcm/clone controller business was a major motivation behind

the future system effort

https://www.garlic.com/~lynn/submain.html#futuresys

however, the distraction of the future system business then

helped/allowed some number of plug-compatible computers (as opposed to

controllers) to gain a market foot-hold. After future system effort

was killed, there was a mad rush to get stuff back into the 370

product pipeline and also figure out how to deal with the

plug-compatible computers. Part of this was decision to start charging

for kernel software (reverse earlier justification to not unbundle

kernel software). Recent reference to talk that Amdahl gave at MIT in

the early 70s about his justification (for plug-compatible mainframe

company) that was used with the VCs/investors:

https://www.garlic.com/~lynn/2008g.html#54 performance of hardware dynamic scheduling

As i've recently mentioned, the mad rush to get stuff back into the

370 product pipeline ... appeared to contribute to picking up a lot of

370 stuff i'd been doing for "CSC/VM" (all during the future system

period).

https://www.garlic.com/~lynn/2008l.html#72 Error handling for system calls

https://www.garlic.com/~lynn/2008l.html#82 Yet another squirrel question - Results (very very long post)

https://www.garlic.com/~lynn/2008l.html#85 old 370 info

and releasing as products. Part of the "CSC/VM" work was the "resource manager" which was packaged for release as a separate kernel product ... and it was also selected to be the guinea pig for change (in policy) to start charging for kernel software (effectively in reaction to the plug compatible processors that got a foot-hold in the market during the period of the future system distraction)

as an aside ... a lot of what was in the "resource manager", i had

earlier done as undergraduate in the 60s and had been released as part

of cp67 ... but was dropped in the morph of cp67 to vm370. misc.

past posts mentioning scheduler (major component of the resource

manager)

https://www.garlic.com/~lynn/subtopic.html#fairshare


From: Anne & Lynn Wheeler <lynn@garlic.com>
Subject: Re: Yet another squirrel question - Results (very very long post)
Newsgroups: alt.folklore.computers
Date: Tue, 19 Aug 2008 08:03:08 -0400

lynn writes:

a lot of the resource manager was actually stuff that i had done as undergraduate in the 60s on cp67 and released in that product ... but dropped in the morph from cp67 to vm370 ... which included some amount of simplification. For instance, the morph from cp67 to vm370 also dropped much of the fastpath stuff I had done in cp67 (especially in the interrupt handlers). One of the first things I had done (once the science center had gotten a 370) was to re-implement a lot of fastpath stuff in vm370. That actually had been incorporated and shipped in something like release 1plc9 (i.e. "PLCs" were monthly updates ... plc9, would have been the 9th monthly update to the initial vm370 release).

one of the other things that i got roped into ... besides

some of the stuff mentioned in this recent post

https://www.garlic.com/~lynn/2008l.html#83 old 370 info

https://www.garlic.com/~lynn/2008l.html#85 old 370 info

was a 5-way SMP project, code named VAMPS ... which was canceled

before it shipped ... misc. past posts

https://www.garlic.com/~lynn/submain.html#bounce

the basic design was then picked up when it was decided to release SMP

support in the standard vm370 product. A problem was that the resource

manager had already been shipped as guinea pig for charging for kernel

software. As part of that activity, i got to spend a lot of time with

contracts and legal people working on policies for kernel software

charging. The "initial" pass (charging for kernel software) was that

kernel software directly related to hardware operation would still be

free (device drivers, smp support, etc) ... but other stuff could be

charged for. misc. past posts mentioning unbundling and/or my resource

manager being the guinea pig for change to start charging for kernel

software

https://www.garlic.com/~lynn/submain.html#unbundle

The already shipped resource manager didn't directly contain any SMP support ... but it did have some amount of kernel reorg and facilities that the SMP design was dependent on. When it came time to ship the SMP code ... it created something of a dilemma ... since it would violate policy to require the customer to purchase the "resource manager" in order for (the free) multiprocessing support to work. The dilemma was resolved by moving all the dependent code out of the resource manager and into the free kernel base (which was 80-90 percent of the actual lines of code in the initial/original resource manager release).

past posts mentioning SMP (and/or charlie inventing the compare&swap

instruction)

https://www.garlic.com/~lynn/subtopic.html#smp

other past posts in this thread:

https://www.garlic.com/~lynn/2008l.html#78 Yet another squirrel question - Results (very very long post)

https://www.garlic.com/~lynn/2008l.html#84 Yet another squirrel question - Results (very very long post)

https://www.garlic.com/~lynn/2008l.html#86 Yet another squirrel question - Results (very very long post)

https://www.garlic.com/~lynn/2008l.html#87 Yet another squirrel question - Results (very very long post)


From: Anne & Lynn Wheeler <lynn@garlic.com>
Subject: Re: Medical care
Newsgroups: alt.folklore.computers
Date: Tue, 19 Aug 2008 08:58:53 -0400

Lars Poulsen <lars@beagle-ears.com> writes:

The other issue is that Medicare reimbursements are typically actually below cost of services .... forcing establishments to subsidize Medicare patients from other income sources ... or refusing to take Medicare patients.

There have been some number of articles that one of the Japanese motivations for work on robots ... is to fill the gap in providing services to the geriatric generation.

past posts mentioning baby boomer retirement

https://www.garlic.com/~lynn/2008b.html#3 on-demand computing

https://www.garlic.com/~lynn/2008c.html#16 Toyota Sales for 2007 May Surpass GM

https://www.garlic.com/~lynn/2008c.html#69 Toyota Beats GM in Global Production

https://www.garlic.com/~lynn/2008f.html#99 The Workplace War for Age and Talent

https://www.garlic.com/~lynn/2008g.html#1 The Workplace War for Age and Talent

https://www.garlic.com/~lynn/2008g.html#50 CA ESD files Options

https://www.garlic.com/~lynn/2008h.html#3 America's Prophet of Fiscal Doom

https://www.garlic.com/~lynn/2008h.html#11 The Return of Ada

https://www.garlic.com/~lynn/2008h.html#26 The Return of Ada

https://www.garlic.com/~lynn/2008h.html#57 our Barb: WWII

https://www.garlic.com/~lynn/2008i.html#56 The Price Of Oil --- going beyong US$130 a barrel

https://www.garlic.com/~lynn/2008i.html#98 dollar coins

https://www.garlic.com/~lynn/2008j.html#80 dollar coins

https://www.garlic.com/~lynn/2008k.html#5 Republican accomplishments and Hoover

https://www.garlic.com/~lynn/2008l.html#37 dollar coins


From: Anne & Lynn Wheeler <lynn@garlic.com>
Subject: Re: Fraud due to stupid failure to test for negative
Newsgroups: alt.folklore.computers
Date: Tue, 19 Aug 2008 09:46:02 -0400

Charlton Wilbur <cwilbur@chromatico.net> writes:

it typically is only in changing environment ... where there is a higher premium placed on actually being able to solve problems ... than being able to get along & support the other members.

for other drift ... a frequent example used was great britain appointing lords as military leaders going into WW1.

there has been some suggestion that natural selection similarly contributes to the distribution of "myers-briggs" personality types ... that relatively static environments tend to favor the "social member" types ... as opposed to the "solve problem" types (which are frequently also labeled "independent" ... another indication of where society places its value)

there then can be discontinuities ... when new problems actually need to be solved ... and frequently the knee-jerk response is to blame the ones that actually exposed the problems (as opposed to the bureaucracy responsible for the problems). There is a little of the emperor's new clothes parable in this.

for other drift, Boyd saw a huge amount of this in attempting to address

problems in large military bureaucracy ... misc. past posts mentioning

Boyd (and/or OODA-loops)

https://www.garlic.com/~lynn/subboyd.html

and for more Boyd topic drift, there was recent note that Boyd's

strategy and OODA-loops is now cornerstone of this executive MBA program

http://www.familybusinessmba.kennesaw.edu/home


From: Anne & Lynn Wheeler <lynn@garlic.com>
Subject: Re: Fraud due to stupid failure to test for negative
Newsgroups: alt.folklore.computers
Date: Tue, 19 Aug 2008 14:54:08 -0400

lynn writes:

a post from more than two years ago discussing (new) appearance of

flaws and vulnerabilities

https://www.garlic.com/~lynn/2006l.html#33

including this reference to trials held in 1997

http://www-03.ibm.com/industries/financialservices/doc/content/solution/1026217103.html

and reports of flaws, exploits, and vulnerabilities started to appear within a year or so of the trials (aka decade ago).

now come reports that flaws and vulnerabilities are a brand new discovery

Criminal gangs in new Chip and Pin fraud

http://www.workplacelaw.net/news/display/id/16140

Chip and pin fraud could hit city stores

http://www.walesonline.co.uk/news/wales-news/2008/08/15/chip-and-pin-fraud-could-hit-city-stores-91466-21537769/

Probe uncovers first chip-and-pin card fraud

http://www.financialdirector.co.uk/accountancyage/news/2224006/probe-uncovers-first-chip-pin

Chip And Pin Fraud On The Increase

http://financialadvice.co.uk/news/2/creditcards/7542/Chip-And-Pin-Fraud-On-The-Increase.html

Fraudsters hijacking Chip and Pin

http://www.metro.co.uk/news/article.html?in_article_id=263563&in_page_id=34

Police warn of new chip-and-pin fraud

http://www.financemarkets.co.uk/2008/08/13/police-warn-of-new-chip-and-pin-fraud/

Gangs develop new chip-and-pin fraud

http://business.timesonline.co.uk/tol/business/industry_sectors/technology/article4525429.ece

Criminals Crack Chip-and-Pin Technology Wide Open

http://security.itproportal.com/articles/2008/08/14/criminals-crack-chip-and-pin-technology-wide-open/

Fraudsters have hijacked Chip and PIN

http://security.itproportal.com/articles/2008/08/14/fraudsters-have-hijacked-chip-and-pin/

Police warn of security threat to every chip-and-Pin terminal

http://www.computerweekly.com/Articles/2008/08/18/231841/police-warn-of-security-threat-to-every-chip-and-pin.htm

Police Warns About Chip and Pin Shortcomings While More Scam Suspects

Arrested

http://security.itproportal.com/articles/2008/08/19/police-warns-about-chip-and-pin-shortcomings-while-more-scam-suspects-arrested/

Major bank card scam uncovered

http://www.irishtimes.com/newspaper/breaking/2008/0818/breaking84.htm

Chip and Pin protection cracked like a rotten foreign egg

http://www.itwire.com/content/view/20035/53/

Gangs have cracked Chip and PIN cards, say police

http://www.computerweekly.com/Articles/2008/08/13/231816/gangs-have-cracked-chip-and-pin-cards-say-police.htm

Chip and PIN gang busted by specialist police unit

http://www.theinquirer.net/gb/inquirer/news/2008/08/14/chip-pin-gang-busted-police

New chip-and-pin danger

http://www.qas.co.uk/company/data-quality-news/new_chip_and_pin_danger_2574.htm

Credit card code? What code?

http://www.latimes.com/business/investing/la-tr-insider17-2008aug17,0,6886084.story

Analysis: The rise (and fall) of Chip and PIN

http://www.itpro.co.uk/605568/analysis-the-rise-and-fall-of-chip-and-pin

Warning as gang clone bank cards

http://ukpress.google.com/article/ALeqM5jZIV6H0MsQgs6-4vl4_tATlpXn_g


From: Anne & Lynn Wheeler <lynn@garlic.com>
Subject: Re: Yet another squirrel question - Results (very very long post)
Newsgroups: alt.folklore.computers
Date: Wed, 20 Aug 2008 08:47:00 -0400

Roland Hutchinson <my.spamtrap@verizon.net> writes:

somewhat in conjunction with talking to the people at NLM (middle of

last decade) ... we also dropped by people at lane medical library a

couple of times ... there was small play related to superman. this

timeline has it going online in '87.

http://lane.stanford.edu/portals/history/chronlane.html

and

http://lane.stanford.edu/100years/history.html

from above ... related to NLM (as opposed to LOIS)

In the 1950s and 1960s, Lane's one and only reference librarian (Anna

Hoen) spent her mornings scanning new journal arrivals and telephoning

individual faculty to help them stay abreast of the current literature.

In 1971, Lane joined a handful of experimental libraries to use AIM-TWX,

the first computerized search protocol for Index Medicus (the precursor

to MEDLINE). With the web revolution in the 1990s, Lane rapidly expanded

its online journal subscriptions and provided access for physicians and

students.

... snip ...

a couple weeks ago we got a tour of LOC ... including going into the (physical) card catalog (1980 and earlier)

http://www.loc.gov/rr/main/inforeas/card.html

from above:

The Main Card Catalog, located adjacent to the Main Reading Room on the

first floor of the Jefferson Building, contains subject, author, title,

and some other cards for most books cataloged by the Library through

1980 (1978 for subject cards). Each work cataloged is represented by a

card or set of cards showing the name of the author, the title of the

book, the place of publication, the publisher, and the date of

publication. This information is followed by the number of pages or

volumes, a brief description of the illustrative material, and the

height in centimeters. If the book is part of a series, the name of the

series is shown in parentheses after the size. A call number, consisting

of a combination of letters and numbers, appears in the upper left-hand

corner of the card and/or is printed in the lower portion of the card.

... snip ...


From: Anne & Lynn Wheeler <lynn@garlic.com>
Subject: Re: Future architectures
Newsgroups: alt.comp.hardware.pc-homebuilt,comp.arch,sci.electronics.design
Date: Wed, 20 Aug 2008 09:15:47 -0400

nmm1@cus.cam.ac.uk (Nick Maclaren) writes:

some of the people from ctss had gone to the science center on the 4th flr ... and some went to multics on the 5th flr.

science center had done virtual machine implementation in the mid-60s. original was cp40 ... running on a modified 360/40 with address relocation hardware ... and morphed into cp67 when 360/67 (with standard address relocation hardware) became available.

as undergraduate in the late 60s, i rewrote much of cp67 code ... including the virtual memory management and things like page replacement (including creating a global LRU page replacement ... when much of the academic efforts of the period were directed at local LRU page replacement).

this showed up later in the early 80s ... when one of Jim's co-workers

at Tandem had done his stanford phd thesis on page replacement

algorithms (very similar to what i had done as undergraduate in the late

60s) and there was enormous pressure not to grant a phd on something

that wasn't local LRU ... old communication

https://www.garlic.com/~lynn/2006w.html#email821019

in this post

https://www.garlic.com/~lynn/2006w.html#46
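to make the global vs. local distinction concrete, here is a minimal sketch that counts page faults under each policy. it uses exact LRU lists for simplicity and is not the cp67 code; the function names, the reference-string format and the fixed per-process allocations are all assumptions made for the example:

```python
from collections import OrderedDict


def faults_global_lru(refs, total_frames):
    """Count faults with one LRU list shared by all processes.

    refs is a list of (process, page) references.
    """
    lru = OrderedDict()              # key: (process, page), oldest first
    faults = 0
    for key in refs:
        if key in lru:
            lru.move_to_end(key)     # mark as most recently used
            continue
        faults += 1
        if len(lru) >= total_frames:
            lru.popitem(last=False)  # evict the least recently used page overall
        lru[key] = True
    return faults


def faults_local_lru(refs, frames_per_process):
    """Count faults when each process replaces only within its own fixed allocation."""
    per_process = {}
    faults = 0
    for process, page in refs:
        lru = per_process.setdefault(process, OrderedDict())
        if page in lru:
            lru.move_to_end(page)
            continue
        faults += 1
        if len(lru) >= frames_per_process[process]:
            lru.popitem(last=False)  # evict this process's own oldest page
        lru[page] = True
    return faults


# Process A cycles over three pages, process B keeps touching one page.
refs = [("A", 0), ("B", 0), ("A", 1), ("B", 0), ("A", 2), ("B", 0)] * 3
print(faults_global_lru(refs, total_frames=4))
print(faults_local_lru(refs, frames_per_process={"A": 2, "B": 2}))
```

with the same four frames in total, the shared list lets A use the frame B does not need, while the fixed 2/2 split makes A fault on every reference. this is one contrived workload, not a general claim about either policy.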

a lot of the work that i had done as undergraduate in the 60s (that had been picked up and shipped in the cp67 product) ... was dropped in the simplification morph of cp67 (from 360/67) to vm370 (when general availability of address relocation was announced for 370 computers, i.e. 360/67 was only 360 model that had address relocation as standard feature).

for other drift ... a recent folklore post about that period (mostly

related to unbundling announcement and starting to charge for software)

https://www.garlic.com/~lynn/2008m.html#1

https://www.garlic.com/~lynn/2008m.html#2

for other folklore ... the announcement that all 370s would ship with virtual memory support ... required that all the other operating systems had to now add support for address relocation. one of the big issues was the heritage of application programs creating (i/o) channel programs and passing them to the supervisor for initiation/execution. While instruction addresses went through address relocation ... i/o channel programs didn't ... they continued to be "real". This created a disconnect ... since application programs (running in virtual address mode) would now be creating the channel programs with virtual addresses. This required the supervisor to create a copy of the passed i/o channel programs (created by applications) and substituting real addresses for the virtual addresses.

CP67 had this kind of translation mechanism from the very beginning ...

since it had to take the I/O channel programs created in the virtual

machines ... make a copy ... converting all the virtual machine "virtual"

addresses into real addresses. The initial transition of the flagship

batch operating system (MVT) to virtual memory operation ... involved

some simple stub code in MVT ... giving it a single large virtual

address space (majority of code continued to run as if it was on real

machine that had real storage equivalent to large address space) and

crafting "CCWTRANS" (from cp67) into the i/o supervisor (for making the

copies of application i/o channel programs, substituting real addresses

for virtual). some recent posts mentioning "CCWTRANS"

https://www.garlic.com/~lynn/2008g.html#45 authoritative IEFBR14 reference

https://www.garlic.com/~lynn/2008i.html#68 EXCP access methos

https://www.garlic.com/~lynn/2008i.html#69 EXCP access methos
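the copy-and-substitute step described above can be sketched roughly as follows. this is only an illustration of the idea, not CCWTRANS itself: the CCW fields are reduced to a command name, a data address and a byte count, the 4096-byte page size and the dictionary page table are assumptions, and buffers that cross a page boundary are rejected rather than handled.

```python
from dataclasses import dataclass, replace

PAGE_SIZE = 4096  # assumed page size for this sketch


@dataclass(frozen=True)
class CCW:
    """Simplified channel command word (hypothetical layout)."""
    command: str
    address: int   # data address: virtual on input, real in the copy
    count: int     # byte count


def translate_channel_program(program, page_table):
    """Return a copy of the channel program with real data addresses.

    page_table maps virtual page number -> real page frame number.
    The caller's program is left untouched; the channel runs the copy.
    """
    shadow = []
    for ccw in program:
        vpage, offset = divmod(ccw.address, PAGE_SIZE)
        if offset + ccw.count > PAGE_SIZE:
            raise ValueError("buffer crosses a page boundary; not handled in this sketch")
        if vpage not in page_table:
            raise LookupError(f"virtual page {vpage} is not resident")
        real_address = page_table[vpage] * PAGE_SIZE + offset
        shadow.append(replace(ccw, address=real_address))
    return shadow


program = [CCW("READ", 0x1010, 80), CCW("WRITE", 0x2000, 4096)]
shadow = translate_channel_program(program, page_table={1: 7, 2: 3})
```

the original program keeps its virtual addresses, which matters because the application may inspect or reuse it after the i/o completes.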


From: Anne & Lynn Wheeler <lynn@garlic.com>
Subject: Re: Fraud due to stupid failure to test for negative
Newsgroups: alt.folklore.computers
Date: Wed, 20 Aug 2008 09:34:30 -0400

"Joe Morris" <j.c.morris@verizon.net> writes:

what is black hat and what is defcon can get quite blurred ... since they are held in conjunction.

black hat, las vegas, 2-7aug

http://www.blackhat.com/

defcon, las vegas, 8-10aug

http://www.defcon.org/

picture shows DEFCON

Federal Judge Throws Out Gag Order Against Boston Students in Subway Case

http://blog.wired.com/27bstroke6/2008/08/federal-judge-t.html

this talks about DNS exploit:

Black Hat 2008 Aftermath

http://www.law.com/jsp/legaltechnology/pubArticleLT.jsp?id=1202423911432


From: Anne & Lynn Wheeler <lynn@garlic.com>
Subject: Re: Unbelievable Patent for JCL
Newsgroups: bit.listserv.ibm-main
Date: Wed, 20 Aug 2008 11:37:04 -0400

howard.brazee@CUSYS.EDU (Howard Brazee) writes:

I've seen some past references to bayesian cluster analysis of patent applications ... that found possibly 30 percent of computer &/or software related patents filed in other categories.


From: Anne & Lynn Wheeler <lynn@garlic.com>
Subject: Re: Unbelievable Patent for JCL
Newsgroups: bit.listserv.ibm-main
Date: Wed, 20 Aug 2008 13:25:27 -0400

John.Mckown@HEALTHMARKETS.COM (McKown, John) writes:

I continued to do 360/370 (cp67 & vm370) stuff during the future system

era ... recent discussion of the period related to unbundling:

https://www.garlic.com/~lynn/2008m.html#1

https://www.garlic.com/~lynn/2008m.html#2

after future system effort was killed ... misc. past post

https://www.garlic.com/~lynn/submain.html#futuresys

there was a mad rush to get stuff back into the 370 product pipeline (both software & hardware) ... and this was somewhat behind motivation to (re)releasing the stuff as "resource manager". Also, the distraction during the future system period is claimed to have significantly contributed to clone processors gaining market foothold. The original 23jun69 unbundling (response to various litigation) managed to make the case that kernel software should still be free. However, the appearance of clone processors appeared to motivate change in policy and start to also charge for kernel software ... and my "resource manager" got selected to be guinea pig for kernel software charging.

I also got told by people from corporate hdqtrs that my resource manager wasn't sophisticated enough ... that all the other resource managers in that era had lots of (manual) "tuning knobs" ... and my resource manager was deficient in the number of such "tuning knobs". It fell on deaf ears that the resource manager implemented its own dynamic adaptive scheduling ... and therefore didn't require all those manual tuning knobs ... and so I had to retrofit (at least the appearance) of some number of manual tuning knobs to get it by the corporate hdqtrs experts.
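The post gives no detail on how the resource manager's adaptive scheduling worked, so the following is only a generic fair-share feedback loop, offered to illustrate what "adaptive instead of knobs" can mean: the dispatch decision is derived from measured consumption relative to entitlement, with nothing for an operator to tune. The function names, the share table and the always-runnable workload flags are all hypothetical.

```python
def pick_next(usage, shares):
    """Pick the runnable user furthest below its entitled share of CPU."""
    return min(usage, key=lambda user: usage[user] / shares[user])


def run_scheduler(shares, wants_cpu, slices):
    """Simulate time slices and return how many each user received.

    shares:    user -> relative entitlement
    wants_cpu: user -> True if the user is always runnable (toy workload)
    """
    usage = {user: 0 for user in shares}      # measured consumption so far
    for _ in range(slices):
        runnable = {u: usage[u] for u in shares if wants_cpu[u]}
        if not runnable:
            continue                          # idle slice
        user = pick_next(runnable, shares)
        usage[user] += 1                      # feedback: consumption lowers priority
    return usage


print(run_scheduler({"a": 2, "b": 1}, {"a": True, "b": True}, slices=300))
print(run_scheduler({"a": 1, "b": 1}, {"a": True, "b": False}, slices=10))
```

When both users are busy, the slices settle at the 2:1 entitlement; when one user goes idle, the other absorbs the whole machine without any parameter being changed.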

Nearly a decade later (and nearly two decades after I had done the original work as an undergraduate for cp67), some corporate lawyers contacted me for examples of my original work. It supposedly represented "prior art" in some (scheduling) patent litigation that was going on at the time.


From: Anne & Lynn Wheeler <lynn@garlic.com>
Subject: Re: Yet another squirrel question - Results (very very long post)
Newsgroups: alt.folklore.computers
Date: Wed, 20 Aug 2008 21:16:48 -0400

Rich Alderson <news@alderson.users.panix.com> writes:

from above (survey extract):

Stanford

Name    CPU       Mips      Memory   Disk      Total   Concurrent
                            (megs)   (megs)    Users   Users
SAIL    KL10(2)   3.6       10       1600      230     70
SCORE   20/60     2.0       4        400       230     55
VAX1    11/780    1.1       4        400       ?       small
VAX2    11/780    1.1       2        200       ?       small
IBM     4331      0.5       4        8-3310s   30      8
(16)    Alto      0.3/4.8   0.25/4   2/32      16      16

... snip ...

the 4331 was part of a joint study with PASC and only in use by people involved in the study.

the previous posting listed tables of machines at the mentioned

institutions (from the survey). the survey also included descriptions of

some number of other institutions ... including xerox sdd ... from that

survey:

They have more machines than people. There are 300 machines for 200

employees. At least five of the machines are DORADOs (3 mips); the rest

are a mixture of ALTOs, D machines, and Stars. Everyone has at least an

ALTO in his office. All the machines are tied together with a 10

megabit Ethernet. On the net there are at least two file servers and

various xerographic printers including a color printer

... snip ...

In addition to the table of machines at MIT ... the survey also

mentioned (at MIT):

The 26 LISP machines are connected to the CHAOS net, and

thus to several of the KA10s. Most of the VLSI work is being

done on these machines. MIT is currently building them at

the rate of two per month, at a cost of $50k to $100k each.

... snip ...

Visit to Larry Landweber at Univ of Wisc ... the computer science dept:

CPU               Mips
VAX 11/780        1.1
PDP 11/70         1.1
PDP 11/45         0.5
PDP 11/40         0.4
LSI 11/23 (8)     0.3
UNIVAC 1100/82
HP 3000

... snip ...

also mentioned in the survey (regarding univ. of wisc):

NSF has also just given Wisconsin, the Rand Corporation, and a few other

smaller universities a grant to develop CSNET, a network to connect

Computer Science research facilities. CSNET will connect ARPANET and

other existing networks together. (This is not the same as BITNET, the

RSCS based network being developed by CUNY and Yale). CSNET will be

used to send messages, mail, and files between all computer science

research groups.

... snip ...

part of the survey was looking at split between institutions going to

individual (networked) personal computers ... versus terminals into

shared machines (in bell labs case "project" machines) ... much more

detailed Bell Labs portion of the summer '81 survey reproduced here

https://www.garlic.com/~lynn/2006n.html#56 AT&T Labs vs. Google Labs - R&D History

other past posts in this thread:

https://www.garlic.com/~lynn/2008l.html#78 Yet another squirrel question - Results (very very long post)

https://www.garlic.com/~lynn/2008l.html#82 Yet another squirrel question - Results (very very long post)

https://www.garlic.com/~lynn/2008l.html#84 Yet another squirrel question - Results (very very long post)

https://www.garlic.com/~lynn/2008l.html#86 Yet another squirrel question - Results (very very long post)

https://www.garlic.com/~lynn/2008l.html#87 Yet another squirrel question - Results (very very long post)

https://www.garlic.com/~lynn/2008m.html#1 Yet another squirrel question - Results (very very long post)

https://www.garlic.com/~lynn/2008m.html#2 Yet another squirrel question - Results (very very long post)

https://www.garlic.com/~lynn/2008m.html#6 Yet another squirrel question - Results (very very long post)


From: Anne & Lynn Wheeler <lynn@garlic.com>
Subject: Re: Fraud due to stupid failure to test for negative
Newsgroups: alt.folklore.computers
Date: Thu, 21 Aug 2008 09:14:08 -0400

jmfbahciv <jmfbahciv@aol> writes:

one possible scenario is that some amount of DOD networking is concentrated on cyber warfare ... both offensive and defensive. Boyd's OODA-loops evolved out of military conflict ... but his briefings started to get into applicability of OODA-loops to other types of competitive environments (commercial, business).

a recent example

Buzz of the Week: A cyberwar paradox

http://www.fcw.com/print/22_26/news/153509-1.html?topic=security

the above makes references to earlier articles about Air Force touting

its cyber command and then article that it was suspending it:

So it was curious that on Aug. 12, the same day of the New York Times

story, former FCW reporter Bob Brewin broke the story for Government

Executive — confirmed by FCW — that the Air Force was suspending its

cyber command program. As trumpeted in Air Force TV ads, the Cyber

Command was seen as a way for DOD to coordinate its cyber warfare

initiatives, both offensive and defensive. In October 2007, FCW named

Air Force Maj. Gen. William Lord, who was leading the command, as a

government Power Player.

... snip ...

additional conjecture is OODA-loop possibly being used out-of-context with no reference to its history and origin.

as to my original post that also drifted into emperor's new clothes parable ... there is always the frequent references to what happens to the messenger (bearer of bad news). there could be more than a little of that in the injunction response to the MIT/transit presentation.

i've also referenced the emperor's new clothes parable and long-winded

decade old post that included mention of need for visibility into

underlying values of CDO-like instruments (in part, because two decades

ago, toxic CDOs had been used in the S&L crisis to obfuscate underlying values

... and "unload" the properties ... for significantly more than they were

actually worth).

https://www.garlic.com/~lynn/aepay3.htm#riskm

there was an article in the washington post a couple days ago about documents from 2006 by GSE executives about their brilliant/wonderful strategy moving into subprime mortgage (toxic) CDOs ... sort of left hanging in the air was obviously the strategy wasn't that wonderful ... but no comment about replacing those executives (which has been happening at other institutions that had followed similar strategy).

recent posts mentioning emperor's new clothes parable

https://www.garlic.com/~lynn/2008j.html#40 dollar coins

https://www.garlic.com/~lynn/2008j.html#60 dollar coins

https://www.garlic.com/~lynn/2008j.html#69 lack of information accuracy

https://www.garlic.com/~lynn/2008k.html#10 Why do Banks lend poorly in the sub-prime market? Because they are not in Banking!

https://www.garlic.com/~lynn/2008k.html#16 dollar coins

https://www.garlic.com/~lynn/2008k.html#27 dollar coins

other past posts in this thread:

https://www.garlic.com/~lynn/2008l.html#89 Fraud due to stupid failure to test for negative

https://www.garlic.com/~lynn/2008m.html#0 Fraud due to stupid failure to test for negative

https://www.garlic.com/~lynn/2008m.html#5 Fraud due to stupid failure to test for negative

https://www.garlic.com/~lynn/2008m.html#8 Fraud due to stupid failure to test for negative

--

40+yrs virtualization experience (since Jan68), online at home since Mar70

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: Yet another squirrel question - Results (very very long post) Newsgroups: alt.folklore.computers Date: Thu, 21 Aug 2008 12:44:22 -0400

Quadibloc <jsavard@ecn.ab.ca> writes:

note that original 23jun69 unbundling announcement was in response to

various litigation by the gov. and others. they had managed to make the

case that kernel software should still be free. however, with the

distraction of future system

https://www.garlic.com/~lynn/submain.html#futuresys

it is claimed to have significantly contributed to clone processors gaining foot-hold in the market. those clone processors then contributed to the decision to (also) start charging for kernel software (initially just kernel software that wasn't directly involved in low-level hardware support).

somewhat related recent archeological post in comp.arch

https://www.garlic.com/~lynn/2008m.html#7 Future architectures

and recent resource manager archeological post in bit.listserv.ibm-main

https://www.garlic.com/~lynn/2008m.html#10 Unbelievable Patent for JCL

referencing in the mid-80s, being contacted by corporate lawyers involved in some sort of scheduling related patent litigation and looking for copies of stuff that I had done nearly two decades earlier as an undergraduate (as example of prior art)

--

40+yrs virtualization experience (since Jan68), online at home since Mar70

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: Anyone heard of a company called TIBCO ? Newsgroups: bit.listserv.ibm-main Date: Thu, 21 Aug 2008 15:27:05 -0400

tbabonas@COMCAST.NET (Tony B.) writes:

and from some '97 archive:

Internet Publish and Subscribe Protocol

TIBCO Inc., and more than a dozen Internet companies have endorsed a

proposed new industry standard for the "push" model of information

distribution over the Internet. The proposed standard, called publish

and subscribe, will reduce Internet traffic and make it easier to find

and receive information on-line. The companies, which include Cisco

Systems, Inc., CyberCash, Informix, Infoseek, JavaSoft, Sun

Microsystems, Verisign, NETCOM, and others in addition to TIBCO,

announced plans, products and support for publish and subscribe. TIBCO

and Cisco Systems are developing an open reference specification for

publish and subscribe technology.

... snip ...

--

40+yrs virtualization experience (since Jan68), online at home since Mar70

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: Fraud due to stupid failure to test for negative Newsgroups: alt.folklore.computers Date: Thu, 21 Aug 2008 16:25:31 -0400

Anne & Lynn Wheeler <lynn@garlic.com> writes:

business news program just "asked" what did the GSEs do wrong? ... and their immediate answer: they bought $5bil in (toxic) CDOs with $80mil of capital ... i.e. heavily leveraged -- not quite 100times (the potential $25bil bailout estimates for GSEs seems to be all holdings)
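
as a rough check on the "not quite 100times" remark, the leverage can be worked out directly from the two figures quoted above. this is only a back-of-envelope sketch; the function names are made up for illustration:

```python
def leverage_ratio(holdings: float, capital: float) -> float:
    """Dollars of holdings carried per dollar of capital."""
    return holdings / capital


def loss_that_wipes_out_capital(holdings: float, capital: float) -> float:
    """Fractional drop in the value of the holdings that equals all the capital."""
    return capital / holdings


# figures quoted above: $5bil in (toxic) CDOs bought with $80mil of capital
ratio = leverage_ratio(5e9, 80e6)
wipeout = loss_that_wipes_out_capital(5e9, 80e6)
print(f"leverage {ratio:.1f}x, capital gone after a {wipeout:.1%} decline")
```

at that leverage, a decline of less than two percent in the value of the holdings is enough to consume all of the capital.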

this seems penny-ante stuff compared to other institutions that have already taken approx. $500bil in write-downs (frequently in previously triple-A rated toxic CDOs), with projections that it will eventually be $1-$2 trillion.

--

40+yrs virtualization experience (since Jan68), online at home since Mar70

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: Fraud due to stupid failure to test for negative Newsgroups: alt.folklore.computers Date: Fri, 22 Aug 2008 10:47:46 -0400

jmfbahciv <jmfbahciv@aol> writes:

try here:

http://www.pbs.org/wgbh/pages/frontline/shows/wallstreet/weill

as well as

http://www.pbs.org/wgbh/pages/frontline/shows/wallstreet/weill/demise.html

news this morning is that Bernanke is saying that there won't be any more making investment banks whole. a possible clinker is that with the repeal of Glass-Steagall (i.e. passed in the wake of the crash of '29 to keep the safety&soundness of regulated banking separate from unregulated, risky investment banking), there are now some regulated banks that have merged with/acquired investment banking units (that got heavily leveraged into toxic CDOs ... as did the GSEs).

some recent references to some of the process of repealing Glass-Steagall:

https://www.garlic.com/~lynn/2008k.html#36 dollar coins

https://www.garlic.com/~lynn/2008k.html#41 dollar coins

another post in a different thread:

https://www.garlic.com/~lynn/2008g.html#66 independent appraisers

above references Citigroup paid $400mil fine in 2002 and the CEO was forbidden from communicating with various people in the company.

--

40+yrs virtualization experience (since Jan68), online at home since Mar70

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: Fraud due to stupid failure to test for negative Newsgroups: alt.folklore.computers Date: Fri, 22 Aug 2008 13:30:39 -0400

Anne & Lynn Wheeler <lynn@garlic.com> writes:

any issue about GSEs executives losing their jobs ... after bragging about how wonderful it was their (heavily leveraged) buying $5bil in toxic CDOs with only $80mil in capital (when similar activity by executives at other institutions were losing their jobs) ... news today attributes comments by Buffett that if the GSEs weren't gov't backed institutions, they would have already been gone .... his company had been the largest Freddie shareholder around 2000 and 2001, but sold its shares after realizing that both companies were trying "to report quarterly earnings to please Wall Street" ... they needed to keep earnings growing to keep stock market happy and turned to accounting to do it.

--

40+yrs virtualization experience (since Jan68), online at home since Mar70

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: IBM-MAIN longevity Newsgroups: bit.listserv.ibm-main,alt.folklore.computers Date: Sat, 23 Aug 2008 16:48:13 -0400

Anne & Lynn Wheeler <lynn@garlic.com> writes:

BITNET     435
ARPAnet   1155
CSnet      104 (excluding ARPAnet overlap)
VNET      1650
EasyNet   4200
UUCP      6000
USENET    1150 (excluding UUCP nodes)

re:

for a little *arpanet* (arpanet pre-tcp/ip made a distinction between the number of network IMP nodes and the number of hosts connected to IMPs) from RFC:

https://www.garlic.com/~lynn/rfcidx4.htm#1296

1296 I Internet Growth (1981-1991), Lottor M., 1992/01/29 (9pp) (.txt=20103) (Refs 921, 1031, 1034, 1035, 1178)

08/81      213  Host table #152
05/82      235  Host table #166
08/83      562  Host table #300
10/84    1,024  Host table #392
10/85    1,961  Host table #485
02/86    2,308  Host table #515
11/86    5,089
12/87   28,174
07/88   33,000
10/88   56,000
01/89   80,000
07/89  130,000
10/89  159,000
10/90  313,000
01/91  376,000
07/91  535,000
10/91  617,000
01/92  727,000

... snip ...

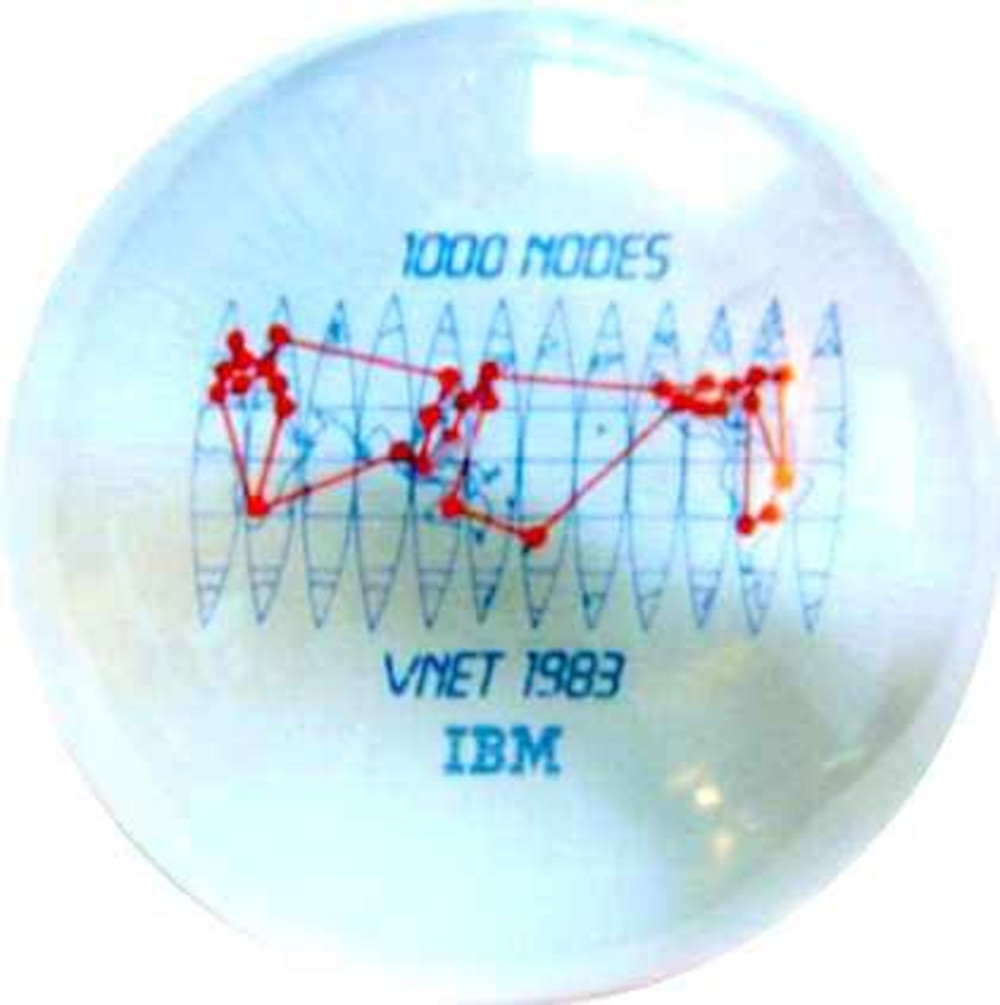

by comparison VNET (internal network hosts and nodes were equivalent):

reference to more than 300 nodes in 1979

https://www.garlic.com/~lynn/2006r.html#7

reference to 1000 nodes in 1983:

https://www.garlic.com/~lynn/internet.htm#22

https://www.garlic.com/~lynn/99.html#112

reference to nodes approaching 2000 in 1985

https://www.garlic.com/~lynn/2006t.html#49

other internal network posts

https://www.garlic.com/~lynn/subnetwork.html#internalnet

--

40+yrs virtualization experience (since Jan68), online at home since Mar70

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: IBM-MAIN longevity Newsgroups: bit.listserv.ibm-main,alt.folklore.computers Date: Sun, 24 Aug 2008 08:50:19 -0400

Morten Reistad <first@last.name> writes:

dish was significantly smaller than the 4.5m dishes for tdma system on

internal network (working with nearly a decade earlier) ... had

started with some telco T1 circuits, some T1 circuits on campus T3

collins digital radio (microwave, multiple locations in south san

jose) and some T1 circuits on existing C-band system that used 10m

dishes (west coast / east coast). then got to work on design of tdma

system with 4.5m dishes for Ku-band system and a transponder on sbs-4

(that went up on 41-d, 5sep84). misc. past posts mentioning 41-d:

https://www.garlic.com/~lynn/2000b.html#27 Tysons Corner, Virginia

https://www.garlic.com/~lynn/2002p.html#28 Western Union data communications?

https://www.garlic.com/~lynn/2003j.html#29 IBM 3725 Comms. controller - Worth saving?

https://www.garlic.com/~lynn/2003k.html#14 Ping: Anne & Lynn Wheeler

https://www.garlic.com/~lynn/2004b.html#23 Health care and lies

https://www.garlic.com/~lynn/2004o.html#60 JES2 NJE setup

https://www.garlic.com/~lynn/2005h.html#21 Thou shalt have no other gods before the ANSI C standard

https://www.garlic.com/~lynn/2005q.html#17 Ethernet, Aloha and CSMA/CD -

https://www.garlic.com/~lynn/2006k.html#55 5963 (computer grade dual triode) production dates?

https://www.garlic.com/~lynn/2006m.html#11 An Out-of-the-Main Activity

https://www.garlic.com/~lynn/2006m.html#16 Why I use a Mac, anno 2006

https://www.garlic.com/~lynn/2006p.html#31 "25th Anniversary of the Personal Computer"

https://www.garlic.com/~lynn/2006v.html#41 Year-end computer bug could ground Shuttle

https://www.garlic.com/~lynn/2007p.html#61 Damn

past posts in thread:

https://www.garlic.com/~lynn/2008k.html#81 IBM-MAIN longevity

https://www.garlic.com/~lynn/2008k.html#83 IBM-MAIN longevity

https://www.garlic.com/~lynn/2008k.html#85 IBM-MAIN longevity

https://www.garlic.com/~lynn/2008l.html#0 IBM-MAIN longevity

https://www.garlic.com/~lynn/2008l.html#1 IBM-MAIN longevity

https://www.garlic.com/~lynn/2008l.html#2 IBM-MAIN longevity

https://www.garlic.com/~lynn/2008l.html#3 IBM-MAIN longevity

https://www.garlic.com/~lynn/2008l.html#4 IBM-MAIN longevity

https://www.garlic.com/~lynn/2008l.html#5 IBM-MAIN longevity

https://www.garlic.com/~lynn/2008l.html#6 IBM-MAIN longevity

https://www.garlic.com/~lynn/2008l.html#7 IBM-MAIN longevity

https://www.garlic.com/~lynn/2008l.html#8 IBM-MAIN longevity

https://www.garlic.com/~lynn/2008l.html#9 IBM-MAIN longevity

https://www.garlic.com/~lynn/2008l.html#10 IBM-MAIN longevity

https://www.garlic.com/~lynn/2008l.html#12 IBM-MAIN longevity

https://www.garlic.com/~lynn/2008l.html#13 IBM-MAIN longevity

https://www.garlic.com/~lynn/2008l.html#16 IBM-MAIN longevity

https://www.garlic.com/~lynn/2008l.html#17 IBM-MAIN longevity

https://www.garlic.com/~lynn/2008l.html#19 IBM-MAIN longevity

https://www.garlic.com/~lynn/2008l.html#20 IBM-MAIN longevity

https://www.garlic.com/~lynn/2008m.html#18 IBM-MAIN longevity

--

40+yrs virtualization experience (since Jan68), online at home since Mar70

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: IBM-MAIN longevity Newsgroups: bit.listserv.ibm-main,alt.folklore.computers Date: Sun, 24 Aug 2008 12:19:33 -0400

Anne & Lynn Wheeler <lynn@garlic.com> writes:

other posts mentioning various "HSDT" activities

https://www.garlic.com/~lynn/subnetwork.html#hsdt

in 1980, the STL lab was starting to burst at the seams (it had only opened 4yrs earlier ... dedicated the same week the smithsonian air&space opened) ... and the decision was made to move 300 people from the IMS database group to offsite location. The group looked at using "remote" 3270s into the STL mainframes ... but found the response totally unacceptable. The decision was then made to go with local 3270s at the remote location using HYPERchannel as (mainframe) channel extender ... over a T1 circuit (on the campus T3 collins digital radio serving the area).

I got involved to write the driver support for HYPERchannel. The channel extender support wasn't (totally) software transparent. HYPERchannel had a (remote) A51x channel emulation box that (mainframe) controllers could connect to. Normal mainframe channel operation executed channel programs directly out of mainframe memory. However, the latency over remote connections made this infeasible ... so the mainframe device driver had to scan the channel program and make an emulated copy which was downloaded to the memory of the HYPERchannel A51x box ... and then executed directly out of A51x memory.

This is analogous to what a virtual machine operating system has to do: scanning

the channel program and making a shadow copy ... which has real addresses

substituted for the virtual machine's "virtual" addresses. recent

discussion (in comp.arch) of virtual machine requirement creating

channel program copies

https://www.garlic.com/~lynn/2008m.html#7 Future architectures
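
a minimal sketch of that scan-and-copy step, for the virtual machine case. the CCW layout here is heavily simplified (command, address, count, chain flag), the page table is a plain dict, and every name is made up for illustration; it is not the actual CP or HYPERchannel driver code. a real implementation also has to handle transfers that cross page boundaries and keep the pages fixed in memory for the duration of the I/O, which is left out:

```python
from dataclasses import dataclass
from typing import Dict, List

PAGE_SIZE = 4096  # assumed page size for the illustration


@dataclass
class CCW:
    command: int   # channel command code
    address: int   # data address (virtual in the guest's copy)
    count: int     # byte count
    chained: bool  # True if another CCW follows in the program


def translate(vaddr: int, page_table: Dict[int, int]) -> int:
    """Map a virtual address to a real one via a virtual-page -> real-page dict."""
    vpage, offset = divmod(vaddr, PAGE_SIZE)
    return page_table[vpage] * PAGE_SIZE + offset


def shadow_copy(program: List[CCW], page_table: Dict[int, int]) -> List[CCW]:
    """Scan a guest channel program and build a copy holding real addresses."""
    shadow = []
    for ccw in program:
        real = translate(ccw.address, page_table)
        shadow.append(CCW(ccw.command, real, ccw.count, ccw.chained))
        if not ccw.chained:  # last CCW in the chain
            break
    return shadow


# hypothetical two-CCW program; virtual pages 1 and 2 live in real pages 7 and 3
guest = [CCW(0x02, 0x1010, 80, True), CCW(0x01, 0x2020, 80, False)]
real_program = shadow_copy(guest, {1: 7, 2: 3})
print([hex(c.address) for c in real_program])
```

the channel extender case has the same shape: instead of substituting real addresses, the copy is built in a form that can be downloaded and run out of the remote box's own memory.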

shot of 3270 logo screen used:

https://www.garlic.com/~lynn/vmhyper.jpg

as i've mentioned in the past, there was no noticeable difference in 3270 terminal response ... and overall system thruput actually increased 10-15 percent (the issue being that the HYPERchannel A220 local channel interface had much lower channel busy overhead than the 327x controller boxes ... doing the same operations).

misc past references:

https://www.garlic.com/~lynn/94.html#24 CP spooling & programming technology

https://www.garlic.com/~lynn/96.html#27 Mainframes & Unix

https://www.garlic.com/~lynn/2000b.html#38 How to learn assembler language for OS/390 ?

https://www.garlic.com/~lynn/2000c.html#68 Does the word "mainframe" still have a meaning?

https://www.garlic.com/~lynn/2001.html#22 Disk caching and file systems. Disk history...people forget

https://www.garlic.com/~lynn/2001k.html#46 3270 protocol

https://www.garlic.com/~lynn/2002f.html#7 Blade architectures

https://www.garlic.com/~lynn/2002f.html#60 Mainframes and "mini-computers"

https://www.garlic.com/~lynn/2002g.html#61 GE 625/635 Reference + Smart Hardware

https://www.garlic.com/~lynn/2002i.html#43 CDC6600 - just how powerful a machine was it?

https://www.garlic.com/~lynn/2002j.html#67 Total Computing Power

https://www.garlic.com/~lynn/2003g.html#22 303x, idals, dat, disk head settle, and other rambling folklore

https://www.garlic.com/~lynn/2004e.html#33 The attack of the killer mainframes

https://www.garlic.com/~lynn/2004p.html#29 FW: Is FICON good enough, or is it the only choice we get?

https://www.garlic.com/~lynn/2005e.html#13 Device and channel

https://www.garlic.com/~lynn/2005u.html#22 Channel Distances

https://www.garlic.com/~lynn/2005u.html#23 Channel Distances

https://www.garlic.com/~lynn/2006i.html#34 TOD clock discussion

https://www.garlic.com/~lynn/2006u.html#19 Why so little parallelism?

--

40+yrs virtualization experience (since Jan68), online at home since Mar70

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: Fraud due to stupid failure to test for negative Newsgroups: alt.folklore.computers Date: Sun, 24 Aug 2008 15:19:40 -0400

jmfbahciv <jmfbahciv@aol> writes:

there are some others that can be found:

http://www.youtube.com/watch?v=qh0k9kc3EY0

past posts mentioning Boyd

https://www.garlic.com/~lynn/subboyd.html

misc. recent posts mentioning US automobile C4 effort:

https://www.garlic.com/~lynn/2008.html#84 Toyota Sales for 2007 May Surpass GM

https://www.garlic.com/~lynn/2008.html#85 Toyota Sales for 2007 May Surpass GM

https://www.garlic.com/~lynn/2008b.html#9 folklore indeed

https://www.garlic.com/~lynn/2008c.html#22 Toyota Beats GM in Global Production

https://www.garlic.com/~lynn/2008c.html#68 Toyota Beats GM in Global Production

https://www.garlic.com/~lynn/2008e.html#30 VMware signs deal to embed software in HP servers

https://www.garlic.com/~lynn/2008e.html#31 IBM announced z10 ..why so fast...any problem on z 9

https://www.garlic.com/~lynn/2008f.html#50 Toyota's Value Innovation: The Art of Tension

https://www.garlic.com/~lynn/2008h.html#65 Is a military model of leadership adequate to any company, as far as it based most on authority and discipline?

https://www.garlic.com/~lynn/2008i.html#31 Mastering the Dynamics of Innovation

https://www.garlic.com/~lynn/2008k.html#2 Republican accomplishments and Hoover

https://www.garlic.com/~lynn/2008k.html#50 update on old (GM) competitiveness thread

https://www.garlic.com/~lynn/2008k.html#58 Mulally motors on at Ford

--

40+yrs virtualization experience (since Jan68), online at home since Mar70

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: Future architectures Newsgroups: alt.comp.hardware.pc-homebuilt,comp.arch,sci.electronics.design Date: Mon, 25 Aug 2008 10:10:07 -0400

nmm1@cus.cam.ac.uk (Nick Maclaren) writes:

from long ago and far away (with regard to 3090):

Date: 11/17/83 13:40:41

To: wheeler

The machine has a split cache, the instruction cache is managed with

real addresses. No problems.

The operand cache is managed with two directories: one holds LOGICAL

addresses (i.e. mixture of real and virtual), and the other holds real

addresses. It appears to the outside world to be managed with real

addresses. I can think of no reason why shared pages will be peculiar

in this environment.

... snip ...

related old email about the 3090 cache operation

https://www.garlic.com/~lynn/2003j.html#email831118

in this post, also mentioning 801 (separate I&D cache) from 1975:

https://www.garlic.com/~lynn/2003j.html#42 Flash 10208

this (earlier) email mentions 5880 (Amdahl mainframe clone) having

separate I & D caches

https://www.garlic.com/~lynn/2006b.html#email810318

in this post

https://www.garlic.com/~lynn/2006b.html#38 blast from the past ... macrocode

misc. posts mentioning 801 (romp, rios, power/pc, etc).

https://www.garlic.com/~lynn/subtopic.html#801

One of the differences between 801 split cache and the 3090 (5880) split cache ... was that 3090 (& 5880) managed cache consistency (between I & D caches) in hardware ...while 801 required software to flush D-cache & invalidate I-cache (like program loaders which may have modified instruction streams ... in the data cache ... in order to make sure that modifications in the D-cache were correctly reflected in the I-cache instruction stream).
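
a toy model of the software-managed case may make the difference concrete. this is only a sketch under simplifying assumptions (a write-back data cache, an instruction cache that fills only from memory, dicts instead of cache lines); the class and method names are invented and don't correspond to any real 801 interface:

```python
class SplitCache:
    """Toy machine with separate I and D caches and no hardware consistency."""

    def __init__(self):
        self.memory = {}
        self.dcache = {}  # write-back data cache: address -> value
        self.icache = {}  # instruction cache: address -> value

    def store(self, addr, value):
        # a store (e.g. by a program loader) lands in the D-cache only
        self.dcache[addr] = value

    def fetch(self, addr):
        # an instruction fetch that misses fills from memory, never from the D-cache
        if addr not in self.icache:
            self.icache[addr] = self.memory.get(addr)
        return self.icache[addr]

    def flush_dcache(self):
        # push modified data out to memory
        self.memory.update(self.dcache)
        self.dcache.clear()

    def invalidate_icache(self):
        # force later instruction fetches to go back to memory
        self.icache.clear()


m = SplitCache()
m.memory[0x100] = "old instruction"
m.fetch(0x100)                      # old instruction is now in the I-cache
m.store(0x100, "new instruction")   # loader modifies the instruction stream
stale = m.fetch(0x100)              # still the old instruction
m.flush_dcache()
m.invalidate_icache()
fresh = m.fetch(0x100)              # now reflects the modification
print(stale, "->", fresh)
```

with hardware-managed consistency the flush and invalidate steps aren't needed; the second fetch would already see the modified instruction.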

other old email mentioning 801

https://www.garlic.com/~lynn/lhwemail.html#801

semi-related recent post in this thread (discussing virtual memory &

paging from the 60s):

https://www.garlic.com/~lynn/2008m.html#7 Future architectures

for related topic drift ... "small" shared segments in ROMP chip (801

used later in PC/RT)

https://www.garlic.com/~lynn/2006y.html#email841114c

https://www.garlic.com/~lynn/2006y.html#email841127

in this post:

https://www.garlic.com/~lynn/2006y.html#36 Multiple mappings

and (this time, Iliad chip ... another 801)

https://www.garlic.com/~lynn/2006u.html#email830420

in this post:

https://www.garlic.com/~lynn/2006u.html#37 To RISC or not to RISC

similar post along this line

https://www.garlic.com/~lynn/2007f.html#22 The Perfect Computer - 36 bits?

https://www.garlic.com/~lynn/2008j.html#82 Taxes

--

40+yrs virtualization experience (since Jan68), online at home since Mar70

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: Blinkylights Newsgroups: alt.folklore.computers Date: Mon, 25 Aug 2008 10:43:30 -0400

Greg Menke <gusenet@comcast.net> writes:

the "real" indicator (since all the links required link encryptors) was when the sync light went out on the link encryptors. getting link encryptors back in sync was more painful than simply resending block-in-error ... part of the motivation for 1) FEC (forward error correcting) and 2) transition away from link encryptors to (strong) packet encryption. other motivation was a lot of money was being spent on link encryptors (circa 85/86, there was some comment that the internal network had over half of all the link encryptors in the world).
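
for anybody not familiar with FEC: the sender adds redundant bits so the receiver can repair a damaged block itself instead of asking for a resend. the codes actually used on those links aren't described here; the sketch below swaps in Hamming(7,4), purely because it is the smallest textbook example (4 data bits, 3 parity bits, corrects any single flipped bit). function names are made up for the illustration:

```python
def hamming74_encode(data):
    """Encode 4 data bits as a 7-bit codeword (positions 1..7, parity at 1, 2, 4)."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4
    p2 = d1 ^ d3 ^ d4
    p4 = d2 ^ d3 ^ d4
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_decode(codeword):
    """Correct up to one flipped bit and return the 4 data bits."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]   # parity check over positions 1, 3, 5, 7
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]   # parity check over positions 2, 3, 6, 7
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]   # parity check over positions 4, 5, 6, 7
    syndrome = s1 + 2 * s2 + 4 * s4  # 0 means clean, else 1-based position in error
    if syndrome:
        c[syndrome - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


sent = hamming74_encode([1, 0, 1, 1])
received = list(sent)
received[4] ^= 1                     # one bit damaged in transit
print(hamming74_decode(received))    # data recovered without a resend
```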

old email mentioning PGP-like public key encryption

https://www.garlic.com/~lynn/2006w.html#email810515

in this post

https://www.garlic.com/~lynn/2006w.html#12 more secure communication over the network

other old email mentioning public key and/or crypto

https://www.garlic.com/~lynn/lhwemail.html#crypto

recent crypto related thread drift:

https://www.garlic.com/~lynn/2008h.html#87 New test attempt

https://www.garlic.com/~lynn/2008i.html#86 Own a piece of the crypto wars

https://www.garlic.com/~lynn/2008j.html#43 What is "timesharing" (Re: OS X Finder windows vs terminal window weirdness)

and for other drift, some old posts mentioning working with cyclotomics

regarding FEC:

https://www.garlic.com/~lynn/2001.html#1 4M pages are a bad idea (was Re: AMD 64bit Hammer CPU and VM)

https://www.garlic.com/~lynn/2002p.html#53 Free Desktop Cyber emulation on PC before Christmas

https://www.garlic.com/~lynn/2003e.html#27 shirts

https://www.garlic.com/~lynn/2004f.html#37 Why doesn't Infiniband supports RDMA multicast

https://www.garlic.com/~lynn/2004o.html#43 360 longevity, was RISCs too close to hardware?

https://www.garlic.com/~lynn/2005n.html#27 Data communications over telegraph circuits

https://www.garlic.com/~lynn/2007.html#29 Just another example of mainframe costs

https://www.garlic.com/~lynn/2007j.html#4 Even worse than UNIX

https://www.garlic.com/~lynn/2007v.html#82 folklore indeed

https://www.garlic.com/~lynn/2008l.html#19 IBM-MAIN longevity

--

40+yrs virtualization experience (since Jan68), online at home since Mar70

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: Some confusion about virtual cache Newsgroups: comp.arch,alt.folklore.computers Date: Mon, 25 Aug 2008 13:30:52 -0400

nmm1@cus.cam.ac.uk (Nick Maclaren) writes:

we had done something analogous for 370 16-way SMP more than a decade earlier (that didn't ship as a product).

another example of restructuring data (for 801/risc rios) was the aix journaled filesystem ... where all the unix filesystem metadata was collected in a storage area that was flagged as "transaction" memory i.e. allowed identifying changed/modified filesystem metadata for logging/journaling ... w/o requiring explicit logging calls whenever there was modification of transaction data.
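
a rough software analogy of that idea: the store itself records what changed, so the code doing the update never makes a logging call. in the hardware case the tracking was done by the memory system; here a dict subclass stands in for it, and all the names (including the metadata keys) are hypothetical:

```python
class TransactionMemory(dict):
    """Dict that remembers which keys were modified since the last commit."""

    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)
        self.dirty = set()

    def __setitem__(self, key, value):
        super().__setitem__(key, value)
        self.dirty.add(key)  # the change is noted as a side effect of the store

    def commit(self, journal):
        """Append the changed entries to the journal and reset the tracking."""
        for key in sorted(self.dirty):
            journal.append((key, self[key]))
        self.dirty.clear()


metadata = TransactionMemory(inode_7_size=0, inode_9_size=0)
journal = []
metadata["inode_7_size"] = 4096   # ordinary store, no explicit logging call
metadata.commit(journal)
print(journal)
```

only the entry that was actually modified ends up in the journal; the untouched one isn't logged.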

misc. past posts mentioning 801, risc, romp, rios, power, power/pc, etc

https://www.garlic.com/~lynn/subtopic.html#801

misc. past posts mentioning "live oak" (four processor, single-chip

rios)

https://www.garlic.com/~lynn/2000c.html#21 Cache coherence [was Re: TF-1]

https://www.garlic.com/~lynn/2001j.html#17 I hate Compaq

https://www.garlic.com/~lynn/2003d.html#57 Another light on the map going out

https://www.garlic.com/~lynn/2004q.html#40 Tru64 and the DECSYSTEM 20

https://www.garlic.com/~lynn/2006w.html#40 Why so little parallelism?

https://www.garlic.com/~lynn/2006w.html#41 Why so little parallelism?

some of the above makes reference to the ("alternative") cluster

approach ... trying to heavily leverage commodity priced components (w/o

cache consistency ... that was eventually announced as the corporate

supercomputer) ... misc. old email

https://www.garlic.com/~lynn/lhwemail.html#medusa

for other topic drift

https://www.garlic.com/~lynn/2008m.html#22 Future architectures

... reference more detailed 3090 cache description ... has a small

"fast" logical (aka virtual) index ... that was kept consistent with

the larger real index

https://www.garlic.com/~lynn/2003j.html#email831118

in this post

https://www.garlic.com/~lynn/2003j.html#42 Flash 10208

the 370 16-way SMP effort in the mid-70s ... leveraged charlie's

invention of the compare&swap instruction ("CAS" was chosen because they

are charlie's initials) ... misc. past posts mentioning SMP and/or

compare&swap instruction

https://www.garlic.com/~lynn/subtopic.html#smp
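
for reference, the usual way compare&swap gets used is a retry loop: fetch the current value, compute the new one, and store it only if nobody else changed the word in the meantime. python has no such instruction, so in this sketch a lock stands in for the hardware atomicity; the class and function names are invented, and the real instruction reports the outcome differently than the boolean used here:

```python
import threading


class Word:
    """A storage word with an emulated atomic compare-and-swap."""

    def __init__(self, value=0):
        self.value = value
        self._lock = threading.Lock()  # stands in for hardware atomicity

    def compare_and_swap(self, expected, new):
        """Store new only if the word still holds expected; report success."""
        with self._lock:
            if self.value == expected:
                self.value = new
                return True
            return False


def atomic_add(word, amount):
    """Retry loop: fetch, compute, compare&swap, try again if someone got in first."""
    while True:
        old = word.value
        if word.compare_and_swap(old, old + amount):
            return


def run(threads=4, iterations=1000):
    """Have several threads bump one shared counter using only compare&swap."""
    counter = Word()

    def worker():
        for _ in range(iterations):
            atomic_add(counter, 1)

    pool = [threading.Thread(target=worker) for _ in range(threads)]
    for t in pool:
        t.start()
    for t in pool:
        t.join()
    return counter.value


print(run())
```

no update is lost even though no thread ever holds a lock across the fetch-compute-store sequence; a thread that loses the race simply retries.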

--

40+yrs virtualization experience (since Jan68), online at home since Mar70

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: Taxes Newsgroups: alt.folklore.computers Date: Mon, 25 Aug 2008 14:03:55 -0400

Peter Flass <Peter_Flass@Yahoo.com> writes:

the author was interviewed on tv business news show this morning along with a lobbyist. the author used the line that there are some secretaries (in financial institutions) paying a higher tax rate than their CEO bosses. The lobbyist attempted to position the argument in terms of CEOs reasonably should have larger salaries than secretaries ... obfuscating the reference to "loop-holes" congress has passed that allow CEOs to have a lower tax rate. It wasn't directly the size of the salary ... but it could be reasonably expected to see both at least having the same tax rate.

this is separate to past references regarding executives now have a

salary ratio that is 400:1 that of standard workers ... up from ratio

of 20:1 ... and much more than the 10:1 found in other

cultures/countries

https://www.garlic.com/~lynn/2008i.html#73 Should The CEO Have the Lowest Pay In Senior Management?

https://www.garlic.com/~lynn/2008j.html#24 To: Graymouse -- Ireland and the EU, What in the H... is all this about?

https://www.garlic.com/~lynn/2008j.html#76 lack of information accuracy

https://www.garlic.com/~lynn/2008k.html#71 Cormpany sponsored insurance

--

40+yrs virtualization experience (since Jan68), online at home since Mar70

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: Fraud due to stupid failure to test for negative Newsgroups: alt.folklore.computers Date: Mon, 25 Aug 2008 14:54:45 -0400

jmfbahciv <jmfbahciv@aol> writes:

i've noted before about past comment (on one of the tv business news

shows) regarding Bernanke's litany about needing new regulations

... that american bankers are the most inventive in the world and

they've managed to totally screwup the system at least once a decade

regardless of the measures put in place attempting to prevent it:

https://www.garlic.com/~lynn/2008h.html#90 subprime write-down sweepstakes

https://www.garlic.com/~lynn/2008i.html#30 subprime write-down sweepstakes

https://www.garlic.com/~lynn/2008i.html#77 Do you think the change in bankrupcy laws has exacerbated the problems in the housing market leading more people into forclosure?

Looking at various recent articles ... there are a couple of items that I found (interesting?):

1) claims that the current credit problem was because (toxic) CDOs were too hard to evaluate

2) that wall street doesn't see the enormous profits going into the future (that they saw in the earlier part of this decade by heavily leveraging toxic CDOs).

wallstreet supposedly had the creme de la creme of financial experts, earning enormous compensation (took in well over hundred billion in just bonuses in 2002-2007 period) ... and they supposedly weren't able to figure out that trillions of dollars in poor quality (&/or subprime) mortgages were disappearing and then reappearing as triple-A rated toxic CDOs.

assuming purely random difficulty with evaluating (triple-A rated) toxic CDOs ... then there should be as much under-evaluation as there was over-evaluation ... implying that there would be as many "write-ups" (i.e. selling toxic CDOs at 200 percent profit) as there are "write-downs" (selling toxic CDOs at 22cents on the dollar ... eventually there will possibly be $1tril - $2tril in write-downs)
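
the "purely random" argument can be illustrated with a small simulation. it assumes valuation errors are symmetric with zero mean (drawn here from a normal distribution, which is my assumption and not anything from the articles); the function name and the sample size are arbitrary:

```python
import random


def count_errors(n=10_000, seed=0):
    """Price n instruments with zero-mean random error; count over vs under."""
    rng = random.Random(seed)
    over = under = 0
    for _ in range(n):
        error = rng.gauss(0.0, 1.0)  # assumed symmetric, zero-mean error
        if error > 0:
            over += 1    # over-valued: eventually shows up as a write-down
        elif error < 0:
            under += 1   # under-valued: eventually shows up as a write-up
    return over, under


over, under = count_errors()
print(f"over-valued: {over}, under-valued: {under}")
```

under that assumption the two counts come out roughly equal, which is the point of the argument: write-downs with essentially no offsetting write-ups don't look like random evaluation difficulty.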

an alternative interpretation was that (triple-A rated) toxic CDOs were being used just like toxic CDOs were used two decades ago during the S&L crisis to unload property at significantly higher value (people selling the toxic CDOs understood the value, leveraging toxic CDOs so that the buyers would pay a much higher premium ... obfuscating the actual underlying value).

as to profit/earnings ... from an institutional standpoint, it would look like the profits of a couple yrs ago ... are turning out actually to be enormous losses (to the institution, it isn't likely the responsible individuals are going to return their salaries and bonuses).

long-winded, decade old post discussing various things ... including

needing visibility into underlying values of CDO-like instruments

https://www.garlic.com/~lynn/aepay3.htm#riskm

misc. past posts mentioning the $137bil in wall street bonuses for

2002-2007:

https://www.garlic.com/~lynn/2008f.html#76 Bush - place in history

https://www.garlic.com/~lynn/2008f.html#95 Bush - place in history

https://www.garlic.com/~lynn/2008g.html#32 independent appraisers

https://www.garlic.com/~lynn/2008g.html#52 IBM CEO's remuneration last year ?

https://www.garlic.com/~lynn/2008g.html#66 independent appraisers

https://www.garlic.com/~lynn/2008h.html#42 The Return of Ada

https://www.garlic.com/~lynn/2008i.html#73 Should The CEO Have the Lowest Pay In Senior Management?

https://www.garlic.com/~lynn/2008j.html#3 dollar coins

https://www.garlic.com/~lynn/2008j.html#24 To: Graymouse -- Ireland and the EU, What in the H... is all this about?

https://www.garlic.com/~lynn/2008j.html#75 lack of information accuracy

https://www.garlic.com/~lynn/2008k.html#11 dollar coins

--

40+yrs virtualization experience (since Jan68), online at home since Mar70

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: Fraud due to stupid failure to test for negative Newsgroups: alt.folklore.computers Date: Mon, 25 Aug 2008 16:24:05 -0400

Anne & Lynn Wheeler <lynn@garlic.com> writes:

for other recent news tidbits ...

Report: FBI saw mortgage crisis coming in '04

http://latimesblogs.latimes.com/laland/2008/08/report-fbi-saw.html

Anyone smell a stench? The FBI knew about the housing scams in 2004

http://www.digitaljournal.com/article/258992

FBI saw threat of mortgage crisis

http://www.latimes.com/business/la-fi-mortgagefraud25-2008aug25,1,4792318.story

--

40+yrs virtualization experience (since Jan68), online at home since Mar70

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: Yet another squirrel question - Results (very very long post) Newsgroups: alt.folklore.computers Date: Tue, 26 Aug 2008 09:32:46 -0400

Louis Krupp <lkrupp@pssw.nospam.com.invalid> writes:

advent of web usage was affecting other session protocols also. i've

mentioned being called in to consult with small client/server startup

that wanted to do payment transactions ... which is frequently now

referred to as electronic commerce ... misc. past posts mentioning part

of that infrastructure called payment gateway

https://www.garlic.com/~lynn/subnetwork.html#gateway

they had a growing number of FTP (download) servers ... it started out with people "purchasing" the browser and downloading it. this was before front-end boundary routers did load-balancing routing of incoming transactions to a pool of backend servers (which I first saw being developed and deployed at Google). the (growing number of) server names were qualified with a numeric suffix: 1, 2, ... 10, etc. The last one I remember was a large sequent box (I think given the "20" suffix in the server name). The sequent people said that they had previously had to deal with a large number of unix scale-up issues ... having commercial customers with heavy loads ... things like 20,000 telnet sessions.
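
for contrast with users picking a numbered server by hand, the simplest form of front-end load-balancing just rotates incoming requests across the pool. this is only a round-robin sketch; the server names are hypothetical and real boundary routers weigh a lot more than arrival order:

```python
from itertools import cycle


def make_dispatcher(servers):
    """Return a function that assigns each incoming request to the next server in turn."""
    rotation = cycle(servers)

    def dispatch(request):
        return next(rotation)

    return dispatch


servers = [f"ftp{n}" for n in range(1, 4)]   # hypothetical pool: ftp1, ftp2, ftp3
dispatch = make_dispatcher(servers)
assigned = [dispatch(f"download-{i}") for i in range(5)]
print(assigned)
```

the client sees one name; which backend box does the work is decided at the front end.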

--

40+yrs virtualization experience (since Jan68), online at home since Mar70

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: Quality of IBM school clock systems? Newsgroups: alt.folklore.computers Date: Tue, 26 Aug 2008 10:14:04 -0400

Roland Hutchinson <my.spamtrap@verizon.net> writes:

'Perfect Pitch' In Humans Far More Prevalent Than Expected

http://www.sciencedaily.com/releases/2008/08/080826080600.htm

from above:

Humans are unique in that we possess the ability to identify pitches based on their relation to other pitches, an ability called relative pitch. Previous studies had shown that animals such as birds, for instance, can identify a series of repeated notes with ease, but when the notes are transposed up or down even a small amount, the melody becomes completely foreign to the bird.

... snip ...

--

40+yrs virtualization experience (since Jan68), online at home since Mar70

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: Taxes Newsgroups: alt.folklore.computers Date: Tue, 26 Aug 2008 11:41:34 -0400

greymaus <greymausg@mail.com> writes:

old line about being told that they could have forgiven you for being wrong, but they were never going to forgive you for being right ...

a few past references:

https://www.garlic.com/~lynn/2002k.html#61 arrogance metrics (Benoits) was: general networking

https://www.garlic.com/~lynn/2002q.html#16 cost of crossing kernel/user boundary

https://www.garlic.com/~lynn/2003i.html#71 Offshore IT

https://www.garlic.com/~lynn/2004k.html#14 I am an ageing techy, expert on everything. Let me explain the

https://www.garlic.com/~lynn/2007.html#26 MS to world: Stop sending money, we have enough - was Re: Most ... can't run Vista

https://www.garlic.com/~lynn/2007e.html#48 time spent/day on a computer

https://www.garlic.com/~lynn/2007k.html#3 IBM Unionization

https://www.garlic.com/~lynn/2007r.html#6 The history of Structure capabilities

https://www.garlic.com/~lynn/2008c.html#34 was: 1975 movie "Three Days of the Condor" tech stuff

the other line, from the dedication of Boyd Hall at USAF weapons school:

https://www.garlic.com/~lynn/2000e.html#35 War, Chaos, & Business (web site), or Col John Boyd

https://www.garlic.com/~lynn/2007.html#20 MS to world: Stop sending money, we have enough - was Re: Most ... can't run Vista

https://www.garlic.com/~lynn/2007h.html#74 John W. Backus, 82, Fortran developer, dies

https://www.garlic.com/~lynn/2007j.html#61 Lean and Mean: 150,000 U.S. layoffs for IBM?

https://www.garlic.com/~lynn/2007j.html#77 IBM Unionization

https://www.garlic.com/~lynn/2007k.html#3 IBM Unionization

https://www.garlic.com/~lynn/2007k.html#5 IBM Unionization

https://www.garlic.com/~lynn/2007n.html#4 the Depression WWII

https://www.garlic.com/~lynn/2007n.html#44 the Depression WWII

https://www.garlic.com/~lynn/2008b.html#45 windows time service

other posts referencing Boyd

https://www.garlic.com/~lynn/subboyd.html

--

40+yrs virtualization experience (since Jan68), online at home since Mar70

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: Baudot code direct to computers? Newsgroups: alt.folklore.computers Date: Tue, 26 Aug 2008 16:31:16 -0400

hancock4 writes:

Date: 12 April 1985, 20:07:33 EST

To: wheeler

Hi!

...

The feed pipe is now 14.4kbps async. 5 bit Baudot.

They are going to convert but WE feel it might not be on the same

schedule as we are, sooo.. we feel the Series/1 is required to convert

the 5bit Baudot to ASCII until the other system is complete.

... snip ...

later that year ...

http://query.nytimes.com/gst/fullpage.html?res=9F06E7D9133BF931A2575AC0A963948260

--

40+yrs virtualization experience (since Jan68), online at home since Mar70

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: IBM THINK original equipment sign Newsgroups: bit.listserv.ibm-main Date: Wed, 27 Aug 2008 09:26:45 -0400

ibm-main@TPG.COM.AU (Shane) writes:

--

40+yrs virtualization experience (since Jan68), online at home since Mar70

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: Taxes Newsgroups: alt.folklore.computers Date: Wed, 27 Aug 2008 09:55:01 -0400

jmfbahciv <jmfbahciv@aol> writes:

the referenced article

http://www.consumeraffairs.com/news04/2008/08/ceo_taxpayers.html

lists $20 billion in executive compensation tax loop-holes. the article does contribute to confusion about effective tax rate (i.e. actual tax paid divided by total compensation) by mentioning that the loop-holes "encourage excessive executive pay".

Lower effective tax rate (because of tax loop-holes) is a separate issue from the dramatic change in the ratio of executive pay to worker pay ... exploding from a ratio of 20:1 to 400:1 (compared to a ratio of 10:1 in most of the rest of the world).

One could make the case that with a lower effective tax rate (than workers) ... that the effective ratio of executive pay to worker pay is actually larger than the gross (before tax) ratio (if the 400:1 ratio is gross before tax compensation ... might the after tax compensation ratio be more like 500:1?).
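The arithmetic behind that question can be sketched as follows. The two effective tax rates used here (15% for executives, 32% for workers) are purely hypothetical values picked to illustrate the calculation; they do not come from the referenced article.

```python
def after_tax_ratio(gross_ratio, exec_rate, worker_rate):
    """Executive-to-worker ratio of take-home pay.

    gross_ratio : before-tax pay ratio (e.g. 400 for 400:1)
    exec_rate   : executive effective tax rate (tax paid / total compensation)
    worker_rate : worker effective tax rate
    """
    return gross_ratio * (1.0 - exec_rate) / (1.0 - worker_rate)


# Hypothetical effective rates, for illustration only.
ratio = after_tax_ratio(400, exec_rate=0.15, worker_rate=0.32)
print(f"after-tax ratio is roughly {ratio:.0f}:1")
```

With any executive rate lower than the worker rate, the after-tax ratio comes out larger than the gross ratio; how much larger depends entirely on the assumed rates.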

--

40+yrs virtualization experience (since Jan68), online at home since Mar70

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: Future architectures Newsgroups: alt.comp.hardware.pc-homebuilt,comp.arch,sci.electronics.design,alt.folklore.computers Date: Wed, 27 Aug 2008 10:37:00 -0400

rpw3@rpw3.org (Rob Warnock) writes:

besides the mentioned paging algorithm work as undergraduate in the 60s, i had also done a lot of scheduling algorithm and other performance related work (all of it shipping in cp67 product). in the (simplification) morph from cp67 to vm370 ... a lot of that work was dropped.

i had moved a lot of the work (that had been dropped in the morph) to vm370 and made it available in internally distributed systems ... some recent posts with references

https://www.garlic.com/~lynn/2008l.html#72 Error handling for system calls

https://www.garlic.com/~lynn/2008l.html#82 Yet another squirrel question

when the future system project failed

https://www.garlic.com/~lynn/submain.html#futuresys

there was something of a mad rush to get stuff back into the 370 product pipeline (which had been neglected ... on some assumptions that future system would replace 370). this was possibly some of the motivation to pick up & release much of the stuff that I had been doing (during the future system period). some recent references:

https://www.garlic.com/~lynn/2008m.html#1 Yet another squirrel question

https://www.garlic.com/~lynn/2008m.html#10 Unbelievable Patent for JCL

one of the features that I had added when moving a lot of my stuff from cp67 to vm370 ... was some scheduling cache optimization (with the increasing use of caches on 370 processors). Nominally, the system was enabled for (asynchronous) i/o interrupts ... which can put a lot of downward pressure on the cache hit ratio. The scheduler would look at relative i/o interrupt rates ... and change from generally enabled for i/o interrupts to mostly disabled for i/o interrupts, with a periodic check for pending i/o interrupts. This traded off cache-hit performance against i/o service time latency.
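A minimal sketch of that kind of mode switch, as a simplified model and not the actual vm370 code. The class name, the two rate thresholds, and the use of two thresholds (hysteresis, so the mode does not flap) are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class IoModePolicy:
    # Hypothetical thresholds in interrupts per second.
    high_rate: float = 1000.0   # above this, stop taking interrupts asynchronously
    low_rate: float = 500.0     # below this, go back to fully enabled
    mode: str = "enabled"       # "enabled" or "polled"

    def update(self, interrupts, interval_sec):
        """Pick the i/o interrupt mode for the next interval.

        "enabled": interrupts are taken as they arrive (low i/o latency,
                   more cache disruption).
        "polled" : mostly disabled, with a periodic check for pending
                   interrupts (better cache hit ratio, higher i/o latency).
        """
        rate = interrupts / interval_sec
        if self.mode == "enabled" and rate > self.high_rate:
            self.mode = "polled"
        elif self.mode == "polled" and rate < self.low_rate:
            self.mode = "enabled"
        return self.mode


policy = IoModePolicy()
for count in (100, 2000, 800, 100):
    print(count, policy.update(count, interval_sec=1.0))
```

The gap between the two thresholds is a design choice of this sketch: a rate that hovers near a single cutoff would otherwise flip the mode every interval.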

for other topic drift ... there was a survey of some number of operations during the summer of '81 (which included some KL10 and Vax systems) ... this post has some excerpts from that survey (along with some comments about time-sharing comparison between cp67 and some KL10 systems):

https://www.garlic.com/~lynn/2001l.html#61

other posts with other excerpts from that survey

https://www.garlic.com/~lynn/2006n.html#56

https://www.garlic.com/~lynn/2008m.html#11

other past posts mentioning scheduling/performance work

https://www.garlic.com/~lynn/subtopic.html#fairshare

other past posts mentioning paging algorithm work

https://www.garlic.com/~lynn/subtopic.html#wsclock

--

40+yrs virtualization experience (since Jan68), online at home since Mar70

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: IBM THINK original equipment sign Newsgroups: bit.listserv.ibm-main,alt.folklore.computers Date: Thu, 28 Aug 2008 11:00:46 -0400

sebastian@WELTON.DE (Sebastian Welton) writes:

it has gotten a little dinged over the years.

the internal network

https://www.garlic.com/~lynn/subnetwork.html#internalnet

was larger than arpanet/internet from just about the beginning until sometime possibly late '85 or early '86.

past reference mentioning the 1000th node

https://www.garlic.com/~lynn/99.html#112

https://www.garlic.com/~lynn/internet.htm#22

another post mentioning corporate locations that added one or more new hosts/nodes on the internal network that year

https://www.garlic.com/~lynn/2006k.html#8 Arpa address

the internal network was originally developed at the science center

https://www.garlic.com/~lynn/subtopic.html#545tech

the same place that originated virtual machines, GML, lots of interactive stuff.

for recent slightly related networking post about a couple yrs earlier (1980) ... 300 people from the IMS group having to be moved to offsite location ... because STL had filled up (includes screen shot of the 3270 logon logo):

https://www.garlic.com/~lynn/2008m.html#20 IBM-MAIN longevity

One of the interesting aspects of the internal network implementation was that it effectively had a form of gateway implementation in every node. this became important when interfacing with hasp/jes networking implementations.

part of the issue was that hasp/jes networking started off defining nodes using spare slots in the 255-entry table for pseudo (unit record) devices ... a typical hasp/jes might have only 150 entries available for defining network nodes. the hasp/jes implementation also had a habit of discarding traffic where the originating node and/or the destination node wasn't in its internal table. the internal network quickly exceeded the number of nodes that could be defined in hasp/jes ... and that, together with its proclivity for discarding traffic ... pretty much relegated hasp/jes to boundary nodes. by the time hasp/jes got around to increasing the limit to 999 nodes ... the internal network was already over 1000 nodes ... and by the time it was further increased to 1999 nodes ... the internal network was over 2000 nodes.

the hasp/jes implementation also had a design flaw where the network information was intermingled with other hasp/jes processing control information (as opposed to clean separation). the periodic outcome was that two hasp/jes systems at different release levels were typically unable to communicate ... and in some cases, release incompatibilities could cause other hasp/jes systems to crash (there is an infamous scenario where a san jose hasp/jes system was crashing Hursley hasp/jes systems).
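The effect of the table limit plus the discard behavior can be modeled roughly as below. This is only an illustrative model, not hasp/jes code: the function names, the node names, and the figure of 105 slots already used by pseudo devices (leaving 150 free, the typical number mentioned above) are assumptions.

```python
TABLE_SLOTS = 255  # size of the shared pseudo-device table


def build_node_table(pseudo_devices_in_use, network_nodes):
    """Define as many network nodes as fit in the spare table slots."""
    free = max(TABLE_SLOTS - pseudo_devices_in_use, 0)
    return set(network_nodes[:free])


def accept(table, origin, destination):
    """Traffic is kept only if both origin and destination are defined locally;
    anything else is discarded."""
    return origin in table and destination in table


# Hypothetical network of 1000 nodes seen by a system with 150 spare slots.
nodes = [f"NODE{i}" for i in range(1000)]
table = build_node_table(pseudo_devices_in_use=105, network_nodes=nodes)

print(len(table))                          # nodes that could be defined
print(accept(table, "NODE1", "NODE2"))     # both defined
print(accept(table, "NODE1", "NODE500"))   # destination not defined
```

Once the network has more nodes than spare slots, a system like this silently drops traffic for every node it cannot define, which is why it only works at the edge of the network.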

As a result of this combination, the internal networking support started accumulating some number of "release-specific" hasp/jes "drivers" ... where an intermediate internal network node was configured to start the corresponding hasp/jes driver for the system on the other end of the wire. As the problems with release incompatibilities between hasp/jes systems increased ... the internal network code evolved a canonical hasp/jes representation ... and drivers would translate the format to the specific hasp/jes release (as appropriate). In the Hursley crashing scenario ... somebody even got around to blaming the internal network code for not preventing a san jose hasp/jes system from crashing Hursley hasp/jes systems.

By the time BITNET started
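The canonical-representation idea can be sketched as below. The release names ("r3", "r4") and both wire formats are invented for illustration and have nothing to do with real hasp/jes headers; the point is only the structure, where each driver translates between one release's format and a single canonical record.

```python
from typing import Callable, NamedTuple


class Record(NamedTuple):
    """Canonical representation used inside the intermediate node."""
    origin: str
    destination: str
    payload: str


class Driver(NamedTuple):
    decode: Callable[[str], Record]   # release format -> canonical
    encode: Callable[[Record], str]   # canonical -> release format


# Hypothetical release "r3": origin,destination,payload
def _decode_r3(raw):
    origin, destination, payload = raw.split(",", 2)
    return Record(origin, destination, payload)


def _encode_r3(rec):
    return f"{rec.origin},{rec.destination},{rec.payload}"


# Hypothetical release "r4": destination;origin;payload
def _decode_r4(raw):
    destination, origin, payload = raw.split(";", 2)
    return Record(origin, destination, payload)


def _encode_r4(rec):
    return f"{rec.destination};{rec.origin};{rec.payload}"


DRIVERS = {
    "r3": Driver(_decode_r3, _encode_r3),
    "r4": Driver(_decode_r4, _encode_r4),
}


def relay(raw, from_release, to_release):
    """Translate traffic via the canonical form, so the receiving system only
    ever sees its own release's format."""
    record = DRIVERS[from_release].decode(raw)
    return DRIVERS[to_release].encode(record)


print(relay("SJC,HUR,hello", "r3", "r4"))
```

With a canonical form in the middle, supporting a new release means adding one driver, not one translator per pair of releases.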

https://www.garlic.com/~lynn/subnetwork.html#bitnet