From: Lynn Wheeler <lynn@garlic.com> Subject: Assembler language and code optimization Date: 22 Feb, 2024 Blog: Facebook re:

topic drift, early 80s, co-worker left IBM SJR and was doing contracting work in silicon valley, optimizing (fortran) HSPICE, lots of work for the senior engineering VP at major VLSI company, etc. He did a lot of work on AT&T C compiler (bug fixes and code optimization) getting it running on CMS ... and then ported a lot of the BSD chip tools to CMS.

One day the IBM rep came through and asked him what he was doing ... he said ethernet support for using SGI workstations as graphical frontends. The IBM rep told him that instead he should be doing token-ring support or otherwise the company might not find its mainframe support as timely as it has been in the past. I then get an hour-long phone call listening to four letter words. The next morning the senior VP of engineering calls a press conference to say the company is completely moving off all IBM mainframes to SUN servers. There were then IBM studies of why silicon valley wasn't using IBM mainframes ... but they weren't allowed to consider branch office marketing issues.

some past posts mentioning HSPICE

https://www.garlic.com/~lynn/2021j.html#36 Programming Languages in IBM

https://www.garlic.com/~lynn/2021h.html#69 IBM Graphical Workstation

https://www.garlic.com/~lynn/2021d.html#42 IBM Powerpoint sales presentations

https://www.garlic.com/~lynn/2021.html#77 IBM Tokenring

https://www.garlic.com/~lynn/2011p.html#119 Start Interpretive Execution

https://www.garlic.com/~lynn/2003n.html#26 Good news for SPARC

https://www.garlic.com/~lynn/2002h.html#4 Future architecture

https://www.garlic.com/~lynn/2002h.html#3 Future architecture

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Vintage REXX Date: 22 Feb, 2024 Blog: Facebook

In the very early 80s, I wanted to demonstrate that REX(X) was not just another pretty scripting language (before it was renamed REXX and released to customers). I decided on redoing a large assembler application (dump processor & fault analysis) in REX with ten times the function and ten times the performance (lots of hacks and sleight of hand were done to make interpreted REX run faster than the assembler version), working half time over three months elapsed. I finished early, so I started writing an automated script that searched for the most common failure signatures. It also included a pseudo dis-assembler ... converting storage areas into instruction sequences ... and would format storage according to specified dsects. I got a softcopy of messages&codes and could index applicable information. I had thought that it would be released to customers, but for whatever reason it wasn't (even tho it was in use by most PSRs and internal datacenters) ... however, I finally did get permission to give talks on the implementation at user group meetings ... and within a few months similar implementations started showing up at customer shops.

... oh and it could be run either as a console command/session ... or as an xedit macro ... so everything was captured as an xedit file

.. later the 3090 service processor guys (3092) asked to ship it installed on the service processor
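A pseudo dis-assembler like the one described above can rely on a property of the 360 instruction format. The two high-order bits of the opcode byte determine the instruction length: 00 gives 2 bytes, 01 or 10 gives 4 bytes, and 11 gives 6 bytes.

The Python sketch below is hypothetical and is not the original REX code. It only walks a storage area in instruction-length steps and names a handful of opcodes from a small illustrative table. The names `LENGTHS`, `MNEMONICS` and `pseudo_disassemble` are made up for the example.

```python
# Hypothetical sketch, not the original REX dump-analysis code: split a
# storage area into System/360-style instructions using the architected
# rule that the opcode's two high-order bits give the instruction length.

# Instruction length in bytes, indexed by the opcode's two high-order bits.
LENGTHS = {0b00: 2, 0b01: 4, 0b10: 4, 0b11: 6}

# A few real S/360 opcodes, purely for illustration (not a complete table).
MNEMONICS = {
    0x05: "BALR", 0x07: "BCR", 0x18: "LR", 0x1A: "AR",
    0x41: "LA", 0x47: "BC", 0x50: "ST", 0x58: "L",
    0x90: "STM", 0x98: "LM", 0xD2: "MVC",
}


def pseudo_disassemble(storage: bytes, base: int = 0):
    """Yield (address, mnemonic, hex text) for consecutive instructions."""
    offset = 0
    while offset < len(storage):
        opcode = storage[offset]
        length = LENGTHS[opcode >> 6]
        chunk = storage[offset:offset + length]
        if len(chunk) < length:
            # Storage area ends in the middle of an instruction.
            yield base + offset, "????", chunk.hex().upper()
            break
        name = MNEMONICS.get(opcode, f"X'{opcode:02X}'")
        yield base + offset, name, chunk.hex().upper()
        offset += length


if __name__ == "__main__":
    # STM 14,12,12(13) / BALR 12,0 / MVC 0(8,1),0(2)
    area = bytes.fromhex("90ECD00C05C0D20710002000")
    for addr, name, text in pseudo_disassemble(area, base=0x1000):
        print(f"{addr:06X}  {name:<6} {text}")
```

A fuller version would go on to decode operands by instruction format (RR, RX, RS/SI, SS). The sketch stops at splitting and naming.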

dumprx posts

https://www.garlic.com/~lynn/submain.html#dumprx

--


From: Lynn Wheeler <lynn@garlic.com> Subject: Re: Can a compiler work without an Operating System? Newsgroups: alt.folklore.computers Date: Thu, 22 Feb 2024 23:48:01 -1000

John Levine <johnl@taugh.com> writes:

I then modified it with an assembler option that generated either the stand-alone version (took 30mins to assemble) or the OS/360 version with system services macros (took 60mins to assemble, each DCB macro taking 5-6mins). I quickly learned the 1st thing coming in sat. morning was to clean the tape drives and printers, disassemble the 2540 reader/punch, clean it and reassemble it. Also sometimes sat. morning, production had finished early and everything was powered off. Sometimes the 360/30 wouldn't power on, and from reading manuals and trial&error I learned to place all the controllers in CE mode, power on the 360/30, individually power on each controller, and then place them back in normal mode.

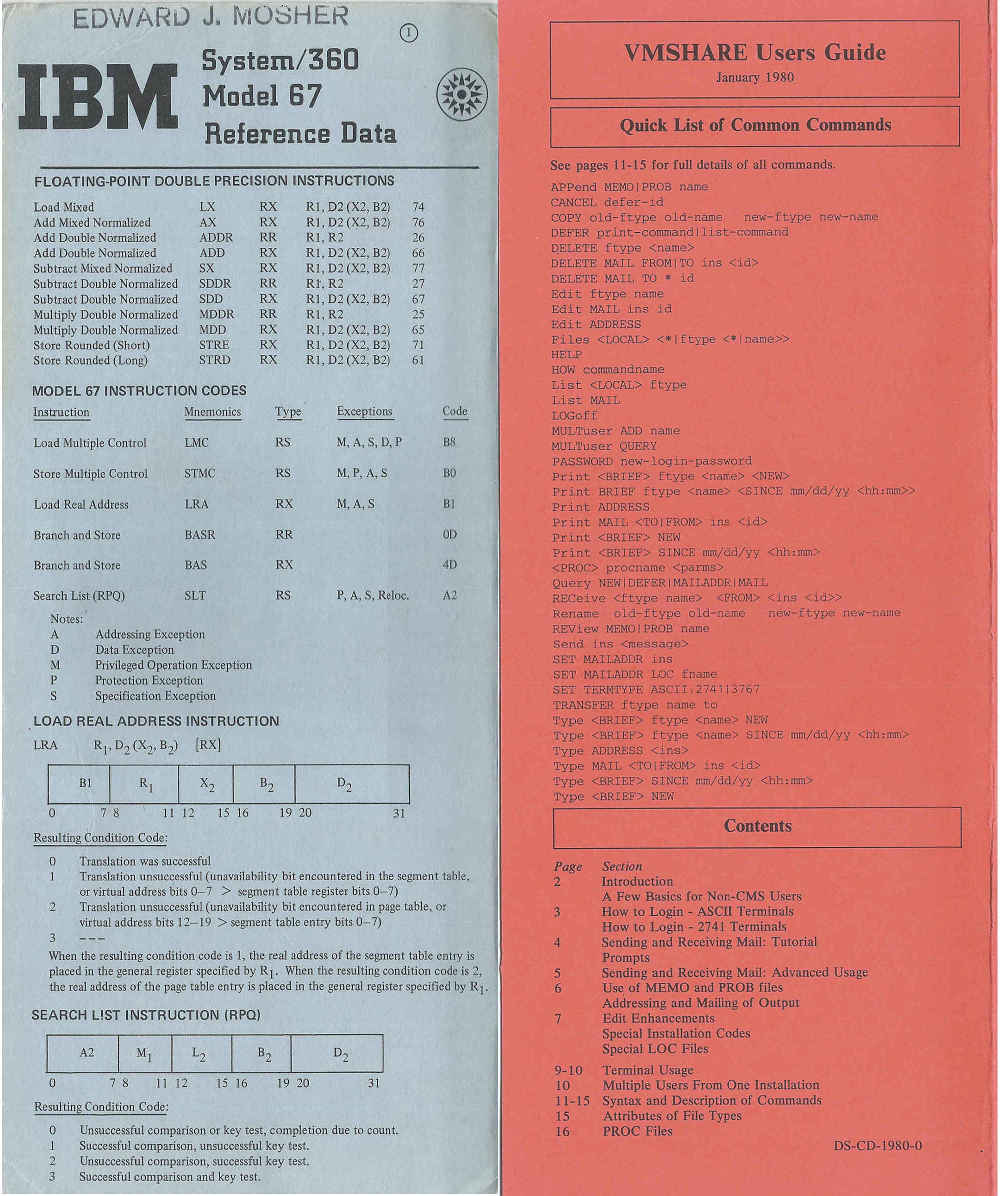

Within a year of the intro class, the 360/67 arrived and I was hired fulltime, responsible for os/360 (TSS/360 never came to production, so it ran as a 360/65). Then some people from the science center came out to install CP67 (precursor to vm370) ... the 3rd installation after CSC itself and MIT Lincoln Labs; and I mostly played with it in my 48hr weekend window. Initially the cp67 kernel was a couple dozen assembler source routines originally kept on os/360 ... individually assembled, with the txt output placed in a card tray in the correct order behind the BPS loader to IPL the kernel ... which writes the memory image to disk; the disk can then be IPLed to run CP67. Each module TXT deck had a diagonal stripe and the module name across the top ... so an individual module could easily be identified and replaced in the card tray. Later the CP67 source was moved to CMS, and it was possible to assemble and then virtually punch the BPS loader and TXT output, transfer it to an input reader, (virtually) IPL it, and have it written to disk (potentially even the production system disk) for re-ipl.

One of the things I worked on was a mechanism for paging part of the CP67 kernel (reducing the fixed storage requirement) ... reworking some code into 4kbyte segments ... which increased the number of ESD entry symbols ... and eventually I started running into the BPS loader's 255 ESD limit.

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

posts mentioning BPS loader, MPIO work and CP67

https://www.garlic.com/~lynn/2024.html#87 IBM 360

https://www.garlic.com/~lynn/2023g.html#39 Vintage Mainframe

https://www.garlic.com/~lynn/2023f.html#102 MPIO, Student Fortran, SYSGENS, CP67, 370 Virtual Memory

https://www.garlic.com/~lynn/2023f.html#83 360 CARD IPL

https://www.garlic.com/~lynn/2022.html#26 Is this group only about older computers?

https://www.garlic.com/~lynn/2021i.html#61 Virtual Machine Debugging

https://www.garlic.com/~lynn/2021b.html#27 DEBE?

https://www.garlic.com/~lynn/2020.html#32 IBM TSS

https://www.garlic.com/~lynn/2019e.html#19 All programmers that developed in machine code and Assembly in the 1940s, 1950s and 1960s died?

https://www.garlic.com/~lynn/2019b.html#51 System/360 consoles

https://www.garlic.com/~lynn/2018f.html#75 CP67 & EMAIL history

https://www.garlic.com/~lynn/2018f.html#51 All programmers that developed in machine code and Assembly in the 1940s, 1950s and 1960s died?

https://www.garlic.com/~lynn/2018d.html#104 OS/360 PCP JCL

https://www.garlic.com/~lynn/2017h.html#49 System/360--detailed engineering description (AFIPS 1964)

https://www.garlic.com/~lynn/2015b.html#15 What were the complaints of binary code programmers that not accept Assembly?

https://www.garlic.com/~lynn/2009h.html#12 IBM Mainframe: 50 Years of Big Iron Innovation

--


From: Lynn Wheeler <lynn@garlic.com> Subject: Re: Bypassing VM Newsgroups: comp.arch Date: Fri, 23 Feb 2024 09:47:29 -1000

jgd@cix.co.uk (John Dallman) writes:

a little more than a decade ago, I was asked to track down the decision to add virtual memory to all IBM 370s. Turns out MVT storage management was so bad that program execution "regions" had to be specified four times larger than used ... a typical one mbyte 370/165 would only run four concurrently executing regions, insufficient to keep the machine busy and justified. Running MVT in a 16mbyte virtual memory would allow increasing concurrently executing regions by four times with little or no paging (similar to running MVT in a CP67 16mbyte virtual machine).
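Here is a back-of-envelope sketch of the four-times arithmetic. It assumes, as the text describes, that each region actually touches only a quarter of its specified size and that four specified regions fill 1mbyte of real storage. It ignores the MVT nucleus and any paging overhead, and the function names are hypothetical.

```python
# Back-of-envelope sketch under stated assumptions (not measured data).
KB = 1024
MB = 1024 * KB


def regions_real(real_storage: int, region_size: int) -> int:
    """Real-storage MVT: every region occupies its full specified size."""
    return real_storage // region_size


def regions_virtual(real_storage: int, virtual_storage: int,
                    region_size: int, used_fraction: float) -> int:
    """Virtual-memory MVT: only the part of each region actually touched
    needs real page frames (assumes no paging pressure, ignores nucleus).
    Still bounded by how many full-size regions fit in the address space."""
    by_real = int(real_storage // (region_size * used_fraction))
    by_virtual = virtual_storage // region_size
    return min(by_real, by_virtual)


if __name__ == "__main__":
    real = 1 * MB          # "typical one mbyte 370/165"
    region = real // 4     # assumed: four specified regions fill real storage
    print(regions_real(real, region))                    # 4
    print(regions_virtual(real, 16 * MB, region, 0.25))  # 16
```

Under these assumptions, the 16mbyte address space is not the limit: 64 such regions would fit in it. Real storage for the touched pages is the limit, which gives the factor of four.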

archived post with pieces of email exchange with somebody that reported to IBM executive making the 370 virtual memory decision

https://www.garlic.com/~lynn/2011d.html#73

a few recent posts mentioning Boeing Huntsville MVT13 work and decision adding virtual memory to all 370s:

https://www.garlic.com/~lynn/2024.html#87 IBM 360

https://www.garlic.com/~lynn/2023g.html#81 MVT, MVS, MVS/XA & Posix support

https://www.garlic.com/~lynn/2023g.html#39 Vintage Mainframe

https://www.garlic.com/~lynn/2023g.html#19 OS/360 Bloat

https://www.garlic.com/~lynn/2023f.html#110 CSC, HONE, 23Jun69 Unbundling, Future System

https://www.garlic.com/~lynn/2023e.html#34 IBM 360/67

https://www.garlic.com/~lynn/2021k.html#106 IBM Future System

360/67

https://en.wikipedia.org/wiki/IBM_System/360-67

CP67/CMS history

https://en.wikipedia.org/wiki/History_of_CP/CMS

science center (responsible for cp67/cms) posts

https://www.garlic.com/~lynn/subtopic.html#545tech

--


From: Lynn Wheeler <lynn@garlic.com> Subject: "Under God" (1954), "In God We Trust" (1956) Date: 23 Feb, 2024 Blog: Facebook

Pledge of Allegiance (1923)

In God We Trust (1956) ... replacing "Out of many, one"

https://en.wikipedia.org/wiki/In_God_We_Trust

note that John Foster Dulles played a major role rebuilding Germany's economy, industry, and military from the 20s up through the early 40s

https://www.amazon.com/Brothers-Foster-Dulles-Allen-Secret-ebook/dp/B00BY5QX1K/

loc865-68:

In mid-1931 a consortium of American banks, eager to safeguard their investments in Germany, persuaded the German government to accept a loan of nearly $500 million to prevent default. Foster was their agent. His ties to the German government tightened after Hitler took power at the beginning of 1933 and appointed Foster's old friend Hjalmar Schacht as minister of economics.

loc905-7:

Foster was stunned by his brother's suggestion that Sullivan & Cromwell quit Germany. Many of his clients with interests there, including not just banks but corporations like Standard Oil and General Electric, wished Sullivan & Cromwell to remain active regardless of political conditions.

loc938-40:

At least one other senior partner at Sullivan & Cromwell, Eustace Seligman, was equally disturbed. In October 1939, six weeks after the Nazi invasion of Poland, he took the extraordinary step of sending Foster a formal memorandum disavowing what his old friend was saying about Nazism

... snip ...

June 1940, Germany had a victory celebration at the NYC Waldorf-Astoria with major industrialists. Lots of them were there to hear how to do business with the Nazis

https://www.amazon.com/Man-Called-Intrepid-Incredible-Narrative-ebook/dp/B00V9QVE5O/

somewhat of a replay of the Nazi celebration: after the war, 5000 industrialists and corporations from across the US had a conference at the Waldorf-Astoria and, in part because they had gotten such a bad reputation for the depression and supporting Nazis, as part of attempting to refurbish their horribly corrupt and venal image, they approved a major propaganda campaign to equate Capitalism with Christianity.

https://www.amazon.com/One-Nation-Under-God-Corporate-ebook/dp/B00PWX7R56/

... other trivia, from the law of unintended consequences: when the US 1943 Strategic Bombing program needed targets in Germany, they got plans and coordinates from Wall Street.

capitalism posts

https://www.garlic.com/~lynn/submisc.html#capitalism

inequality posts

https://www.garlic.com/~lynn/submisc.html#inequality

racism posts

https://www.garlic.com/~lynn/submisc.html#racism

specific posts mentioning Fascism and/or Nazism

https://www.garlic.com/~lynn/2023c.html#80 Charlie Kirk's 'Turning Point' Pivots to Christian Nationalism

https://www.garlic.com/~lynn/2022g.html#39 New Ken Burns Documentary Explores the U.S. and the Holocaust

https://www.garlic.com/~lynn/2022g.html#28 New Ken Burns Documentary Explores the U.S. and the Holocaust

https://www.garlic.com/~lynn/2022g.html#19 no, Socialism and Fascism Are Not the Same

https://www.garlic.com/~lynn/2022e.html#62 Empire Burlesque. What comes after the American Century?

https://www.garlic.com/~lynn/2022e.html#38 Wall Street's Plot to Seize the White House

https://www.garlic.com/~lynn/2022.html#28 Capitol rioters' tears, remorse don't spare them from jail

https://www.garlic.com/~lynn/2021k.html#7 The COVID Supply Chain Breakdown Can Be Traced to Capitalist Globalization

https://www.garlic.com/~lynn/2021j.html#104 Who Knew ?

https://www.garlic.com/~lynn/2021j.html#80 "The Spoils of War": How Profits Rather Than Empire Define Success for the Pentagon

https://www.garlic.com/~lynn/2021i.html#56 "We are on the way to a right-wing coup:" Milley secured Nuclear Codes, Allayed China fears of Trump Strike

https://www.garlic.com/~lynn/2021f.html#80 After WW2, US Antifa come home

https://www.garlic.com/~lynn/2021d.html#11 tablets and desktops was Has Microsoft

https://www.garlic.com/~lynn/2021c.html#96 How Ike Led

https://www.garlic.com/~lynn/2021c.html#23 When Nazis Took Manhattan

https://www.garlic.com/~lynn/2021c.html#18 When Nazis Took Manhattan

https://www.garlic.com/~lynn/2021b.html#91 American Nazis Rally in New York City

https://www.garlic.com/~lynn/2021.html#66 Democracy is a threat to white supremacy--and that is the cause of America's crisis

https://www.garlic.com/~lynn/2021.html#51 Sacking the Capital and Honor

https://www.garlic.com/~lynn/2021.html#46 Barbarians Sacked The Capital

https://www.garlic.com/~lynn/2021.html#44 American Fascism

https://www.garlic.com/~lynn/2021.html#34 Fascism

https://www.garlic.com/~lynn/2021.html#32 Fascism

https://www.garlic.com/~lynn/2020.html#0 The modern education system was designed to teach future factory workers to be "punctual, docile, and sober"

https://www.garlic.com/~lynn/2019e.html#161 Fascists

https://www.garlic.com/~lynn/2019e.html#112 When The Bankers Plotted To Overthrow FDR

https://www.garlic.com/~lynn/2019e.html#107 The Great Scandal: Christianity's Role in the Rise of the Nazis

https://www.garlic.com/~lynn/2019e.html#63 Profit propaganda ads witch-hunt era

https://www.garlic.com/~lynn/2019e.html#43 Corporations Are People

https://www.garlic.com/~lynn/2019d.html#98 How Journalists Covered the Rise of Mussolini and Hitler

https://www.garlic.com/~lynn/2019d.html#94 The War Was Won Before Hiroshima--And the Generals Who Dropped the Bomb Knew It

https://www.garlic.com/~lynn/2019d.html#75 The Coming of American Fascism, 1920-1940

https://www.garlic.com/~lynn/2019c.html#36 Is America A Christian Nation?

https://www.garlic.com/~lynn/2019c.html#17 Family of Secrets

https://www.garlic.com/~lynn/2017e.html#23 Ironic old "fortune"

--


From: Lynn Wheeler <lynn@garlic.com> Subject: Vintage REXX Date: 23 Feb, 2024 Blog: Facebook re:

Some of the MIT 7094/CTSS folks went to the 5th flr to do MULTICS

https://en.wikipedia.org/wiki/Multics

... others went to the IBM Science Center on the 4th flr, did virtual machines, CP40/CMS, CP67/CMS, bunch of online apps, etc

https://en.wikipedia.org/wiki/Cambridge_Scientific_Center

https://en.wikipedia.org/wiki/History_of_CP/CMS

... and the IBM Boston Programming Center was on the 3rd flr, which did CPS

https://en.wikipedia.org/wiki/Conversational_Programming_System

... although a lot was subcontracted out to Allen-Babcock (including the CPS microcode assist for the 360/50)

https://www.bitsavers.org/pdf/allen-babcock/cps/

https://www.bitsavers.org/pdf/allen-babcock/cps/CPS_Progress_Report_may66.pdf

When the decision was made to add virtual memory to all 370s, we started on a modification to CP/67 (CP67H) to support 370 virtual machines (simulating the difference in architecture) and a version of CP/67 that ran on 370 (CP67I, in regular use a year before the 1st engineering 370 machine with virtual memory was operational; later "CP370" was in wide use on real 370s inside IBM). A decision was made to do an official product, morphing CP67->VM370 (dropping and/or simplifying lots of features), and some of the people moved to the 3rd flr to take over the IBM Boston Programming Center ... becoming the VM370 Development group ... when they outgrew the 3rd flr, they moved out to the empty IBM SBC bldg at Burlington Mall on rt128.

After the FS failure and the mad rush to get stuff back into the 370 product pipelines, the head of POK convinced corporate to kill the vm370 product, shut down the development group, and move all the people to POK for MVS/XA ... or supposedly MVS/XA wouldn't be able to ship on time ... endicott managed to save the VM370 product mission, but had to reconstitute a development group from scratch.

trivia: some of the former BPC people did get CPS running on CMS.

IBM science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

future system posts

https://www.garlic.com/~lynn/submain.html#futuresys

some posts mentioning Boston Programming Center, CPS, and Allen-Babcock

https://www.garlic.com/~lynn/2023d.html#87 545tech sq, 3rd, 4th, & 5th flrs

https://www.garlic.com/~lynn/2022e.html#31 Technology Flashback

https://www.garlic.com/~lynn/2022d.html#58 IBM 360/50 Simulation From Its Microcode

https://www.garlic.com/~lynn/2016d.html#35 PL/I advertising

https://www.garlic.com/~lynn/2016d.html#34 The Network Nation, Revised Edition

https://www.garlic.com/~lynn/2014f.html#4 Another Golden Anniversary - Dartmouth BASIC

https://www.garlic.com/~lynn/2014e.html#74 Another Golden Anniversary - Dartmouth BASIC

https://www.garlic.com/~lynn/2013l.html#28 World's worst programming environment?

https://www.garlic.com/~lynn/2013l.html#24 Teletypewriter Model 33

https://www.garlic.com/~lynn/2013c.html#36 Lisp machines, was What Makes an Architecture Bizarre?

https://www.garlic.com/~lynn/2013c.html#8 OT: CPL on LCM systems [was Re: COBOL will outlive us all]

https://www.garlic.com/~lynn/2012n.html#26 Is there a correspondence between 64-bit IBM mainframes and PoOps editions levels?

https://www.garlic.com/~lynn/2012e.html#100 Indirect Bit

https://www.garlic.com/~lynn/2010p.html#42 Which non-IBM software products (from ISVs) have been most significant to the mainframe's success?

https://www.garlic.com/~lynn/2010e.html#14 Senior Java Developer vs. MVS Systems Programmer (warning: Conley rant)

https://www.garlic.com/~lynn/2008s.html#71 Is SUN going to become x86'ed ??

--


From: Lynn Wheeler <lynn@garlic.com> Subject: "Under God" (1954), "In God We Trust" (1956) Date: 24 Feb, 2024 Blog: Facebook re:

The Admissions Game

https://www.nakedcapitalism.com/2023/07/the-admissions-game.html

Back in 1813, Thomas Jefferson and John Adams exchanged a series of letters on what would later come to be called meritocracy. Jefferson argued for a robust system of public education so that a "natural aristocracy" based on virtue and talents could be empowered, rather than an "artificial aristocracy founded on wealth and birth." Adams was skeptical that one could so easily displace an entrenched elite:

... snip ...

... "fake news" dates back at least to the founding of the country; in both the Jefferson and Burr biographies, Hamilton and the Federalists are portrayed as masters of "fake news". Hamilton is also portrayed as believing himself to be an honorable man, while apparently believing, in political and other conflicts, that the ends justified the means. Jefferson was constantly battling for separation of church & state and individual freedom, Thomas Jefferson: The Art of Power,

loc6457-59:

For Federalists, Jefferson was a dangerous infidel. The Gazette of the United States told voters to choose GOD AND A RELIGIOUS PRESIDENT or impiously declare for "JEFFERSON-AND NO GOD."

... snip ...

... Jefferson was targeted as the prime mover behind the separation of church and state. Also Hamilton/Federalists wanting a supreme monarch (above the law) loc5584-88:

The battles seemed endless, victory elusive. James Monroe fed Jefferson's worries, saying he was concerned that America was being "torn to pieces as we are, by a malignant monarchy faction." 34 A rumor reached Jefferson that Alexander Hamilton and the Federalists Rufus King and William Smith "had secured an asylum to themselves in England" should the Jefferson faction prevail in the government.

... snip ...

In the 1880s, the Supreme Court was scammed (by the railroads) into giving corporations "person rights" under the 14th amendment.

https://www.amazon.com/We-Corporations-American-Businesses-Rights-ebook/dp/B01M64LRDJ/

pgxiii/loc45-50:

IN DECEMBER 1882, ROSCOE CONKLING, A FORMER SENATOR and close confidant of President Chester Arthur, appeared before the justices of the Supreme Court of the United States to argue that corporations like his client, the Southern Pacific Railroad Company, were entitled to equal rights under the Fourteenth Amendment. Although that provision of the Constitution said that no state shall "deprive any person of life, liberty, or property, without due process of law" or "deny to any person within its jurisdiction the equal protection of the laws," Conkling insisted the amendment's drafters intended to cover business corporations too.

... snip ...

... testimony falsely claiming the authors of the 14th amendment intended to include corporations pgxiv/loc74-78:

Between 1868, when the amendment was ratified, and 1912, when a scholar set out to identify every Fourteenth Amendment case heard by the Supreme Court, the justices decided 28 cases dealing with the rights of African Americans--and an astonishing 312 cases dealing with the rights of corporations.

pg36/loc726-28:

On this issue, Hamiltonians were corporationalists--proponents of corporate enterprise who advocated for expansive constitutional rights for business. Jeffersonians, meanwhile, were populists--opponents of corporate power who sought to limit corporate rights in the name of the people.

pg229/loc3667-68:

IN THE TWENTIETH CENTURY, CORPORATIONS WON LIBERTY RIGHTS, SUCH AS FREEDOM OF SPEECH AND RELIGION, WITH THE HELP OF ORGANIZATIONS LIKE THE CHAMBER OF COMMERCE.

... snip ...

False Profits: Reviving the Corporation's Public Purpose

https://www.uclalawreview.org/false-profits-reviving-the-corporations-public-purpose/

I Origins of the Corporation. Although the corporate structure dates back as far as the Greek and Roman Empires, characteristics of the modern corporation began to appear in England in the mid-thirteenth century.[4] "Merchant guilds" were loose organizations of merchants "governed through a council somewhat akin to a board of directors," and organized to "achieve a common purpose"[5] that was public in nature. Indeed, merchant guilds registered with the state and were approved only if they were "serving national purposes."[6]

... snip ...

"Why Nations Fail"

https://www.amazon.com/Why-Nations-Fail-Origins-Prosperity-ebook/dp/B0058Z4NR8/

original settlement, Jamestown ... the English planned on emulating the Spanish model, enslaving the local population to support the settlement. Unfortunately the North American natives weren't as cooperative and the settlement nearly starved. Then they switched to sending over some of the other populations from the British Isles essentially as slaves ... the English Crown charters had them as "leet-man" ... pg27:

The clauses of the Fundamental Constitutions laid out a rigid social structure. At the bottom were the leet-men, with clause 23 noting, "All the children of leet-men shall be leet-men, and so to all generations."

... snip ...

My wife's father was presented with a set of 1880 history books for some distinction at West Point by the Daughters Of the 17th Century

http://www.colonialdaughters17th.org/

which say that if it hadn't been for the influence of the Scottish settlers from the mid-atlantic states, the northern/English states would have prevailed and the US would look much more like England, with a monarch ("above the law") and strict class hierarchy. His Scottish ancestors came over after their clan was "broken". A Blackadder WW1 episode had "what does English do when they see a man in a skirt?, they run him through and nick his land". Other history was that the Scots were so displaced that about the only thing left for men was the military.

capitalism posts

https://www.garlic.com/~lynn/submisc.html#capitalism

inequality posts

https://www.garlic.com/~lynn/submisc.html#inequality

racism posts

https://www.garlic.com/~lynn/submisc.html#racism

--


From: Lynn Wheeler <lynn@garlic.com> Subject: IBM Tapes Date: 24 Feb, 2024 Blog: Facebook

Melinda ... and her history efforts:

in the mid-80s, she sent me email asking if I had a copy of the original CMS multi-level source update implementation ... which was an exec implementation repeatedly/incrementally applying the updates ... first to the original source, creating a temporary file, and then repeatedly applying updates to a series of temporary files. I had nearly a dozen tapes in the IBM Almaden Research tape library with (replicated) archives of files from the 60&70s ... and was able to pull off the original (multi-level source update) implementation. Melinda was fortunate, since a few weeks later Almaden had an operational problem where random tapes were being mounted as scratch ... and I lost all my 60&70s archive files.
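The multi-level scheme described above amounts to folding a list of update levels over the source. Each level's output (the "temporary file") becomes the next level's input.

Below is a simplified, hypothetical Python model of that idea. The `("I", ...)`, `("D", ...)` and `("R", ...)` tuples loosely model insert, delete and replace operations keyed by sequence number. They are not the actual CMS UPDATE control-statement syntax. The model also assumes every replace range starts at an existing sequence number.

```python
from typing import List, Tuple

Line = Tuple[int, str]  # (sequence number, source text)


def apply_update(source: List[Line], ops: list) -> List[Line]:
    """Apply one update level and return a new list (the 'temporary file').

    Hypothetical op format (not real CMS UPDATE syntax):
      ("D", first, last)                 delete lines first..last
      ("R", first, last, [new lines])    replace lines first..last
      ("I", after, [new lines])          insert after sequence number `after`
    """
    result: List[Line] = []
    for seq, text in source:
        removed = False
        for op in ops:
            if op[0] in ("D", "R") and op[1] <= seq <= op[2]:
                removed = True
                if op[0] == "R" and seq == op[1]:
                    result.extend(op[3])  # new lines take the range's place
        if not removed:
            result.append((seq, text))
        for op in ops:
            if op[0] == "I" and op[1] == seq:
                result.extend(op[2])
    return result


def apply_levels(source: List[Line], levels: List[list]) -> List[Line]:
    """Apply update levels in order, each to the previous level's output."""
    current = source
    for ops in levels:
        current = apply_update(current, ops)
    return current


if __name__ == "__main__":
    base = [(100, "L 1,A"), (200, "A 1,B"), (300, "ST 1,C"), (400, "BR 14")]
    level1 = [("R", 200, 200, [(200, "AH 1,B")]), ("I", 300, [(310, "LTR 1,1")])]
    level2 = [("D", 310, 310), ("I", 100, [(150, "SLL 1,2")])]
    for seq, text in apply_levels(base, [level1, level2]):
        print(f"{seq:08d} {text}")
```

In this sketch, a later level can refer to sequence numbers introduced by an earlier level. Here `level2` deletes line 310, which `level1` inserted. That dependency is why the levels must be applied in order.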

trivia: late 70s, I had done CMSBACK (incremental backup/archive) for internal datacenters (starting with research and the internal US consolidated online sales&marketing support HONE systems up in Palo Alto) ... and emulated/used standard VOL1 labels. It went through a couple of internal releases and then a group did PC & workstation clients and it was released to customers as Workstations Datasave Facility (WDSF). Then GPD/AdStar took it over and renamed it ADSM ... and when the disk division was unloaded, it became TSM and now IBM Storage Protect.

https://en.wikipedia.org/wiki/IBM_Tivoli_Storage_Manager

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

hone posts

https://www.garlic.com/~lynn/subtopic.html#hone

CMSBACK, backup/archived, storage management

https://www.garlic.com/~lynn/submain.html#backup

posts mentioning Almaden operations mounting random tapes as scratch and losing my 60s&70s archive

https://www.garlic.com/~lynn/2024.html#39 Card Sequence Numbers

https://www.garlic.com/~lynn/2023e.html#82 Saving mainframe (EBCDIC) files

https://www.garlic.com/~lynn/2022e.html#94 Enhanced Production Operating Systems

https://www.garlic.com/~lynn/2022c.html#83 VMworkshop.og 2022

https://www.garlic.com/~lynn/2022.html#65 CMSBACK

https://www.garlic.com/~lynn/2022.html#61 File Backup

https://www.garlic.com/~lynn/2021k.html#51 VM/SP crashing all over the place

https://www.garlic.com/~lynn/2021g.html#89 Keeping old (IBM) stuff

https://www.garlic.com/~lynn/2021.html#22 Almaden Tape Library

https://www.garlic.com/~lynn/2018e.html#86 History of Virtualization

https://www.garlic.com/~lynn/2014g.html#98 After the Sun (Microsystems) Sets, the Real Stories Come Out

https://www.garlic.com/~lynn/2014b.html#92 write rings

https://www.garlic.com/~lynn/2013n.html#60 Bridgestone Sues IBM For $600 Million Over Allegedly 'Defective' System That Plunged The Company Into 'Chaos'

https://www.garlic.com/~lynn/2011m.html#12 Selectric Typewriter--50th Anniversary

https://www.garlic.com/~lynn/2011c.html#4 If IBM Hadn't Bet the Company

https://www.garlic.com/~lynn/2010l.html#0 Old EMAIL Index

https://www.garlic.com/~lynn/2010d.html#65 Adventure - Or Colossal Cave Adventure

https://www.garlic.com/~lynn/2009s.html#17 old email

https://www.garlic.com/~lynn/2009n.html#66 Evolution of Floating Point

--

virtualization experience starting Jan1968, online at home since Mar1970

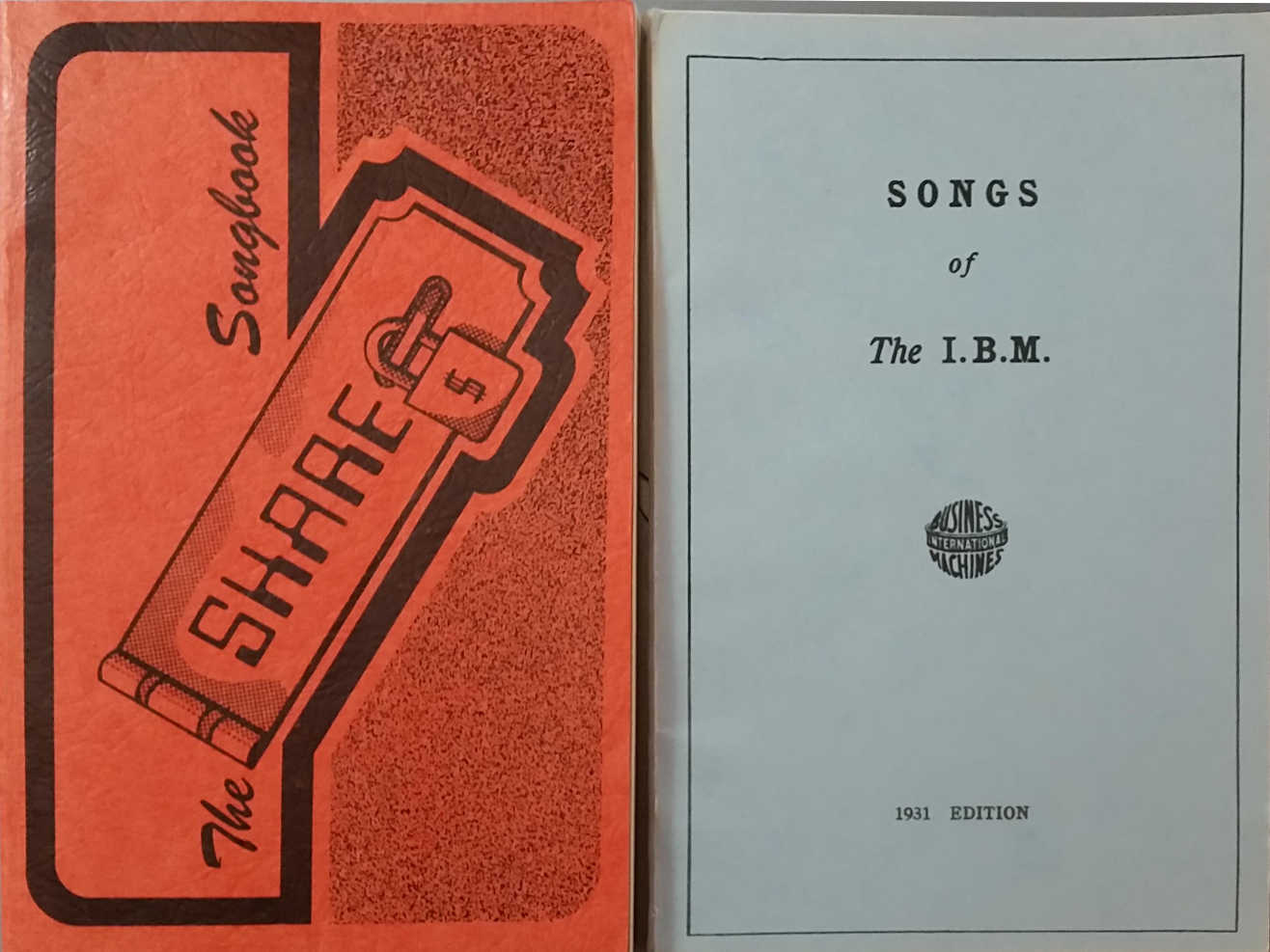

From: Lynn Wheeler <lynn@garlic.com> Subject: IBM User Group SHARE Date: 25 Feb, 2024 Blog: Facebook re:

Melinda's response to earlier email

https://www.garlic.com/~lynn/2011c.html#email860407

https://www.garlic.com/~lynn/2021k.html#email860407

Date: 04/07/86 10:06:34

From: wheeler

re: hsdt; I'm responsible for an advanced technology project called High Speed Data Transport. One of the things it supports is a 6mbit TDMA satellite link (and can be configured with up to 4-6 such links). Several satellite earth stations can share the same link using a pre-slot allocated scheme (i.e. TDMA). The design is fully meshed ... somewhat like a LAN but with 3000 mile diameter (i.e. satellite foot-print).

We have a new interface to the earth station internal bus that allows us to emulate a LAN interface to all other earth stations on the same trunk. Almost all other implementations support emulated point-to-point copper wires ... for 20 node network, each earth station requires 19 terrestrial interface ports ... i.e. (20*19)/2 links. We use a single interface that fits in an IBM/PC. A version is also available that supports standard terrestrial T1 copper wires.

It has been presented to a number of IBM customers, Berkeley, NCAR, NSF director and a couple of others. NSF finds it interesting since 6-36 megabits is 100* faster than the "fast" 56kbit links that a number of other people are talking about. Some other government agencies find it interesting since the programmable encryption interface allows crypto-key to be changed on a packet basis. We have a design that supports data-stream (or virtual circuit) specific encryption keys and multi-cast protocols.

We also have normal HYPERChannel support ... and in fact have done our own RSCS & PVM line drivers to directly support NSC's A220. Ed Hendricks is under contract to the project doing a lot of the software enhancements. We've also uncovered and are enhancing several spool file performance bottlenecks. NSF is asking that we interface to TCP/IP networks.

... snip ... top of post, old email index, NSFNET email
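The email's point about emulated point-to-point links comes down to simple arithmetic. With n stations, each station needs n-1 ports and the full mesh needs n(n-1)/2 links, which is 190 for 20 stations. A shared TDMA channel needs only one interface per station.

The `tdma_frame` function below is a hypothetical round-robin slot assignment that illustrates a pre-slot allocated scheme in general. The email does not describe how HSDT actually allocated slots.

```python
from typing import List, Tuple


def point_to_point_mesh(n: int) -> Tuple[int, int]:
    """Emulated point-to-point links between n stations.
    Returns (ports per station, total links)."""
    ports_per_station = n - 1        # one port to every other station
    total_links = n * (n - 1) // 2   # each link is shared by two stations
    return ports_per_station, total_links


def tdma_frame(stations: List[str], slots_per_frame: int) -> List[str]:
    """Hypothetical fixed (pre-allocated) TDMA frame: slots assigned
    round-robin; each station transmits only in its own slots and every
    other station on the shared channel can receive it."""
    return [stations[i % len(stations)] for i in range(slots_per_frame)]


if __name__ == "__main__":
    ports, links = point_to_point_mesh(20)
    print(f"20 stations: {ports} ports each, {links} links")  # 20 stations: 19 ports each, 190 links
    print(tdma_frame(["A", "B", "C"], 6))                     # ['A', 'B', 'C', 'A', 'B', 'C']
```

Port count per station grows linearly with network size in the mesh, and total links grow quadratically. With a shared channel, adding a station adds one interface.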

note: the communication group was fighting the release of mainframe TCP/IP, but then possibly some influential customers got that reversed ... then the communication group changed their tactics; ... since they had corporate strategic responsibility for everything that crossed datacenter walls, it had to be released through them. What shipped got 44kbytes/sec aggregate using nearly a whole 3090 processor. I then did the support for RFC1044, and in some tuning tests at Cray Research between a Cray and an IBM 4341, got sustained, nearly full 4341 channel throughput using only a modest amount of 4341 CPU (something like 500 times improvement in bytes moved per instruction executed).

HSDT posts

https://www.garlic.com/~lynn/subnetwork.html#hsdt

NSFNET posts

https://www.garlic.com/~lynn/subnetwork.html#nsfnet

internal network posts

https://www.garlic.com/~lynn/subnetwork.html#internalnet

TCP RFC 1044 posts

https://www.garlic.com/~lynn/subnetwork.html#1044

--


From: Lynn Wheeler <lynn@garlic.com> Subject: Re: The Attack of the Killer Micros Newsgroups: comp.arch Date: Mon, 26 Feb 2024 07:58:42 -1000

mitchalsup@aol.com (MitchAlsup1) writes:

2003, 32-processor, max-configured IBM mainframe z990 benchmarked at aggregate 9BIPS

2003, Pentium4 processor benchmarked at 9.7BIPS

some other recent posts mentioning pentium4

https://www.garlic.com/~lynn/2024.html#44 RS/6000 Mainframe

https://www.garlic.com/~lynn/2024.html#52 RS/6000 Mainframe

https://www.garlic.com/~lynn/2024.html#67 VM Microcode Assist

https://www.garlic.com/~lynn/2024.html#81 Benchmarks

https://www.garlic.com/~lynn/2024.html#113 Cobol

Also in 1988, the IBM branch office asked if I could help LLNL standardize some serial stuff they were playing with, which quickly became the fibre channel standard (FCS, initially 1gbit, full-duplex, 200mbytes/sec aggregate). Then some IBM mainframe engineers became involved and defined a heavy-weight protocol that significantly reduced the native throughput, which was released as FICON.

The most recent public benchmark I can find is the "PEAK I/O" benchmark for a max. configured z196 getting 2M IOPS using 104 FICON (running over 104 FCS). About the same time, an FCS was announced for E5-2600 blades claiming over a million IOPS (two of them having higher throughput than 104 FICON). Also, IBM pubs recommend that System Assist Processors ("SAPs", which do the actual I/O) be kept to no more than 70% processor utilization ... which would be about 1.5M IOPS.
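The IOPS comparison above is simple arithmetic on the quoted numbers. A sketch, assuming the 70% SAP ceiling scales the 2M peak linearly and treating "over a million IOPS" per FCS as a lower bound of exactly one million (both are simplifying assumptions, not IBM figures):

```python
# Numbers quoted in the post; the 70% SAP ceiling is applied as a linear cap.
Z196_PEAK_IOPS = 2_000_000
FICON_CHANNELS = 104
FCS_BLADE_IOPS = 1_000_000   # "over a million IOPS" per FCS, taken as a lower bound
SAP_UTILIZATION_CAP = 0.70

iops_per_ficon = Z196_PEAK_IOPS / FICON_CHANNELS
capped_iops = Z196_PEAK_IOPS * SAP_UTILIZATION_CAP
two_fcs_iops = 2 * FCS_BLADE_IOPS

print(f"per FICON: {iops_per_ficon:,.0f} IOPS")
print(f"z196 with SAPs capped at 70%: {capped_iops:,.0f} IOPS")
print(f"two FCS (lower bound): {two_fcs_iops:,} IOPS")
```

A straight 70% scaling gives about 1.4M IOPS, in line with the post's rough "about 1.5M"; each FICON averages under 20K IOPS, while two FCS already reach the full 104-FICON peak.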

FICON, FCS, posts

https://www.garlic.com/~lynn/submisc.html#ficon

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Some NSFNET, Internet, and other networking background. Date: 26 Feb, 2024 Blog: Facebook

Some NSFNET, Internet, and other networking background.

Overview: IBM CEO Learson tried (& failed) to block bureaucrats, careerists, and MBAs from destroying the Watson culture/legacy.

https://www.linkedin.com/pulse/john-boyd-ibm-wild-ducks-lynn-wheeler/

Twenty years later, IBM has one of the largest losses in the history of US companies and was being reorganized into the 13 "baby blues" in preparation for breaking up the company.

https://web.archive.org/web/20101120231857/http://www.time.com/time/magazine/article/0,9171,977353,00.html

https://content.time.com/time/subscriber/article/0,33009,977353-1,00.html

We had already left IBM but get a call from the bowels of Armonk asking if we could help with the breakup of the company. Before we get started, the board brings in the former president of Amex as CEO, who (somewhat) reverses the breakup (although it wasn't long before the disk division is gone)

Inventing the Internet

https://www.linkedin.com/pulse/inventing-internet-lynn-wheeler/

z/VM 50th - part 3

https://www.linkedin.com/pulse/zvm-50th-part-3-lynn-wheeler/

Some NSFNET supercomputer interconnect background leading up to the RFP awarded 24Nov87 (as regional networks connect in, it morphs into the NSFNET "backbone", precursor to the current "Internet").

Date: 09/30/85 17:27:27

From: wheeler

To: CAMBRIDG xxxxx

re: channel attach box; fyi;

I'm meeting with NSF on weds. to negotiate joint project which will install HSDT as backbone network to tie together all super-computer centers ... and probably some number of others as well. Discussions are pretty well along ... they have signed confidentiality agreements and such.

For one piece of it, I would like to be able to use the cambridge channel attach box.

I'll be up in Milford a week from weds. to present the details of the NSF project to ACIS management.

... snip ... top of post, old email index, NSFNET email

Date: 11/14/85 09:33:21

From: wheeler

To: FSD

re: cp internals class;

I'm not sure about 3 days solid ... and/or how useful it might be all at once ... but I might be able to do a couple of half days here and there when I'm in washington for other reasons. I'm there (Alexandria) next tues, weds, & some of thursday.

I expect ... when the NSF joint study for the super computer center network gets signed ... i'll be down there more.

BTW, I'm looking for an IBM 370 processor in the wash. DC area running VM where I might be able to get a couple of userids and install some hardware to connect to a satellite earth station & drive PVM & RSCS networking. It would connect into the internal IBM pilot ... and possibly also the NSF supercomputer pilot.

... snip ... top of post, old email index, NSFNET email

In the early 80s, I also had the HSDT project (T1 and faster computer links) and was working with the NSF director and was supposed to get $20M for NSF supercomputer center interconnects. Then congress cuts the budget, some other things happen and eventually NSF releases an RFP (in part based on what we already had running). From 28Mar1986 Preliminary Announcement:

https://www.garlic.com/~lynn//2002k.html#12

https://www.garlic.com/~lynn//2018d.html#33

The OASC has initiated three programs: The Supercomputer Centers Program to provide Supercomputer cycles; the New Technologies Program to foster new supercomputer software and hardware developments; and the Networking Program to build a National Supercomputer Access Network - NSFnet.

... snip ...

IBM internal politics was not allowing us to bid (being blamed for online computer conferencing inside IBM likely contributed). The NSF director tried to help by writing the company a letter (3Apr1986, NSF Director to IBM Chief Scientist and IBM Senior VP and director of Research, copying IBM CEO) with support from other gov. agencies ... but that just made the internal politics worse (as did claims that what we already had operational was at least 5yrs ahead of the winning bid; RFP awarded 24Nov87). As regional networks connect in, it becomes the NSFNET backbone, precursor to the modern internet.

Date: 04/07/86 10:06:34

From: wheeler

To: Princeton

re: hsdt; I'm responsible for an advanced technology project called High Speed Data Transport. One of the things it supports is a 6mbit TDMA satellite link (and can be configured with up to 4-6 such links). Several satellite earth stations can share the same link using a pre-slot allocated scheme (i.e. TDMA). The design is fully meshed ... somewhat like a LAN but with 3000 mile diameter (i.e. satellite foot-print).

We have a new interface to the earth station internal bus that allows us to emulate a LAN interface to all other earth stations on the same trunk. Almost all other implementations support emulated point-to-point copper wires ... for 20 node network, each earth station requires 19 terrestrial interface ports ... i.e. (20*19)/2 links. We use a single interface that fits in an IBM/PC. A version is also available that supports standard terrestrial T1 copper wires.

It has been presented to a number of IBM customers, Berkeley, NCAR, NSF director and a couple of others. NSF finds it interesting since 6-36 megabits is 100* faster than the "fast" 56kbit links that a number of other people are talking about. Some other government agencies find it interesting since the programmable encryption interface allows crypto-key to be changed on a packet basis. We have a design that supports data-stream (or virtual circuit) specific encryption keys and multi-cast protocols.

We also have normal HYPERChannel support ... and in fact have done our own RSCS & PVM line drivers to directly support NSC's A220. Ed Hendricks is under contract to the project doing a lot of the software enhancements. We've also uncovered and are enhancing several spool file performance bottlenecks. NSF is asking that we interface to TCP/IP networks.

... snip ... top of post, old email index, NSFNET email
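The email's port and link counts follow from the usual full-mesh formula n(n-1)/2. A small sketch of that arithmetic, plus the "100*" speed comparison; the function names are hypothetical, and the 6 Mbit figure is simply the low end of the range quoted in the email:

```python
def full_mesh_links(stations: int) -> int:
    """Dedicated point-to-point circuits needed to connect every pair: n(n-1)/2."""
    return stations * (stations - 1) // 2


def interfaces_per_station(stations: int, shared_tdma: bool) -> int:
    """With emulated point-to-point wires a station needs one port per peer;
    on a shared TDMA channel it needs a single interface."""
    return 1 if shared_tdma else stations - 1


STATIONS = 20
TDMA_BPS = 6_000_000      # low end of the 6-36 megabit range in the email
FAST_LINK_BPS = 56_000    # the "fast" 56kbit links

print(full_mesh_links(STATIONS))                  # circuits for the whole network
print(interfaces_per_station(STATIONS, False))    # ports per station, point-to-point
print(interfaces_per_station(STATIONS, True))     # interfaces per station, shared TDMA
speedup = TDMA_BPS / FAST_LINK_BPS
print(f"{speedup:.0f}x")                          # the email's "100*", roughly
```

For 20 stations this gives 190 circuits and 19 ports at every station, versus one interface per station on the shared link; 6 Mbit over 56 kbit is about 107x.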

Date: 05/05/86 07:19:20

From: wheeler

re: HSDT; For the past year, we have been working with Bob Shahan & NSF to define joint-study with NSF for backbone on the super-computers. There have been several meetings in Milford with ACIS general manager (xxxxx) and the director of development (xxxxx). We have also had a couple of meetings with the director of NSF.

Just recently we had a meeting with Ken King (from Cornell) and xxxxx (from ACIS) to go over the details of who writes the joint study. ACIS has also just brought on a new person to be assigned to this activity (xxxxx). After reviewing some of the project details, King asked for a meeting with 15-20 universities and labs. around the country to discuss various joint-studies and the application of the technology to several high-speed data transport related projects. That meeting is scheduled to be held in June to discuss numerous university &/or NSF related communication projects and the applicability of joint studies with the IBM HSDT project.

I'm a little afraid that the June meeting might turn into a 3-ring circus with so many people & different potential applications in one meeting (who are also possibly being exposed to the technology & concepts for the 1st time). I'm going to try and have some smaller meetings with specific universities (prior to the big get together in June) and attempt to iron out some details beforehand (to minimize the confusion in the June meeting).

... snip ... top of post, old email index, NSFNET email

... somebody in Yorktown Research then called up all the invitees and canceled the meeting

Date: 09/15/86 11:59:48

From: wheeler

To: somebody in paris

re: hsdt; another round of meetings with head of the national science foundation ... funding of $20m for HSDT as experimental communications (although it would be used to support inter-super-computer center links). NSF says they will go ahead and fund. They will attempt to work with DOE and turn this into federal government inter-agency network standard (and get the extra funding).

... snip ... top of post, old email index, NSFNET email

Somebody had collected executive email with lots of how corporate SNA/VTAM could apply to NSFNET (RFP awarded 24Nov1987) and forwarded it to me ... previously posted to newsgroups, but heavily clipped and redacted to protect the guilty

Date: 01/09/87 16:11:26

From: ?????

TO ALL IT MAY CONCERN-

I REC'D THIS TODAY. THEY HAVE CERTAINLY BEEN BUSY. THERE IS A HOST OF MISINFORMATION IN THIS, INCLUDING THE ASSUMPTION THAT TCP/IP CAN RUN ON TOP OF VTAM, AND THAT WOULD BE ACCEPTABLE TO NSF, AND THAT THE UNIVERSITIES MENTIONED HAVE IBM HOSTS WITH VTAM INSTALLED.

Forwarded From: ***** To: ***** Date: 12/26/86 13:41

1. Your suggestions to start working with NSF immediately on high speed (T1) networks is very good. In addition to ACIS I think that it is important to have CPD Boca involved since they own the products you suggest installing. I would suggest that ***** discuss this and plan to have the kind of meeting with NSF that ***** proposes.

... snip ... top of post, old email index, NSFNET email

< ... great deal more of the same; several more appended emails from several participants in the MISINFORMATION ... >

RFP awarded 24Nov87 and RFP kickoff meeting 7Jan1988

https://www.garlic.com/~lynn/2000e.html#email880104

NSFNET posts

https://www.garlic.com/~lynn/subnetwork.html#nsfnet

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

internal network posts

https://www.garlic.com/~lynn/subnetwork.html#internalnet

HSDT posts

https://www.garlic.com/~lynn/subnetwork.html#hsdt

internet posts

https://www.garlic.com/~lynn/subnetwork.html#internet

IBM downturn/downfall/breakup posts

https://www.garlic.com/~lynn/submisc.html#ibmdownfall

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: 3033 Date: 27 Feb, 2024 Blog: Facebook

trivia: 3033 was a quick&dirty remap of 168 logic to 20% faster chips. The 303x channel director (external channels) was a 158 engine with integrated channel microcode (and no 370 microcode). A 3031 was two 158 engines ... one with just 370 microcode and one with just integrated channel microcode. A 3032 was a 168-3 using a channel director for external channels.

When I transferred out to SJR, I got to wander around IBM and non-IBM datacenters in silicon valley, including disk engineering (bldg14) and disk product test (bldg15) across the street. They were running 7x24, pre-scheduled, stand-alone testing and mentioned that they had recently tried MVS ... but it had a 15min mean-time-between-failure (in that environment), requiring manual re-ipl. I offered to rewrite the I/O supervisor, making it bullet proof and never fail, allowing any amount of on-demand, concurrent testing and greatly improving productivity. Then bldg15 got the 1st engineering 3033 outside the POK processor engineering floor. Since testing took only a percent or two of the processor, we scrounged up a 3830 disk controller and a string of 3330 disks and set up our own private online service.

The channel director operation was still a little flaky and would sometimes hang, and somebody would have to go over and reset/re-IMPL it to bring it back. We figured out that if I did CLRCH in fast sequence to all six channels, it would provoke the channel director into doing its own re-IMPL (a 3033 could have three channel directors, so we could have the online service on a channel director separate from the ones used for testing).

posts mentioning getting to play disk engineer in bldg14&15

https://www.garlic.com/~lynn/subtopic.html#disk

... before transferring to SJR, I got roped into a 16-processor, tightly-coupled (shared memory) multiprocessor and we conned the 3033 processor engineers into working on it in their spare time (a lot more interesting than the 168 logic remap). Everybody thought it was great until somebody told the head of POK that it could be decades before the POK favorite son operating system (MVS) had (effective) 16-way support (POK doesn't ship a 16-way machine until after the turn of the century). Then the head of POK invited some of us to never visit POK again and told the 3033 processor engineers to keep their heads down and focus only on 3033. Once the 3033 was out the door, they start on trout/3090.

SMP, multiprocessor, tightly-coupled, shared memory posts

https://www.garlic.com/~lynn/subtopic.html#smp

a few recent posts mentioning mainframe since the turn of century:

https://www.garlic.com/~lynn/2024.html#81 Benchmarks

https://www.garlic.com/~lynn/2024.html#52 RS/6000 Mainframe

https://www.garlic.com/~lynn/2024.html#46 RS/6000 Mainframe

https://www.garlic.com/~lynn/2023g.html#97 Shared Memory Feature

https://www.garlic.com/~lynn/2023g.html#85 Vintage DASD

https://www.garlic.com/~lynn/2023g.html#40 Vintage Mainframe

https://www.garlic.com/~lynn/2023d.html#47 AI Scale-up

https://www.garlic.com/~lynn/2022h.html#113 TOPS-20 Boot Camp for VMS Users 05-Mar-2022

https://www.garlic.com/~lynn/2022h.html#112 TOPS-20 Boot Camp for VMS Users 05-Mar-2022

https://www.garlic.com/~lynn/2022g.html#71 Mainframe and/or Cloud

https://www.garlic.com/~lynn/2022f.html#49 z/VM 50th - part 2

https://www.garlic.com/~lynn/2022f.html#12 What is IBM SNA?

https://www.garlic.com/~lynn/2022f.html#10 9 Mainframe Statistics That May Surprise You

https://www.garlic.com/~lynn/2022e.html#71 FedEx to Stop Using Mainframes, Close All Data Centers By 2024

https://www.garlic.com/~lynn/2022d.html#6 Computer Server Market

https://www.garlic.com/~lynn/2022c.html#111 Financial longevity that redhat gives IBM

https://www.garlic.com/~lynn/2022c.html#67 IBM Mainframe market was Re: Approximate reciprocals

https://www.garlic.com/~lynn/2022c.html#54 IBM Z16 Mainframe

https://www.garlic.com/~lynn/2022c.html#19 Telum & z16

https://www.garlic.com/~lynn/2022c.html#12 IBM z16: Built to Build the Future of Your Business

https://www.garlic.com/~lynn/2022b.html#63 Mainframes

https://www.garlic.com/~lynn/2022b.html#57 Fujitsu confirms end date for mainframe and Unix systems

https://www.garlic.com/~lynn/2022b.html#45 Mainframe MIPS

https://www.garlic.com/~lynn/2022.html#96 370/195

https://www.garlic.com/~lynn/2022.html#84 Mainframe Benchmark

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: 3033 Date: 28 Feb, 2024 Blog: Facebook

re:

Note: the POK favorite son operating system (MVT->SVS->MVS->MVS/XA) was the motivation for doing or not doing lots of stuff, not just hardware, like not doing a 16-way multiprocessor until over two decades later (after the turn of the century with z900, Dec2000).

A little over a decade ago, I was asked to track down the decision to add virtual memory to all 370s ... basically MVT storage management was so bad that it required specifying region sizes four times larger than used ... as a result, a typical 1mbyte 370/165 could only run four regions concurrently, insufficient to keep the 165 busy and justified. Going to a single 16mbyte virtual memory (SVS) would allow increasing the number of concurrently running regions by a factor of four with little or no paging (something like running MVT in a CP67 16mbyte virtual machine, precursor to VM370). However, this still used 4bit storage keys to provide region protection (zero for kernel, 1-15 for regions).

Moving to MVS with a 16mbyte virtual memory for each application further increased concurrently running programs, using different virtual address spaces for separation protection/security. Reference here to customers not moving to MVS like IBM required ... so sales&marketing were provided bonuses to get customers to move

http://www.mxg.com/thebuttonman/boney.asp

However, OS/360 had a pointer-passing API heritage ... so they placed an 8mbyte image of the MVS kernel in every virtual address space ... so the kernel code could directly address calling API data (as if it was still MVT running in real storage). That left just 8mbytes available for the application. Also, subsystems were moved into their own, separate address spaces. However, for a subsystem to access application calling API data, they invented the "Common Segment Area" ... a one mbyte segment mapped into every address space ... where applications would place API calling data so that subsystems could access it using the passed pointer. This reduced the application 16mbyte by another mbyte, leaving just seven mbytes. However, the demand for "Common Segment Area" space turns out to be somewhat proportional to the number of subsystems and concurrently executing applications (machines were getting bigger and faster and needed an increasing number of concurrently executing "regions" to keep them busy) ... and the "Common Segment Area" quickly becomes the "Common System Area" ... by 3033 it was pushing 5-6mbytes (leaving only 2-3mbytes for the application) and threatening to become 8mbytes (plus the 8mbyte kernel area), leaving zero/nothing (out of each application's 16mbyte address space) for an application.
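The address-space squeeze described above is subtraction on a 16mbyte (24-bit) space. A minimal sketch, assuming the kernel image stays a fixed 8mbytes and ignoring any other system areas; the names are illustrative only:

```python
ADDRESS_SPACE_MB = 16   # 24-bit virtual address space
KERNEL_IMAGE_MB = 8     # MVS kernel image mapped into every address space


def application_mb(csa_mb: float) -> float:
    """Private area left for the application once kernel and CSA are mapped."""
    return max(0.0, ADDRESS_SPACE_MB - KERNEL_IMAGE_MB - csa_mb)


for csa in (1, 5, 6, 8):
    print(f"CSA {csa}MB -> {application_mb(csa):g}MB left for the application")
```

With a 1mbyte CSA, 7mbytes remain; at 5-6mbytes, 2-3mbytes remain; and an 8mbyte CSA leaves nothing, matching the progression in the post.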

Lots of 370/XA and MVS/XA was defined (like 31bit addressing and access registers) to address structural/design issues inherited from OS/360. Even getting to MVS/XA was apparently the motivation to convince corporate to kill the VM370 product, shutdown the development group and transfer all the people to POK for MVS/XA (Endicott eventually managed to obtain the VM370 charter for midrange, but had to reconstitute a development group from scratch; senior POK executives were also going around internal datacenters bullying them to move from VM370 to MVS, since while there would be VM370 for mid-range, there would no longer be VM370 for POK high-end machines).

However, even after MVS/XA was available in early 80s, similar to customers not moving to MVS (boney fingers reference), POK was having problems with getting customers to move to MVS/XA. Amdahl was having better success since they had HYPERVISOR (multiple domain facility, virtual machine subset done in microcode) that could run MVS and MVS/XA on the same machine. Now there had been the VMTOOL (& SIE, note SIE was necessary for virtual machine operation on 3081, but 3081 lacked the necessary microcode space, so had to do microcode "paging" seriously affecting performance) virtual machine subset, done in POK supporting MVS/XA development ... but w/o the features and performance for VM370-like production use. To compete with Amdahl, eventually this is shipped as VM/MA (migration aid) and VM/SF (system facility) for running MVS & MVS/XA concurrently for migration support.

recent posts mentioning CSA (common segment/system area)

https://www.garlic.com/~lynn/2024.html#50 Slow MVS/TSO

https://www.garlic.com/~lynn/2023g.html#77 MVT, MVS, MVS/XA & Posix support

https://www.garlic.com/~lynn/2023g.html#48 Vintage Mainframe

https://www.garlic.com/~lynn/2023g.html#29 Another IBM Downturn

https://www.garlic.com/~lynn/2023g.html#2 Vintage TSS/360

https://www.garlic.com/~lynn/2023f.html#26 Ferranti Atlas

https://www.garlic.com/~lynn/2023d.html#36 "The Big One" (IBM 3033)

https://www.garlic.com/~lynn/2023d.html#22 IBM 360/195

https://www.garlic.com/~lynn/2023d.html#9 IBM MVS RAS

https://www.garlic.com/~lynn/2023d.html#0 Some 3033 (and other) Trivia

https://www.garlic.com/~lynn/2022h.html#27 370 virtual memory

https://www.garlic.com/~lynn/2022f.html#122 360/67 Virtual Memory

https://www.garlic.com/~lynn/2022d.html#93 Operating System File/Dataset I/O

https://www.garlic.com/~lynn/2022d.html#55 CMS OS/360 Simulation

https://www.garlic.com/~lynn/2022c.html#69 IBM Mainframe market was Re: Approximate reciprocals

https://www.garlic.com/~lynn/2022c.html#64 IBM Mainframe market was Re: Approximate reciprocals

https://www.garlic.com/~lynn/2022c.html#49 IBM 3033 Personal Computing

https://www.garlic.com/~lynn/2022b.html#19 Channel I/O

https://www.garlic.com/~lynn/2022.html#70 165/168/3033 & 370 virtual memory

https://www.garlic.com/~lynn/2021k.html#113 IBM Future System

https://www.garlic.com/~lynn/2021i.html#17 Versatile Cache from IBM

https://www.garlic.com/~lynn/2021h.html#70 IBM Research, Adtech, Science Center

https://www.garlic.com/~lynn/2021b.html#63 Early Computer Use

https://www.garlic.com/~lynn/2020.html#36 IBM S/360 - 370

other posts mentioning vmtool, sie, vm/ma, vm/sf

https://www.garlic.com/~lynn/2024.html#121 IBM VM/370 and VM/XA

https://www.garlic.com/~lynn/2023g.html#78 MVT, MVS, MVS/XA & Posix support

https://www.garlic.com/~lynn/2023f.html#104 MVS versus VM370, PROFS and HONE

https://www.garlic.com/~lynn/2023e.html#87 CP/67, VM/370, VM/SP, VM/XA

https://www.garlic.com/~lynn/2023e.html#51 VM370/CMS Shared Segments

https://www.garlic.com/~lynn/2023c.html#61 VM/370 3270 Terminal

https://www.garlic.com/~lynn/2023.html#55 z/VM 50th - Part 6, long winded zm story (before z/vm)

https://www.garlic.com/~lynn/2022f.html#49 z/VM 50th - part 2

https://www.garlic.com/~lynn/2022e.html#9 VM/370 Going Away

https://www.garlic.com/~lynn/2022d.html#56 CMS OS/360 Simulation

https://www.garlic.com/~lynn/2022.html#82 Virtual Machine SIE instruction

https://www.garlic.com/~lynn/2021k.html#119 70s & 80s mainframes

https://www.garlic.com/~lynn/2014d.html#17 Write Inhibit

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Re: rusty iron why ``folklore''? Newsgroups: alt.folklore.computers Date: Wed, 28 Feb 2024 12:06:29 -1000

John Levine <johnl@taugh.com> writes:

Initially going to a single SVS 16mbyte virtual memory (something like running MVT in a CP67 16mbyte virtual machine, precursor to VM370) would allow the number of concurrently running regions to be increased by a factor of four with little or no paging.

The problem then was that region&kernel security/integrity was maintained by 4bit storage protection keys (zero for kernel, 1-15 for concurrent regions), and as systems got larger/faster, they needed a further increase in concurrently running "regions" ... thus the SVS->MVS move, with each region getting its own 16mbyte virtual address space (which resulted in a number of additional problems).
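Why a 4bit key caps the number of protected regions can be seen in a simplified store-protection check. This sketch models only the key match (key 0 may store anywhere, otherwise keys must match) and deliberately ignores fetch protection and the other storage-key bits; the function and constant names are hypothetical:

```python
KERNEL_KEY = 0
REGION_KEYS = range(1, 16)  # 4-bit keys: 16 values, key 0 reserved for the kernel


def store_allowed(psw_key: int, storage_key: int) -> bool:
    """Simplified store-protection check: key 0 may store anywhere; any other
    PSW key must match the key assigned to the storage block."""
    for key in (psw_key, storage_key):
        if not 0 <= key <= 15:
            raise ValueError("storage protection keys are 4 bits (0-15)")
    return psw_key == KERNEL_KEY or psw_key == storage_key


max_protected_regions = len(REGION_KEYS)
print(max_protected_regions)
print(store_allowed(3, 3), store_allowed(3, 4), store_allowed(0, 4))
```

Only 15 distinct non-kernel keys exist, so a single shared address space can isolate at most 15 regions from each other; separate address spaces (MVS) remove that ceiling.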

old archived post:

https://www.garlic.com/~lynn//2011d.html#73

a few other recent posts referencing adding virtual memory to all 370s

https://www.garlic.com/~lynn/2024b.html#3 Bypassing VM

https://www.garlic.com/~lynn/2024.html#90 IBM, Unix, editors

https://www.garlic.com/~lynn/2024.html#87 IBM 360

https://www.garlic.com/~lynn/2024.html#50 Slow MVS/TSO

https://www.garlic.com/~lynn/2024.html#27 HASP, ASP, JES2, JES3

https://www.garlic.com/~lynn/2024.html#24 Tomasulo at IBM

https://www.garlic.com/~lynn/2024.html#21 1975: VM/370 and CMS Demo

https://www.garlic.com/~lynn/2024.html#17 IBM Embraces Virtual Memory -- Finally

https://www.garlic.com/~lynn/2023g.html#81 MVT, MVS, MVS/XA & Posix support

https://www.garlic.com/~lynn/2023g.html#39 Vintage Mainframe

https://www.garlic.com/~lynn/2023g.html#19 OS/360 Bloat

https://www.garlic.com/~lynn/2023g.html#6 Vintage Future System

https://www.garlic.com/~lynn/2023g.html#5 Vintage Future System

https://www.garlic.com/~lynn/2023f.html#110 CSC, HONE, 23Jun69 Unbundling, Future System

https://www.garlic.com/~lynn/2023f.html#102 MPIO, Student Fortran, SYSGENS, CP67, 370 Virtual Memory

https://www.garlic.com/~lynn/2023f.html#96 Conferences

https://www.garlic.com/~lynn/2023f.html#90 Vintage IBM HASP

https://www.garlic.com/~lynn/2023f.html#89 Vintage IBM 709

https://www.garlic.com/~lynn/2023f.html#69 Vintage TSS/360

https://www.garlic.com/~lynn/2023f.html#47 Vintage IBM Mainframes & Minicomputers

https://www.garlic.com/~lynn/2023f.html#40 Rise and Fall of IBM

https://www.garlic.com/~lynn/2023f.html#26 Ferranti Atlas

https://www.garlic.com/~lynn/2023f.html#24 Video terminals

https://www.garlic.com/~lynn/2023e.html#100 CP/67, VM/370, VM/SP, VM/XA

https://www.garlic.com/~lynn/2023e.html#70 The IBM System/360 Revolution

https://www.garlic.com/~lynn/2023e.html#65 PDP-6 Architecture, was ISA

https://www.garlic.com/~lynn/2023e.html#64 Computing Career

https://www.garlic.com/~lynn/2023e.html#49 VM370/CMS Shared Segments

https://www.garlic.com/~lynn/2023e.html#43 IBM 360/65 & 360/67 Multiprocessors

https://www.garlic.com/~lynn/2023e.html#34 IBM 360/67

https://www.garlic.com/~lynn/2023e.html#15 Copyright Software

https://www.garlic.com/~lynn/2023e.html#4 HASP, JES, MVT, 370 Virtual Memory, VS2

https://www.garlic.com/~lynn/2023d.html#113 VM370

https://www.garlic.com/~lynn/2023d.html#98 IBM DASD, Virtual Memory

https://www.garlic.com/~lynn/2023d.html#90 IBM 3083

https://www.garlic.com/~lynn/2023d.html#71 IBM System/360, 1964

https://www.garlic.com/~lynn/2023d.html#32 IBM 370/195

https://www.garlic.com/~lynn/2023d.html#24 VM370, SMP, HONE

https://www.garlic.com/~lynn/2023d.html#20 IBM 360/195

https://www.garlic.com/~lynn/2023d.html#17 IBM MVS RAS

https://www.garlic.com/~lynn/2023d.html#9 IBM MVS RAS

https://www.garlic.com/~lynn/2023d.html#0 Some 3033 (and other) Trivia

https://www.garlic.com/~lynn/2023c.html#79 IBM TLA

https://www.garlic.com/~lynn/2023c.html#25 IBM Downfall

https://www.garlic.com/~lynn/2023b.html#103 2023 IBM Poughkeepsie, NY

https://www.garlic.com/~lynn/2023b.html#44 IBM 370

https://www.garlic.com/~lynn/2023b.html#41 Sunset IBM JES3

https://www.garlic.com/~lynn/2023b.html#24 IBM HASP (& 2780 terminal)

https://www.garlic.com/~lynn/2023b.html#15 IBM User Group, SHARE

https://www.garlic.com/~lynn/2023b.html#6 z/VM 50th - part 7

https://www.garlic.com/~lynn/2023b.html#0 IBM 370

https://www.garlic.com/~lynn/2023.html#76 IBM 4341

https://www.garlic.com/~lynn/2023.html#65 7090/7044 Direct Couple

https://www.garlic.com/~lynn/2023.html#50 370 Virtual Memory Decision

https://www.garlic.com/~lynn/2023.html#34 IBM Punch Cards

https://www.garlic.com/~lynn/2023.html#4 Mainrame Channel Redrive

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Machine Room Access Date: 28 Feb, 2024 Blog: Facebook

similar but different ... people in bldg14 disk engineering were not allowed in bldg15 disk product test (and vice versa) to minimize complicity/collusion in product test certifying new hardware. My badge was enabled for just about every bldg and machine room (since most of them all ran my enhanced production systems). Recent comment mentioning the subject:

One Monday morning I got an irate call from bldg15 asking what I did over the weekend to destroy 3033 throughput. It went back and forth a couple times with everybody denying having done anything ... until somebody in bldg15 admitted they had replaced the 3830 disk controller with a test 3880 controller (handling the 3330 string for the private online service). The 3830 had a super fast horizontal microcode processor for everything. The 3880 had a fast hardware path to handle 3380 3mbyte/sec transfers ... but everything else was handled by a really (, really) slow vertical microprocessor.

The 3880 engineers, trying to mask how slow it really was, had a hack to present end-of-operation early ... hoping to be able to complete processing overlapped while the operating system was doing interrupt processing. It wasn't working out that way ... in my I/O supervisor rewrite ... besides making it bullet proof and never fail, I had radically cut the I/O interrupt processing pathlength. I had also added CP67 "CHFREE" back (in the morph of CP67->VM370, lots of stuff was dropped and/or greatly simplified; CP67 CHFREE was a macro that checked for channel I/O redrive as soon as it was "safe" ... as opposed to completely finishing processing of the latest device I/O interrupt). In any case, my I/O supervisor was attempting to restart any queued I/O while the 3880 was still trying to finalize processing of the previous I/O ... which required it to present CU busy (SM+BUSY), which required the system to requeue the attempted I/O for retry later, when the controller presented the CUE interrupt (required after having presented SM+BUSY). The 3880's slow processing overhead reduced the number of I/Os compared to the 3830, and the hack of presenting I/O complete early drove up my system processing overhead.
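The interaction above can be illustrated with a toy timing model. Everything here is hypothetical: time is in abstract ticks, data transfer is treated as instantaneous, the controller reports completion as soon as it accepts a request and then stays busy for `cleanup_ticks`, and the operating system tries the next queued I/O `redrive_ticks` after each completion. It is a sketch of the SM+BUSY/CUE pattern, not a model of actual 3880 or VM370 behavior.

```python
class EarlyEndingController:
    """Toy control unit: presents end-of-operation as soon as a request is
    accepted, then stays busy for `cleanup_ticks` finishing it internally."""

    def __init__(self, cleanup_ticks: int) -> None:
        self.cleanup_ticks = cleanup_ticks
        self.busy_until = 0

    def start_io(self, now: int) -> str:
        if now < self.busy_until:
            return "SM+BUSY"  # control unit busy; a CUE interrupt will follow
        self.busy_until = now + self.cleanup_ticks
        return "ACCEPTED"


def simulate(requests: int, cleanup_ticks: int, redrive_ticks: int) -> tuple:
    """Return (SM+BUSY rejections, elapsed ticks) for a queue of requests.

    redrive_ticks is the OS interrupt path length before it tries the next
    queued I/O; a short path means the redrive hits the controller while busy."""
    cu = EarlyEndingController(cleanup_ticks)
    now = 0
    rejections = 0
    for _ in range(requests):
        if cu.start_io(now) == "SM+BUSY":
            rejections += 1        # extra interrupt, requeue, later retry
            now = cu.busy_until    # CUE arrives when cleanup finishes
            cu.start_io(now)       # redrive after CUE; now accepted
        now += redrive_ticks
    return rejections, now


print(simulate(10, cleanup_ticks=5, redrive_ticks=2))  # short interrupt path
print(simulate(10, cleanup_ticks=5, redrive_ticks=5))  # path as long as cleanup
```

In this toy model the short path finishes only slightly sooner (47 vs 50 ticks) but takes nine SM+BUSY rejections, each costing extra interrupt and requeue processing, which is the overhead pattern the post describes.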

They were increasingly blaming me for problems and I was increasingly having to play disk engineer diagnosing their problems.

playing disk engineer posts

https://www.garlic.com/~lynn/subtopic.html#disk

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: IBM 5100 Date: 28 Feb, 2024 Blog: Facebook

5100

I've disclaimed that almost every time I make the wiki reference (let it slip this time). Even tho I was in research ... I was spending a lot of time up at HONE (US consolidated online sales&marketing support, nearly all APL apps, across the back parking lot from PASC, and PASC was helping a lot with APL consulting and optimization) ... as well as LSG (which had let me have part of a wing with offices and labs)

As HONE clones were sprouting up all over the world ... I believe HONE became the largest use of APL anywhere.

HONE posts

https://www.garlic.com/~lynn/subtopic.html#hone

A more interesting LSG ref (I find it interesting since after leaving IBM, I was on the financial industry standards committee).

https://en.wikipedia.org/wiki/Magnetic_stripe_card#Further_developments_and_encoding_standards

LSG was ASD from the 60s ... it seems that ASD evaporated with the Future System implosion and the mad rush to get stuff back into the 370 product pipelines ... and it appeared that much of ASD was thrown into the development breach (internal politics during the FS period had been shutting down and killing 370 efforts).

Future System posts

https://www.garlic.com/~lynn/submain.html#futuresys

some posts mentioning 5100, PALM, SCAMP:

https://www.garlic.com/~lynn/2013o.html#82 One day, a computer will fit on a desk (1974) - YouTube

https://www.garlic.com/~lynn/2013o.html#11 'Free Unix!': The world-changing proclamation made30yearsagotoday

https://www.garlic.com/~lynn/2012e.html#100 Indirect Bit

https://www.garlic.com/~lynn/2011i.html#55 Architecture / Instruction Set / Language co-design

https://www.garlic.com/~lynn/2011h.html#53 Happy 100th Birthday, IBM!

https://www.garlic.com/~lynn/2010i.html#33 System/3--IBM compilers (languages) available?

https://www.garlic.com/~lynn/2010c.html#54 Processes' memory

https://www.garlic.com/~lynn/2007d.html#64 Is computer history taugh now?

https://www.garlic.com/~lynn/2003i.html#84 IBM 5100

some posts mentioning IBM LSG and magnetic stripe

https://www.garlic.com/~lynn/2017g.html#43 The most important invention from every state

https://www.garlic.com/~lynn/2016.html#100 3270 based ATMs

https://www.garlic.com/~lynn/2013j.html#28 more on the 1975 Transaction Telephone set

https://www.garlic.com/~lynn/2013g.html#57 banking fraud; regulation,bridges,streams

https://www.garlic.com/~lynn/2012g.html#51 Telephones--private networks, Independent companies?

https://www.garlic.com/~lynn/2011b.html#54 Credit cards with a proximity wifi chip can be as safe as walking around with your credit card number on a poster

https://www.garlic.com/~lynn/2010o.html#40 The Credit Card Criminals Are Getting Crafty

https://www.garlic.com/~lynn/2009q.html#78 70 Years of ATM Innovation

https://www.garlic.com/~lynn/2009i.html#75 IBM's 96 column punch card

https://www.garlic.com/~lynn/2009i.html#71 Barclays ATMs hit by computer fault

https://www.garlic.com/~lynn/2009h.html#55 Book on Poughkeepsie

https://www.garlic.com/~lynn/2009h.html#44 Book on Poughkeepsie

https://www.garlic.com/~lynn/2009f.html#39 PIN Crackers Nab Holy Grail of Bank Card Security

https://www.garlic.com/~lynn/2009e.html#51 Mainframe Hall of Fame: 17 New Members Added

https://www.garlic.com/~lynn/2008s.html#25 Web Security hasn't moved since 1995

https://www.garlic.com/~lynn/2006x.html#14 IBM ATM machines

https://www.garlic.com/~lynn/2004p.html#25 IBM 3614 and 3624 ATM's

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: IBM 5100 Date: 28 Feb, 2024 Blog: Facebook

re:

also from 5100 wiki

https://en.wikipedia.org/wiki/IBM_5100#Emulator_in_microcode

IBM later used the same approach for its 1983 introduction of the XT/370 model of the IBM PC, which was a standard IBM PC XT with the addition of a System/370 emulator card.

Endicott sent an early engineering box ... I did a bunch of benchmarks and showed lots of things were page thrashing (aggravated because all I/O had to be requested from CP88 on the XT side, and paging was mapped to the DOS 10mbyte hard disk with 100ms access) ... then they blamed me for a 6month slip in first customer ship while they added another 128kbytes of memory.

I also did a super enhanced page replacement algorithm and a CMS page mapped filesystem ... both helping improve XT/370 throughput. I had originally done the CMS page mapped filesystem for CP67/CMS more than a decade earlier ... before moving it to VM370. FS had adopted a paged "single level store" somewhat from TSS/360; I continued to work on 360&370 all during the FS period, periodically ridiculing what they were doing ... including comments that, for a page-mapped filesystem, I had learned some things not to do from TSS/360 (which nobody in FS appeared to understand).

My CMS page mapped filesystem got 3-4 times the throughput of standard CMS on a moderate filesystem workload benchmark, and the difference grew as the filesystem workload got heavier ... it was able to do all sorts of optimizations that the standard CMS filesystem didn't, for example: 1) contiguous allocation for multi-block transfers, 2) for one-record files, the FST pointed directly to the data block, 3) multi-user shared memory executables defined and loaded directly from the filesystem, 4) filesystem transfers could be overlapped with execution. The apparent problem: while it was contained in my enhanced production operating system distributed and used internally ... the FS implosion had created a bad reputation for anything that smacked, even a little, of (FS) single-level-store.
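To illustrate why contiguous allocation (optimization 1) cuts the number of transfers, here is a minimal Python sketch under a deliberately simplified model: a file is a list of disk block numbers, and each run of adjacent blocks can be moved with a single multi-block I/O request. The function names and block numbers are hypothetical; this is not the actual CMS or page-mapped filesystem code.

```python
from typing import List, Tuple

def contiguous_runs(blocks: List[int]) -> List[Tuple[int, int]]:
    """Group a file's block numbers (in file order) into (start, length) runs of adjacent blocks."""
    runs: List[Tuple[int, int]] = []
    for b in blocks:
        if runs and runs[-1][0] + runs[-1][1] == b:
            # Block continues the previous run: extend it.
            start, length = runs[-1]
            runs[-1] = (start, length + 1)
        else:
            # Block is not adjacent: start a new run.
            runs.append((b, 1))
    return runs

def io_requests(blocks: List[int]) -> int:
    """Transfers needed, assuming (hypothetically) one multi-block I/O per contiguous run."""
    return len(contiguous_runs(blocks))

scattered = [17, 4, 92, 33, 58, 71, 9, 140]   # same 8-block file, scattered allocation
contiguous = list(range(200, 208))            # same 8-block file, contiguous allocation
print(io_requests(scattered))   # 8: every block is its own run
print(io_requests(contiguous))  # 1: one run of 8 blocks
```

The point of the model is only the ratio: the same 8-block file needs 8 separate transfers when scattered and 1 when allocated contiguously.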

page mapped filesystem posts

https://www.garlic.com/~lynn/submain.html#mmap

page replacement algorithm posts

https://www.garlic.com/~lynn/subtopic.html#clock

future system posts

https://www.garlic.com/~lynn/submain.html#futuresys

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: IBM 5100 Date: 29 Feb, 2024 Blog: Facebook

re:

... this mentions "first financial language" at IDC (60s cp67/cms spinoff from the IBM Cambridge Science Center)

https://www.computerhistory.org/collections/catalog/102658182

as an aside, a decade later, the person who did FFL joins with another to form a startup and does the original spreadsheet

https://en.wikipedia.org/wiki/VisiCalc

from 1969 interview I did with IDC

another 60s CP67 spinoff of the IBM Cambridge Science Center was NCSS ... which was later bought by Dun & Bradstreet

https://en.wikipedia.org/wiki/National_CSS

trivia: I was an undergraduate at a univ that had gotten a 360/67 for tss/360 to replace its 709/1401 (but ran it as a 360/65); when the 360/67 came in, I was hired fulltime, responsible for os/360 (the univ. shut down the datacenter on weekends and I would have it dedicated, although 48hrs w/o sleep made monday classes hard). Then CSC came out to install cp/67 (3rd installation after CSC itself and MIT Lincoln Labs) and I mostly played with it on weekends. Over the next 6months, I rewrote a lot of CP67 code, mostly improving running OS/360 in a virtual machine; an OS/360 benchmark ran 322secs on the bare machine and initially 856secs in a virtual machine, with CP67 CPU at 534secs. Six months later I had CP67 CPU down to 113secs (from 534), and CSC announced a week-long class at the Beverly Hills Hilton. I arrived for the class on Sunday and was asked to teach the CP67 class ... the CSC people who were to teach it had resigned on Friday to join NCSS.
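The benchmark numbers are internally consistent: the 856secs virtual run is the 322secs bare-machine time plus the 534secs of CP67 CPU. A small Python sketch of that arithmetic, under an added assumption not stated in the post (that OS/360's own time stays at the bare-machine 322secs), estimates what the later 113secs CP67 figure implies for the total run:

```python
# OS/360 benchmark figures from the post (seconds)
BARE = 322        # bare machine
VIRTUAL_1 = 856   # initial run in a CP67 virtual machine
CP_CPU_1 = 534    # initial CP67 CPU
CP_CPU_2 = 113    # CP67 CPU six months later

# The initial figures fit: virtual run = bare-machine time + CP67 overhead
assert VIRTUAL_1 == BARE + CP_CPU_1

# Assumption: guest (OS/360) time is unchanged at 322secs
virtual_2 = BARE + CP_CPU_2
print(virtual_2)                              # 435
print(round(CP_CPU_1 / CP_CPU_2, 1))          # 4.7 (CP67 overhead reduction)
print(round(VIRTUAL_1 / BARE, 2), round(virtual_2 / BARE, 2))  # 2.66 1.35
```

Under that assumption, the virtual run goes from roughly 2.7 times bare-machine elapsed to roughly 1.35 times.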

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

NCSS ref also mentions

https://en.wikipedia.org/wiki/Nomad_software

even before SQL (& RDBMS), which was originally done on VM370/CMS ... I had worked with Jim Gray and Vera Watson on System/R at IBM SJR; later the tech transfer to Endicott for SQL/DS was done "under the radar" while the company was focused on the next great DBMS, "EAGLE"; then when "EAGLE" imploded, there was a request for how fast System/R could be ported to MVS, which eventually shipped as DB2, originally for decision-support only

system/r posts

https://www.garlic.com/~lynn/submain.html#systemr

... there were other "4th Generation Languages": one of the original 4th generation languages, which Mathematica made available exclusively through NCSS.

http://www.decosta.com/Nomad/tales/history.html

One could say PRINT ACROSS MONTH SUM SALES BY DIVISION and receive a report that would have taken many hundreds of lines of Cobol to produce. The product grew in capability and in revenue, both to NCSS and to Mathematica, who enjoyed increasing royalty payments from the sizable customer base. FOCUS from Information Builders, Inc (IBI), did even better, with revenue approaching a reported $150M per year. RAMIS moved among several owners, ending at Computer Associates in 1990, and has had little limelight since. NOMAD's owners, Thomson, continue to market the language from Aonix, Inc. While the three continue to deliver 10-to-1 coding improvements over the 3GL alternatives of Fortran, Cobol, or PL/1, the movements to object orientation and outsourcing have stagnated acceptance.

... snip ...

other history

https://en.wikipedia.org/wiki/Ramis_software

When Mathematica (also) makes Ramis available to TYMSHARE for their VM370-based commercial online service, NCSS does their own version

https://en.wikipedia.org/wiki/Nomad_software

and then follow-on FOCUS from IBI

https://en.wikipedia.org/wiki/FOCUS

Information Builders's FOCUS product began as an alternate product to Mathematica's RAMIS, the first Fourth-generation programming language (4GL). Key developers/programmers of RAMIS, some stayed with Mathematica others left to form the company that became Information Builders, known for its FOCUS product

... snip ...

4th gen programming language

https://en.wikipedia.org/wiki/Fourth-generation_programming_language

commercial online service providers

https://www.garlic.com/~lynn/submain.html#online

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: IBM 5100 Date: 29 Feb, 2024 Blog: Facebook

re:

This was after the 23jun1969 unbundling (starting to charge for software, SE services, maint. etc). As part of that, US HONE cp/67 datacenters were created for SEs to login and practice with guest operating systems in virtual machines. CSC had also ported APL\360 to CMS for CMS\APL. APL\360 workspaces were 16kbytes (sometimes 32k), with the whole workspace swapped. Its storage management allocated new memory for every assignment ... quickly exhausting the workspace and requiring garbage collection. Moving to CMS\APL, with large demand-paged virtual memory workspaces, resulted in page thrashing, and the storage management had to be redone. CSC also implemented an API for system services (like file i/o), and the combination enabled a lot of real-world applications. HONE started using it for deploying sales&marketing support applications ... which quickly came to dominate all HONE use ... and became the largest use of APL in the world. The Armonk business planners loaded the highest security IBM business data on the CSC system for APL-based business applications (we had to demonstrate really strong security, in part because Boston area institution professors, staff, and students were also using the CSC system).
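The thrashing problem follows from the allocation pattern: every assignment takes fresh storage, so the allocator sweeps through the whole workspace before garbage collection reclaims anything. A minimal Python sketch, using a made-up model (cell counts, page size, and the function name are hypothetical; this is not the APL\360 or CMS\APL code): a bump pointer hands out new storage on each assignment, the old value becomes garbage, and a compacting collection runs only when the workspace is exhausted. It counts the distinct pages written.

```python
def pages_touched(workspace_cells: int, page_cells: int,
                  num_vars: int, value_cells: int, assignments: int) -> int:
    # Hypothetical model of allocate-on-every-assignment storage with a
    # compacting garbage collector; not the actual APL\360 allocator.
    live = {}         # variable -> (offset, size) of its current value
    top = 0           # bump pointer: next free cell
    touched = set()   # page numbers written at least once

    def write(offset: int, size: int) -> None:
        for cell in range(offset, offset + size):
            touched.add(cell // page_cells)

    for i in range(assignments):
        var = i % num_vars
        if top + value_cells > workspace_cells:
            # Workspace exhausted: compact the live values to the bottom.
            top = 0
            for v in sorted(live):
                live[v] = (top, value_cells)
                write(top, value_cells)
                top += value_cells
        # Every assignment gets brand-new storage; the old value becomes garbage.
        live[var] = (top, value_cells)
        write(top, value_cells)
        top += value_cells
    return len(touched)

# Same program (10 small variables reassigned 20,000 times), two workspace sizes
small = pages_touched(16 * 256, 256, num_vars=10, value_cells=64, assignments=20_000)
large = pages_touched(1024 * 256, 256, num_vars=10, value_cells=64, assignments=20_000)
print(small, large)  # 16 1024
```

With a 16-page workspace swapped as a unit, touching every page costs nothing extra; in a large demand-paged workspace the same program drags every page into its working set even though the live data is only 10 small values.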

Later, as part of the morph from CP67->VM370, PASC did APL\CMS for VM370/CMS, the US HONE datacenters were consolidated across the back parking lot from PASC ... and HONE VM370 was enhanced for the max number of 168s in a shared DASD, loosely-coupled, single-system-image operation with load-balancing and fall-over across the complex. After joining IBM, one of my hobbies was enhanced production operating systems for internal datacenters, and HONE was a long time customer back to CP67 days. In the morph of CP67->VM370, lots of features were dropped and/or simplified. During 1974, I migrated lots of CP67 stuff to VM370 Release 2 for HONE and other internal datacenters. Then in 1975, I migrated tightly-coupled multiprocessor support to VM370 Release 3, initially for US HONE so they could add a 2nd CPU to each system. Also, HONE clones (with APL-based sales&marketing support applications) were cropping up all over the world.

23jun1969 unbundling

https://www.garlic.com/~lynn/submain.html#unbundle

CSC posts

https://www.garlic.com/~lynn/subtopic.html#545tech

HONE posts

https://www.garlic.com/~lynn/subtopic.html#hone

SMP, tightly-coupled, multiprocessor posts

https://www.garlic.com/~lynn/subtopic.html#smp

The APL\360 people had been complaining that the CMS\APL API for system services didn't conform to APL design ... and they eventually came out with APL\SV ... claiming its "shared variables" API for using system services conformed to APL design ... followed by VS/APL.

A major HONE APL application was the few hundred kilobyte "SEQUOIA" (PROFS-like) menu user interface for the branch office sales&marketing people. PASC managed to embed this in the shared memory APL module ... instead of one copy in every workspace, there was a single shared copy as part of the APL module. HONE was running my page-mapped CMS filesystem, which supported creating shared memory versions of CMS MODULES (a very small subset of the shared module support was picked up for VM370 Release 3 as DCSS). PASC had also done the APL microcode assist for the 370/145 ... claiming a lot of APL ran with throughput like APL on a 168. The same person had also done the FORTRAN Q/HX optimization enhancement ... which was used to rewrite some of HONE's most compute-intensive APL applications starting in 1974 ... the page-mapped shared CMS MODULES support also allowed dropping out of APL, running a Fortran application, and re-entering APL with the results (transparent to the branch office users).

paged-mapped CMS filesystem posts

https://www.garlic.com/~lynn/submain.html#mmap

Some HONE posts mentioning SEQUOIA

https://www.garlic.com/~lynn/2023g.html#71 MVS/TSO and VM370/CMS Interactive Response

https://www.garlic.com/~lynn/2023f.html#93 CSC, HONE, 23Jun69 Unbundling, Future System

https://www.garlic.com/~lynn/2023f.html#42 Vintage IBM Mainframes & Minicomputers